The Key Stages of Database Schema Design

Database schema design functions as a vital development step for database applications, optimizing data organization and accessibility while ensuring long-term database application maintainability. A systematic method must be followed to achieve data integrity, optimal performance, and scalability.

Database schema design depends heavily on the modern innovations that DevOps has introduced. The standard implementation now includes continuous integration and continuous delivery/deployment (CI/CD) practices and schema versioning, together with backward compatibility tools.

The article examines relational database schema design through its design stages and entity-relationship (ER) modeling and normalization processes. Implementing data security measures, together with consistent naming conventions, performance optimization techniques, and constraints, helps developers create efficient systems that scale well and remain easy to maintain. Post-design monitoring stands as a crucial factor in achieving system effectiveness.

Summary of database schema design key concepts

Concept | Description |

|---|---|

Entity-relationship model | A model is a visual representation of the entities, primary keys (PKs), foreign keys (FKs), and relationships among tables. |

Database design stages | An industry-standard set of steps is used to create a full database application, from conceptual design to logical and physical design, all aimed at optimizing performance. |

Normalization in database schema design | Normalization reduces redundancy and ensures data integrity by organizing tables into smaller, efficient versions using normal forms (NFs): first normal form (1NF), second normal form (2NF), third normal form (3NF), Boyce-Codd normal form (BCNF), fourth normal form (4NF), and fifth normal form (5NF). |

Types of relational database schemas | Common relational schemas include the relational, star, and snowflake models. |

Database schema design best practices | -Secure data with encryption, access control, and views. -Use consistent naming for tables, columns, and keys. -Document schema structure and design choices. -Use constraints for data integrity. -Optimize data types and performance. -Implement CI/CD and schema version control. |

Monitoring and observability | Post-design monitoring helps identify inefficiencies and optimize performance, providing real-time insights to enhance system effectiveness. |

Entity-relationship model

The ER model functions as an essential design instrument for development, enabling users to create visual representations of data structures that clearly show their relationships. This leads to simpler database development through an organized framework for understanding data structures and their interactions.

The key concepts in the ER model are as follows:

- Entities represent essential, real-world objects important to the system, such as courses or students.

- Attributes define the characteristics and relationships of each entity. For instance, the students entity requires two attributes, name and email, to describe its characteristics.

- Relationships are used in the system to establish connections between two or more entities within its structure. The teachers entity maintains a relationship with the courses entity through which the teacher teaches a course.

- A PK uniquely identifies each instance of an entity to prevent duplicatation. The student_id field functions as the PK for students because it distinguishes each student from others.

- An FK functions as an attribute that enables two tables to connect with each other. The courses entity has a teacher_id field functioning as an FK to establish a connection with the teachers entity.

- Cardinality is defined by the number of occurrences of one entity that can be related to a single occurrence of another entity. There are three main ways entities can be associated (cardinality types): one-to-one, one-to-many, and many-to-many. For instance, we have a one-to-many relationship between teachers and courses, because one teacher can teach several courses, but each course is taught by only one teacher.

Database design stages

Database design comprises three essential stages: conceptual design, followed by logical design, then physical design. These three stages concentrate on separate aspects of creating efficient data structures, working together to maintain data integrity while reducing redundancy and maximizing performance.

Conceptual design

Database design begins with the conceptual design phase. It focuses on understanding business requirements and creating the overall database structure. Designers use this phase to discover essential system entities and their relationships and constraints before developing a high-level data model that excludes implementation details. The goal of this phase is to create a conceptual model that uses ER diagrams (ERDs) to depict fundamental data elements supporting organizational objectives.

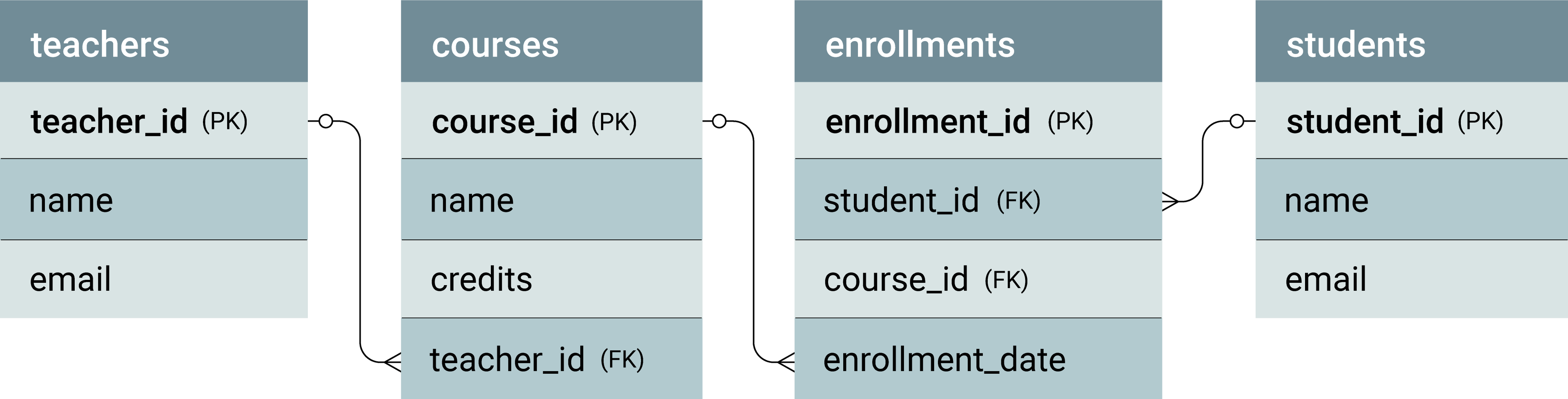

The diagram below illustrates a conceptual data model that provides a high-level overview of the key entities and their relationships.

Logical design

The logical design phase functions as a bridge between the conceptual and physical design stages in database development. The phase converts general models into detailed structures, focusing on data organization, linkages, and constraints. The process exists independently of a specific database management system (DBMS) and is commonly depicted through ERDs.

This phase maps entities to tables and relationships to FKs and defines tables, fields, views, and integrity constraints. The logical design clarifies how data entities relate to each other and provides a blueprint for the physical implementation of the database.

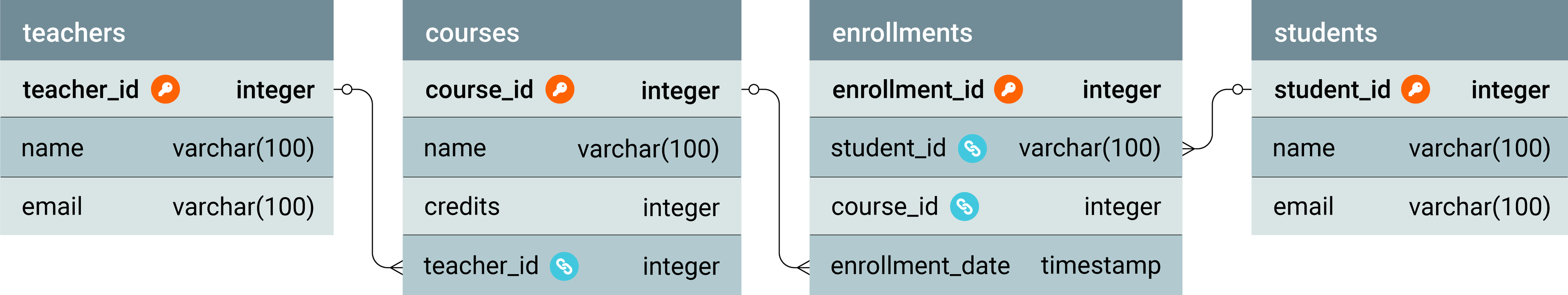

The following diagram illustrates the logical design, showcasing the relationships among teachers, courses, enrollments, and students tables.

Physical design

The physical design stage translates the abstract concepts from the logical data model into concrete details for a specific DBMS. It includes decisions about data types, indexing, storage structures, and performance optimization strategies tailored for the chosen DBMS. Additional elements, such as triggers, stored procedures, and platform-specific considerations, are also incorporated.

This stage focuses on ensuring the database is efficient and scalable while adhering to the limitations of the DBMS, such as naming conventions and reserved words. Physical design is the most technical phase, where the database is optimized for performance and efficient data access, much like organizing a library for easy retrieval.

The diagram below represents the physical design of our sample database, highlighting the structure of tables, PKs, FKs, and the data types for teachers, courses, enrollments, and students.

Normalization in database schema design

Database design uses normalization as a method to organize data structures, which minimizes both data duplication and dependencies. The process of dividing large tables into smaller, more efficient ones through relationship establishment prevents update, insert, and delete anomalies. These rules are followed through processes called NFs. They help maintain data consistency and make the database structure more efficient and reliable. Here, we focus on the NFs most relevant to our topic: 1NF, 2NF, 3NF, BCNF, 4NF, and 5NF.

First normal form

The data in 1NF is arranged in tables where each column contains atomic values. It means they cannot be divided into smaller parts. This form eliminates any repeating data groups, ensuring each attribute contains only a single value.

The following table illustrates unnormalized data in a circus environment, containing information about animals, their assigned tents, handlers, and the tricks they have learned. Each animal can perform several tricks, and trick data is stored across multiple repeating columns.

circus_info table

animal_nbr | animal_name | tent_nbr | tent_name | tent_location | handler_name | trick_nbr1 |

|---|---|---|---|---|---|---|

1 | Monkey | 101 | Big Top | North Wing | Clara | T01 |

2 | Lion | 102 | Center Ring | East Wing | Hank | T03 |

3 | Elephant | 103 | Jumbo Dome | South Wing | Mira | T05 |

trick_name1 | trick_learned_at1 | trick_skill_level1 | trick_nbr2 | trick_name2 | trick_learned_at2 | trick_skill_level2 |

|---|---|---|---|---|---|---|

Juggle | 2023-01-10 | Intermediate | T02 | Balance | 2023-02-15 | Beginner |

Roar on Clue | 2023-03-20 | Advanced | T04 | Leap Through Fire | 2023-03-25 | Expert |

Spray Water | 2023-04-05 | Expert | T06 | Headstand | 2023-04-10 | Intermediate |

This table contains repeating groups of columns (e.g., trick_nbr1 and trick_nbr2) and nonatomic values, violating 1NF. To resolve this, we decompose the table into two separate ones: circus_animals and tricks, where each trick becomes its own row.

circus_animals table

animal_nbr | animal_name | tent_nbr | tent_name | tent_location |

|---|---|---|---|---|

1 | Monkey | 101 | Big Top | North Wing |

2 | Lion | 102 | Center Ring | East Wing |

3 | Elephant | 103 | Jumbo Dome | South Wing |

tricks table

| animal_nbr | trick_nbr | trick_name | trick_learned_at | trick_skill_level |

|---|---|---|---|---|

1 | T01 | Juggle | 2023-01-10 | Intermediate |

1 | T02 | Balance | 2023-02-15 | Beginner |

2 | T03 | Roar on Cue | 2023-03-20 | Advanced |

2 | T04 | Leap Through Fire | 2023-03-25 | Expert |

3 | T05 | Spray Water | 2023-04-05 | Expert |

3 | T06 | Headstand | 2023-04-10 | Intermediate |

The tricks table is introduced to separate trick-related information, linking it to the circus_animals table through the trick_nbr field, thereby eliminating redundancy.

Second normal form

In 2NF, the table must already be in 1NF, and all nonkey attributes must be fully dependent on the PK. It addresses partial dependencies by splitting the data into separate tables, each with a PK uniquely identifying the records.

In the tricks table, trick_name is dependent only on trick_nbr, not the full (animal_nbr, trick_nbr) key. Therefore, we separate the static trick data into its own table.

tricks table

trick_nbr | trick_name |

|---|---|

T01 | Juggle |

T02 | Balance |

T03 | Roar on Cue |

T04 | Leap Through Fire |

T05 | Spray Water |

T06 | Headstand |

animal_tricks table

| animal_nbr | trick_nbr | trick_learned_at | trick_skill_level |

|---|---|---|---|

1 | T01 | 2023-01-10 | Intermediate |

1 | T02 | 2023-02-15 | Beginner |

2 | T03 | 2023-03-20 | Advanced |

2 | T04 | 2023-03-25 | Expert |

3 | T05 | 2023-04-05 | Expert |

3 | T06 | 2023-04-10 | Intermediate |

Third normal form

In 3NF, the table is in 2NF, and no nonkey attribute is transitively dependent on the PK. This form removes transitive dependencies (fields dependent on the PK through another nonkey attribute) by breaking the table into multiple tables based on functional dependencies.

In the circus_animals table, tent_name and tent_location depend on tent_nbr, not directly on animal_nbr, which introduces a transitive dependency. We remove this by placing tent-related information on a separate table. The outcome after applying 3NF is presented below.

circus_animals table

animal_nbr | animal_name | tent_nbr |

|---|---|---|

1 | Monkey | 101 |

2 | Lion | 102 |

3 | Elephant | 103 |

locations table

tent_nbr | tent_name | tent_location |

|---|---|---|

101 | Big Top | North Wing |

102 | Center Ring | East Wing |

103 | Jumbo Dome | South Wing |

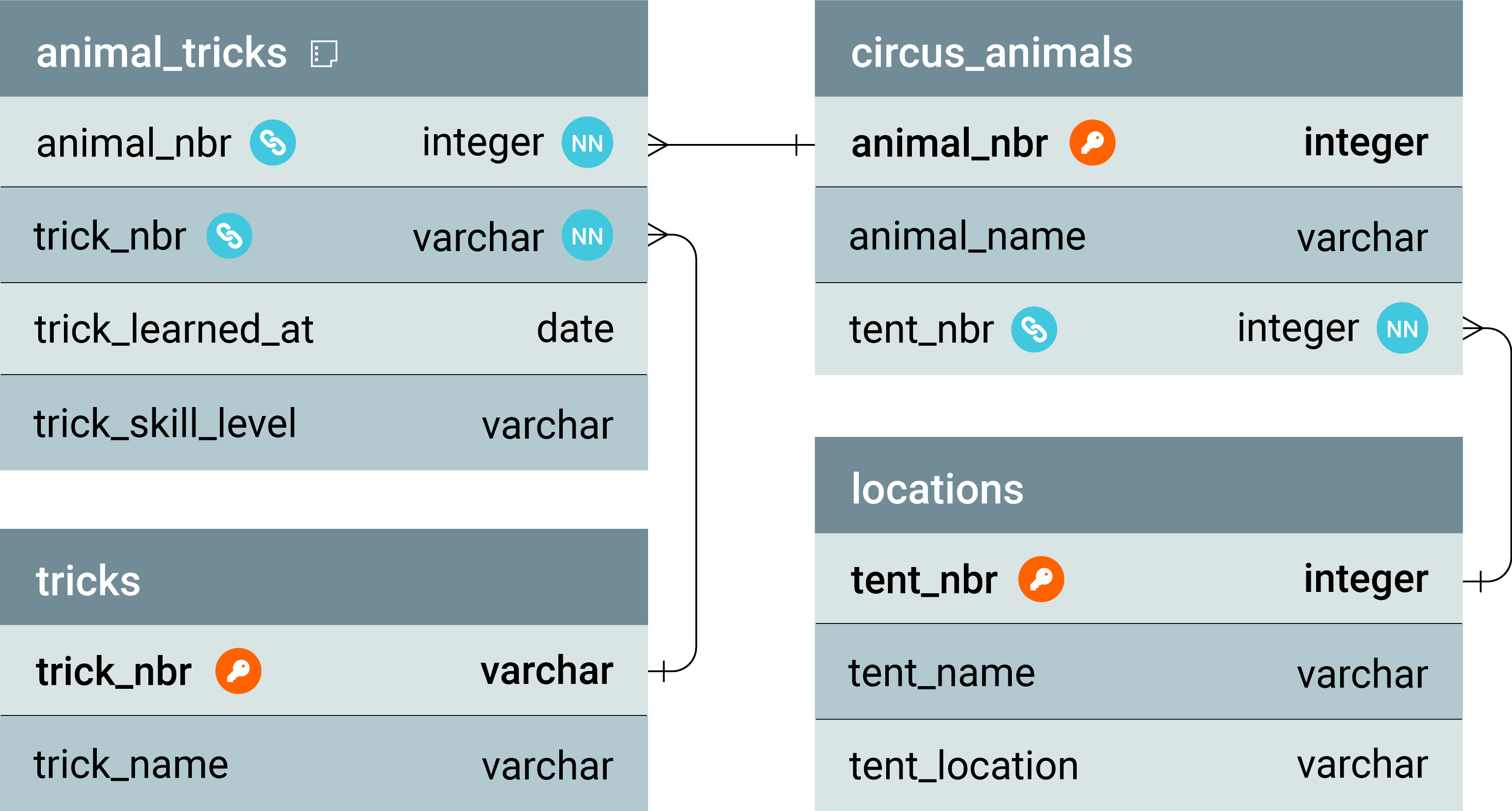

This is the ERD representing the relationships between animal_tricks, circus_animals, tricks, and locations in the organization.

Boyce-Codd normal form

BCNF is an extended version of 3NF, which makes sure every determinant (an attribute that functionally determines another attribute) is a candidate key. While 3NF is generally effective in organizing and reducing redundancy, it may not fully eliminate all of it. Specifically, redundancy can still occur when a noncandidate key determines another attribute in a way that violates the candidate key rule. BCNF solves this problem, as it is a stricter version of 3NF.

For example, in a scenario where animals are assigned to tents and each tent has a single handler, but multiple animals can be housed in the same tent, a new table is created.

tent_handler table

tent_nbr | tent_name | tent_location | handler_name |

|---|---|---|---|

101 | Big Top | North Wing | Clara |

102 | Center Ring | East Wing | Hank |

103 | Jumbo Dome | South Wing | Mira |

Here, tent_nbr is the determinant of handler_name, and since tent_nbr is a candidate key in the tents table, this satisfies BCNF. This eliminates redundancy by ensuring each determinant uniquely defines the data.

Fourth normal form

In 4NF, multi-valued dependencies are eliminated by ensuring independent attributes are stored separately. If multiple independent values exist for a key, they should be split into different tables.

Consider a table where an employee has multiple skills and certifications, leading to redundancy. If an animal can have multiple toys and trainers, and these are independent of each other, they should be stored in separate tables.

animal_toys table

animal_nbr | toy |

|---|---|

1 | Ball |

1 | Rope |

2 | Fire Ring |

3 | Water Bucket |

3 | Hat |

animal_trainers table

animal_nbr | trainer_name |

|---|---|

1 | Alice |

1 | Ben |

2 | Hank |

3 | Mira |

3 | Dave |

Fifth normal form

With 5NF, data is decomposed into smaller tables only when necessary to remove redundancy, thereby preventing the occurrence of lossless joins. This form is applied when there are several complex many-to-many relationships that can be split further.

For example, if animals perform acts at various venues, we can store them in a single table—but this introduces redundancy.

animal_acts_venues table

animal_nbr | act_id | venue_id |

|---|---|---|

1 | A01 | V01 |

1 | A02 | V02 |

2 | A03 | V02 |

3 | A04 | V01 |

3 | A05 | V03 |

Decomposing it into separate tables produces the following two.

animal_acts table

animal_nbr | act_id |

|---|---|

1 | A01 |

1 | A02 |

2 | A03 |

3 | A04 |

3 | A05 |

acts_venues table

act_id | venue_id |

|---|---|

A01 | V01 |

A02 | V02 |

A03 | V02 |

A04 | V01 |

A05 | V03 |

This decomposition removes redundancy and maintains data consistency without introducing anomalies.

Types of relational database schemas

Database design uses schemas to establish how data should be organized and structured in a database system. Different schema types exist to meet the particular requirements of use cases and performance optimization needs. This section examines three fundamental database schema types: relational schemas, star schemas, and snowflake schemas.

Relational schemas

A relational schema defines the table structure of a database through relations, which organize data attributes with their respective domains. The schema defines integrity constraints through PKs for record uniqueness, FKs for table relationships, and constraints such as NOT NULL and UNIQUE for data validation. The blueprint defines table structure and relationships but does not contain actual database data.

Developers use relational schemas to structure databases efficiently, minimizing redundancy and ensuring data integrity. They are commonly used in operational databases to store structured data in tables with established relationships.

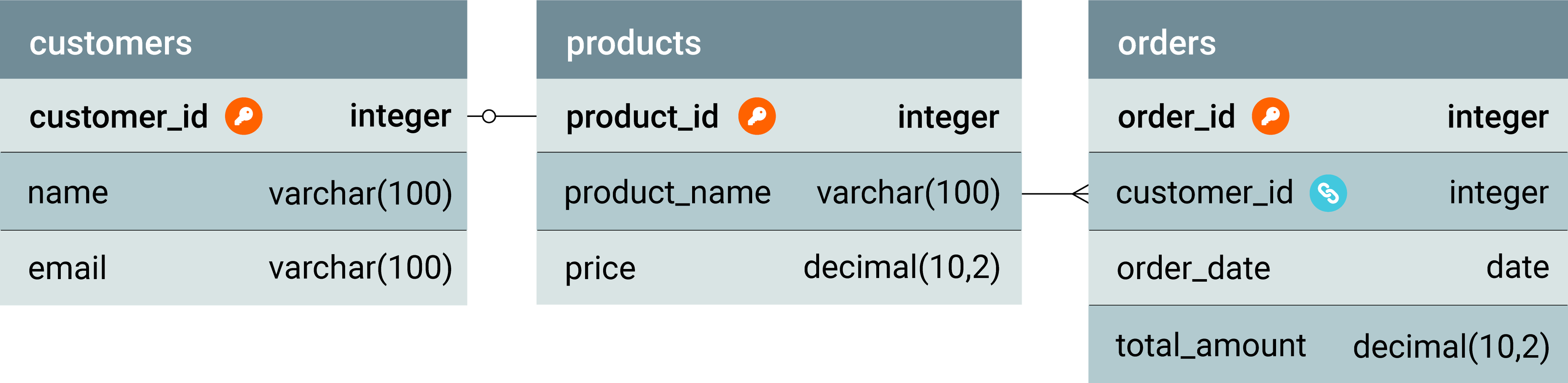

In the following example of a relational schema represented through SQL code, tables for customers, orders, and products have been created, with relationships established through FKs. The code is followed by an ERD illustrating the relationships among customers, orders, and products.

CREATE TABLE customers (

customer_id INTPRIMARY KEY

name VARCHAR(100),

email VARCHAR(100)

,

);

CREATE TABLE products (

product_id INT PRIMARY KEY,

product_name VARCHAR(100),

price DECIMAL(10, 2)

);

CREATE TABLE Orders (

order_id INT PRIMARY KEY,

customer_id INT,

order_date DATE,

total_amount DECIMAL(10, 2),

FOREIGN KEY (customer_id) REFERENCES customers(customer_id)

);

Star schemas

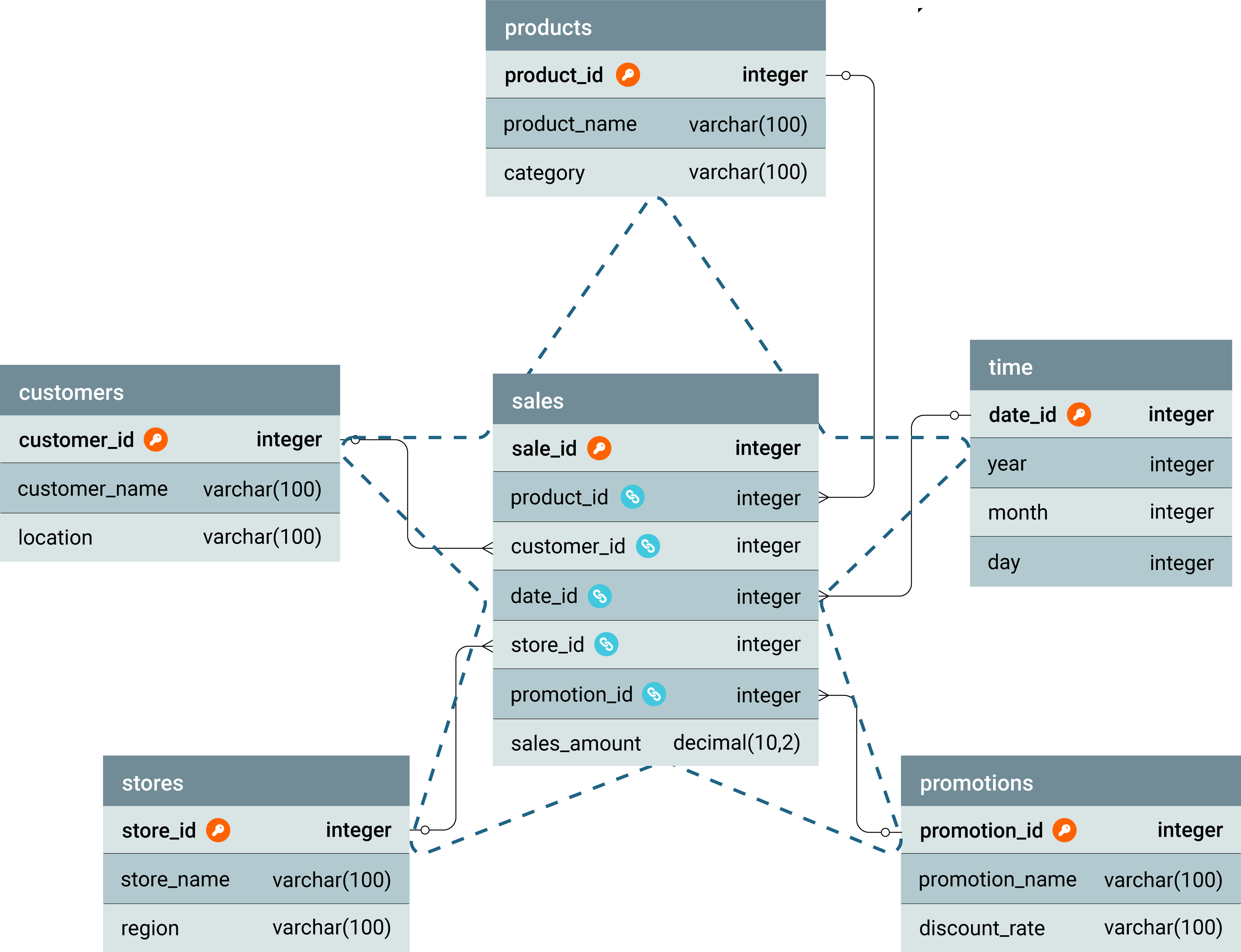

A star schema is a data modeling method commonly applied in data warehousing to structure data in a straightforward and easy-to-understand manner. It includes a central fact table holding numerical data, with surrounding dimension tables detailing the characteristics of these data points. The fact table is connected to the dimension tables via FKs, creating a star-shaped pattern when visualized. This design helps enhance the efficiency of querying and analysis by distinguishing between descriptive attributes and numerical data.

The following code creates a star schema for sales data, where the fact table (sales) contains the numerical measure (sales_amount) and the dimension tables provide descriptive attributes (such as products, customers, time, stores, and promotions information). These tables are interconnected using FKs to enable efficient querying and analysis.

CREATE TABLE Orders (

sale_id INT PRIMARY KEY,

product_id INT,

customer_id INT,

date_id INT,

sales_amount DECIMAL(10, 2),

FOREIGN KEY (product_id) REFERENCES products(product_id),

FOREIGN KEY (customer_id) REFERENCES customers(customer_id),

FOREIGN KEY (date_id) REFERENCES time(date_id),

FOREIGN KEY (store_id) REFERENCES stores(store_id),

FOREIGN KEY (promotion_id) REFERENCES promotions(promotion_id)

);

CREATE TABLE products (

product_id INT PRIMARY KEY,

product_name VARCHAR(100),

category VARCHAR(100)

);

CREATE TABLE customers (

customer_id INT PRIMARY KEY,

customer_name VARCHAR(100),

location VARCHAR(100)

);

CREATE TABLE time (

date_id INT PRIMARY KEY,

year INT,

month INT,

day INT

);

CREATE TABLE stores (

store_id INT PRIMARY KEY,

store_name VARCHAR(100),

region VARCHAR(100)

);

CREATE TABLE promotions (

promotion_id INT PRIMARY KEY,

promotion_name VARCHAR(100),

discount_rate DECIMAL(5,2)

);

Snowflake schemas

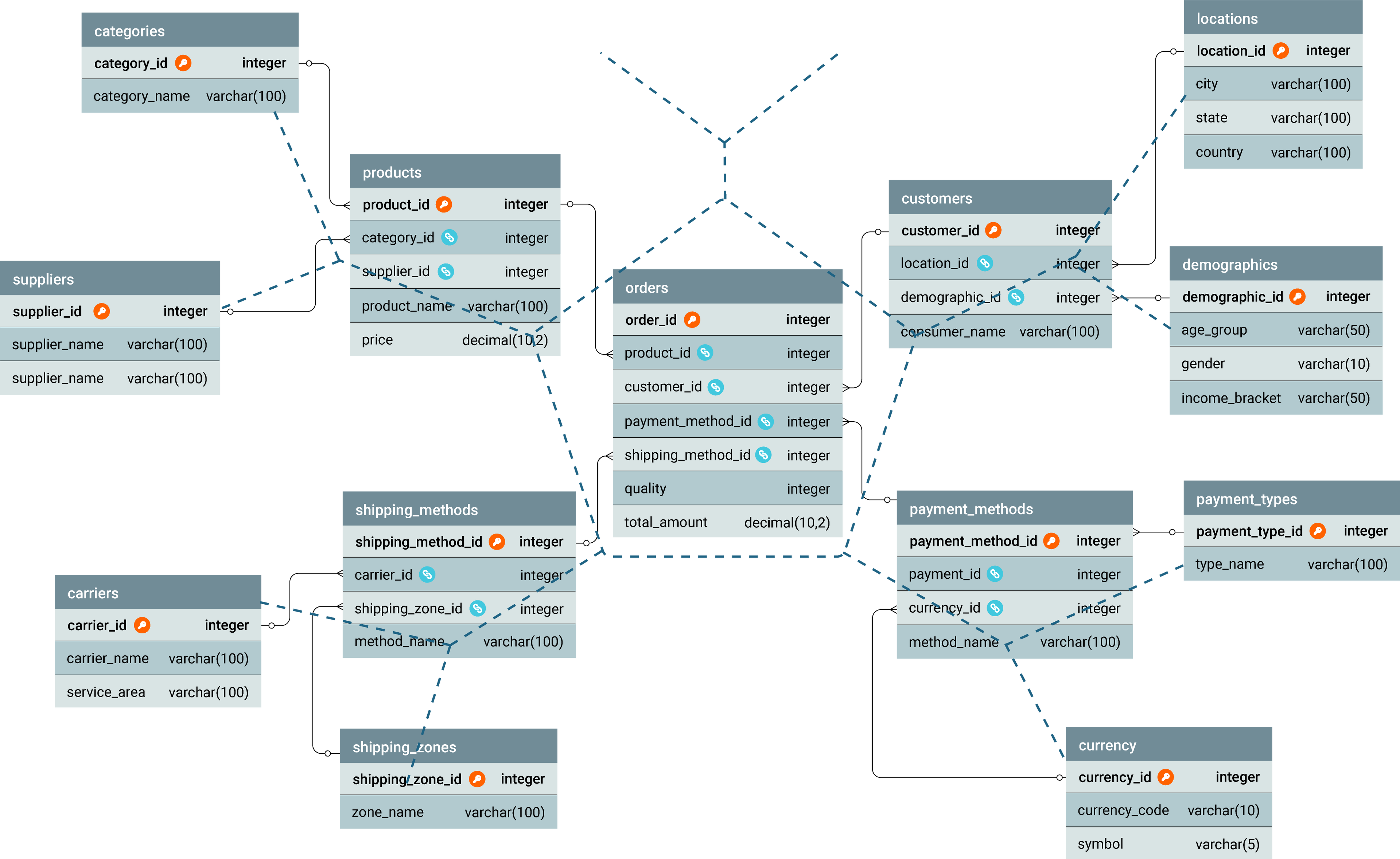

Data warehousing uses the snowflake schema as a data modeling technique to enhance the performance of large data queries. The snowflake schema extends the star schema design by connecting the central fact table to multiple dimension tables. The snowflake schema differs from the star schema because it applies normalization to dimension tables through subtable segmentation, which reduces data duplication. The dimension tables develop complex, multi-level relationships, resulting in a structure resembling a snowflake.

The schema achieves data consistency and reduces storage needs through dimension table normalization, which eliminates redundant data. The analytical processing benefits from this approach because it enables efficient propagation of dimension attribute updates and changes, which reduces inconsistencies. Normalized structures improve performance in large data environments by reducing data scan volume and enabling advanced indexing methods.

The following example illustrates a snowflake schema, where the sales fact table is connected to normalized dimension tables, including products, customers, payment_methods, and shipping_methods. These dimensions are further decomposed—for instance, products are linked to product_categories and suppliers, while customers are connected to locations and demographics. This normalization reduces redundancy, improves data integrity, and supports more flexible analytical queries.

CREATE TABLE sales (

sale_id INT PRIMARY KEY,

product_id INT,

customer_id INT,

payment_method_id INT,

shipping_method_id INT,

quantity INT,

total_amount DECIMAL(10, 2),

FOREIGN KEY (product_id) REFERENCES products(product_id),

FOREIGN KEY (customer_id) REFERENCES customers(customer_id),

FOREIGN KEY (payment_method_id)REFERENCES payment_methods(payment_method_id),

FOREIGN KEY (shipping_method_id)REFERENCES shipping_methods(shipping_method_id),

);

CREATE TABLE products (

product_id INT PRIMARY KEY,

product_category_id INT,

supplier_id INT,

product_name VARCHAR(100),

price DECIMAL(10, 2),

FOREIGN KEY (product_category_id) REFERENCES product_categories(product_category_id),

FOREIGN KEY (supplier_id) REFERENCES suppliers(supplier_id)

);

CREATE TABLE product_categories (

product_category_id INT PRIMARY KEY,

category_name VARCHAR(100)

);

CREATE TABLE suppliers (

supplier_id INT PRIMARY KEY,

supplier_name VARCHAR(100),

contact_email VARCHAR(100)

);

CREATE TABLE customers (

customer_id INT PRIMARY KEY,

location_id INT,

demographic_id INT,

customer_name VARCHAR(100),

FOREIGN KEY (location_id) REFERENCES locations(location_id),

FOREIGN KEY (demographic_id) REFERENCES demographics(demographic_id)

);

CREATE TABLE locations (

location_id INT PRIMARY KEY,

city VARCHAR(100),

state VARCHAR(100),

country VARCHAR(100)

);

CREATE TABLE demographics (

demographic_id INT PRIMARY KEY,

age_group VARCHAR(50),

gender VARCHAR(10),

income_bracket VARCHAR(50)

);

CREATE TABLE payment_methods (

payment_method_id INT PRIMARY KEY,

payment_type_id INT,

currency_id INT,

method_name VARCHAR(100),

FOREIGN KEY (payment_type_id) REFERENCES payment_types(payment_type_id),

FOREIGN KEY (currency_id) REFERENCES currency(currency_id)

);

CREATE TABLE payment_types (

payment_type_id INT PRIMARY KEY,

type_name VARCHAR(100)

);

CREATE TABLE currency (

currency_id INT PRIMARY KEY,

currency_code VARCHAR(10),

symbol VARCHAR(5)

);

CREATE TABLE shipping_methods (

shipping_method_id INT PRIMARY KEY,

carrier_id INT,

shipping_zone_id INT,

method_name VARCHAR(100),

FOREIGN KEY (carrier_id) REFERENCES carriers(carrier_id),

FOREIGN KEY (shipping_zone_id) REFERENCES shipping_zones(shipping_zone_id)

);

CREATE TABLE carriers (

carrier_id INT PRIMARY KEY,

carrier_name VARCHAR(100),

service_area VARCHAR(100)

);

CREATE TABLE shipping_zones (

shipping_zone_id INT PRIMARY KEY,

zone_name VARCHAR(100),

region_description VARCHAR(200)

);

Database schema design best practices

Schema integrity and security

Define constraints to maintain data integrity and ensure the accuracy, consistency, and reliability of the data stored in the database. Use constraints to prevent the insertion of invalid or inconsistent data, enforce rules that safeguard relationships between tables, and maintain adherence to business or data requirements.

Here are six key types of constraints that help maintain data integrity:

- PK: Ensures each row is uniquely identifiable

- FK: Enforces relationships between tables

- Unique: Prevents duplicate values in a column

- Default values: Assigns a default value if none is provided

- Nullability: Specifies whether a column can have null values

- Check types: Enforces valid data conditions in a column

Here is an example SQL for creating the orders table, incorporating these constraints:

CREATE TABLE orders (

-- Primary Key: Makes sure each order has a unique identifier

order_id INT PRIMARY KEY,

-- Foreign Key: Links to the Customers table (referential integrity)

customer_id INT,

-- Nullability: Ensures every order has a valid date (cannot be NULL)

order_date DATE NOT NULL,

-- Default Value: Automatically sets 'Pending' if not specified

order_status VARCHAR(20) DEFAULT 'Pending',

-- Data type: Stores the total value of the order as a decimal

order_total DECIMAL(10,2),

-- Unique: Ensures order_number has unique values across all rows

order_number VARCHAR(50) UNIQUE,

-- Foreign Key: Ensures valid customer reference

FOREIGN KEY (customer_id) REFERENCES customers(customer_id),

-- Check Constraint: Ensures the order total is always greater than 0

CHECK (order_total > 0)

);

Use encryption, data masking, and access control as part of a security strategy to protect sensitive data and maintain privacy:

- Encrypt sensitive data using industry-standard encryption methods, such as the Advanced Encryption Standard (AES) algorithm, to ensure data privacy.

SELECT AES_ENCRYPT(bank_card_number, 'encryption_key') AS encrypted_card_number

FROM Customers

WHERE customer_id = 1;

- Use data masking to display only the necessary portions of sensitive data, such as showing the last four digits of a bank card number.

SELECT CONCAT('XXXX-XXXX-XXXX-', RIGHT(bank_card_number, 4)) AS masked_card_number

FROM Customers;

- Grant users access to intermediate objects, such as views and stored procedures, instead of granting direct access to the base tables (see example below). This reduces the risk of unauthorized data access while still allowing users to perform necessary operations.

CREATE VIEW Order_summary AS

SELECT order_id, customer_id, order_total

FROM Orders

WHERE order_status = 'Pending';

-- Grant SELECT access on Order_summary view to reporting_user

GRANT SELECT ON Order_summary TO reporting_user;

Naming conventions and schema consistency

Database design becomes simpler when you follow clear naming conventions, which helps to ensure consistency. The following practices will help you improve readability, reduce confusion, and enhance long-term maintainability:

- Use consistent, descriptive names for tables and columns (avoid abbreviations). For example, customers (not cust), first_name (not f_name).

- Name PKs and FKs clearly, using the related table’s name followed by _id. For example, customer_id in orders (FK referencing customers).

- For many-to-many relationships, create well-structured junction tables with combined names. For example, product_orders with product_id and order_id columns.

- Use underscores or camel case instead of special characters or spaces. For example, order_status or OrderStatus (not Order Status or order@status).

- The schema should maintain consistency to improve both readability and maintenance ease. For instance, use product_name and the same data type in all relevant tables (e.g., orders and products).

Database schema documentation

Document the database schema thoroughly to ensure long-term clarity. Proper documentation helps new developers and database administrators quickly understand the design, making modifications and expansions easier.

Document the structure of tables, ERs, and design choices, such as constraints or data types. Include sample queries and use cases to demonstrate how to interact with the database, providing context and best practices for efficient querying.

Optimizing data types and performance

Database performance, together with storage efficiency and data accuracy, can be improved through proper data type optimization. The following best practices should be implemented:

- Select appropriate column data types to optimize storage, improve performance, ensure accuracy, and prevent future issues such as data truncation or inefficient queries.

- Avoid using data types with a MAX size, such as VARCHAR(MAX), to prevent performance issues. These types can lead to excessive memory allocation and reduce query efficiency due to their handling in tempdb .

- Avoid the use of GUID data types for clustered indexes. They introduce fragmentation and increased storage requirements, which can negatively impact query performance and index maintenance.

- Try to use data compression methods. They help reduce storage needs and enhance I/O performance, especially in large-scale environments such as data warehouses. Row and page compression reduce disk I/O, lower memory usage, improve query efficiency, and minimize resource contention, optimizing performance and storage costs.

Materialized views and refresh strategies

Use materialized views (also known as indexed views in some cases) to improve query performance by storing precomputed results. Implement strategies for periodically refreshing materialized views based on data changes to ensure they remain accurate and perform efficiently.

Transaction management and performance

Avoid complex transactions. They can create locking and contention issues, leading to performance bottlenecks and longer wait times for other operations.

Break down lengthy transactions to reduce locking issues, as this will improve performance. Use explicitly declared transactions with the BEGIN … COMMIT syntax to enhance locking efficiency and improve throughput for end users.

Schema management with DevOps

Implement DevOps practices, including CI/CD and schema versioning, to handle database objects and ensure effective source code management:

- Utilize versioning tools, such as Azure DevOps®, to monitor schema changes and ensure consistency between development and production environments.

- Design backward-compatible changes to allow both old and new schema versions to work together seamlessly.

- Use incremental migrations for small, low-risk updates, and automate data migration scripts to avoid downtime or data loss.

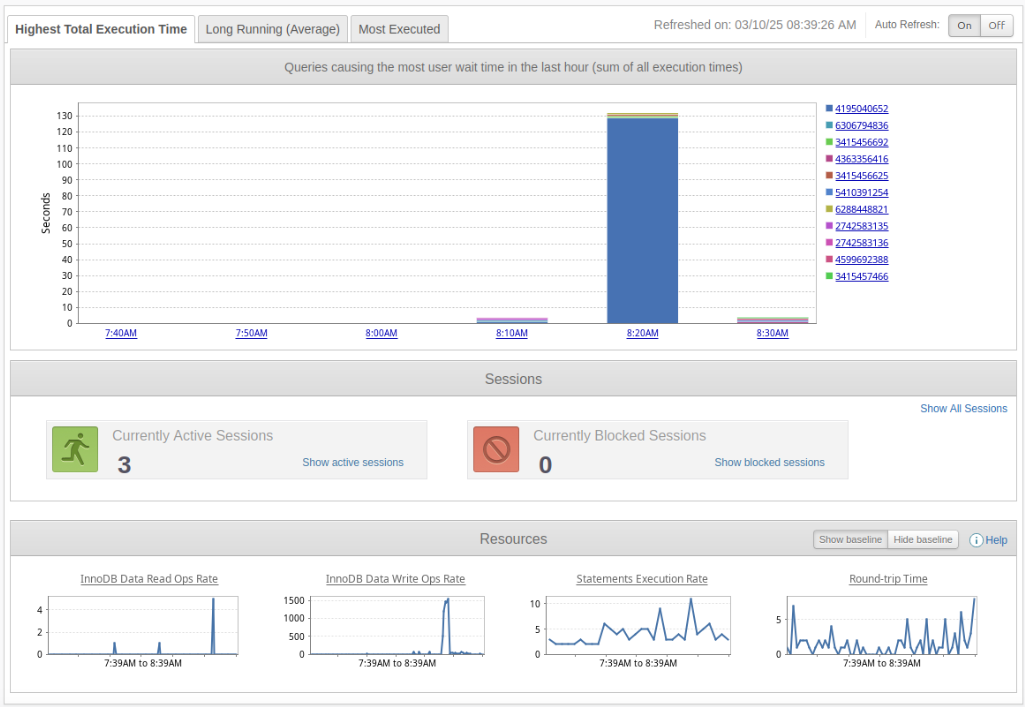

Monitoring and observability

Database schema quality and performance require post-design monitoring to ensure proper maintenance. Continuous monitoring of essential performance metrics helps identify potential bottlenecks, inefficiencies, and areas for optimization. Observability tools provide detailed performance insights into schema behavior under various workloads, proactively improving system efficiency.

SolarWinds delivers complete monitoring solutions, which provide immediate access to database performance metrics. Database administrators can use SolarWinds® Database Performance Analyzer to rapidly identify and resolve problems with slow queries, resource contention, and inefficient indexing. The early detection of performance bottlenecks enables prompt optimization, which maintains database schema performance throughout system development. The obtained insights enable better decision-making for future schema enhancements, leading to improved long-term database stability and scalability.

Last thoughts

A well-structured database schema is crucial for efficient data management and performance. Organizations can create scalable, maintainable systems by following best practices, such as clear naming conventions, proper data types, and effective versioning and migration strategies.

Tools such as SolarWinds Database Performance Analyzer enable continuous performance monitoring, helping to identify issues early and optimize performance. Regular monitoring and data-driven schema decisions help ensure the database supports business growth while minimizing risks and ensuring long-term success.