The Key Concepts of Database Optimization

Database optimization is the process of improving the performance, efficiency, and management of a database system. Quick retrieval and update times remain key priorities for engineering teams. The four key metrics data professionals use to measure the performance and reliability of their databases are:

- Latency

- Traffic

- Errors

- Saturation

When databases are not managed and scaled correctly, they can become slow due to various reasons, such as:

- Data volumes larger than their intended capacity

- Poor schema design

- Inefficient queries

- Inadequate performance optimization measures

Optimization of database management should be considered from the early stages of planning and throughout the life cycle of the database and the applications it supports.

In this article, we’ll review key relational database optimization techniques and provide practical examples to help ground the concepts into actionable recommendations.

Summary of key database optimization concepts

The table below summarizes the seven high-level database optimization concepts this article will explore in detail.

Concept | Description |

Performance metrics | Quantifiable measurements of database performance |

Indexing | Using indexes to improve query retrieval times |

Query optimization | Analyzing and improving the efficiency of database queries |

Normalization | Enforcing consistency in a database system to improve efficiency |

Caching | Improving response times by temporarily storing frequently accessed data |

Hardware and configuration | Upgrading the physical aspects of a system to improve performance |

Monitoring | Using monitoring tools to periodically analyze the efficiency of a database |

Performance metrics for database optimization

Implementing effective measurement criteria is the first step toward improving a database system's performance. Engineering teams monitor specific metrics throughout a database system's lifecycle to quickly identify potential bottlenecks or inefficiencies. When investigating performance issues or failures in a database, these metrics provide clues, giving engineers a better idea of the root cause.

The sections below explain the four golden signals of database reliability, which directly affect performance.

Latency

Latency is the time interval between executing a query and achieving its result. High latencies severely impact the user experience on customer-facing applications, and they tend to be one of the primary factors influencing consumer impressions. High latencies may indicate:

- Poor database optimization

- High network response times when querying remote servers

- Complex or inefficient queries

For example, let’s consider a database containing three tables: customers (100,000 rows), orders (2,000,000 rows), and products (500,000 rows).

The following PostgreSQL® query to retrieve a specific customer’s order details, on average, took about 5 seconds to complete:

SELECT o.order_id, o.order_date, p.product_name, c.customer_name

FROM orders o

JOIN products p ON o.product_id = p.product_id

JOIN customers c ON o.customer_id = c.customer_id

WHERE c.customer_id = 10221

ORDER BY o.order_date DESC;

However, when adding indexes, the response time for the same query was brought down to approximately 1 second, positively impacting user experience:

CREATE INDEX idx_orders_customer ON orders (customer_id);

CREATE INDEX idx_products_product_id ON products (product_id);

The SQL keywords EXPLAIN and ANALYZE can help engineers understand where the bottlenecks in their code are.

Traffic

Traffic refers to the volume of requests a database receives. Monitoring traffic 24/7 helps ensure the database is configured and scaled appropriately to handle demand. Traffic is typically quantified by metrics such as transactions per second (TPS) or queries per second (QPS). High levels of traffic can inflict stress on a database and expose bottlenecks. If a database system is not equipped to handle high traffic, it could result in severe performance degradation.

Social media and e-commerce servers are examples of applications that typically have higher traffic. Database monitoring platforms usually include traffic measuring tools to provide engineers with useful insights.

Some optimization techniques to prevent performance degradation due to high traffic include:

- Implementing batch processing to reduce the number of individual transactions to the database

- Using connection pooling to reduce the large connection overhead; for example, PgBouncer efficiently manages connections to a PostgreSQL database

- Using caching strategies to avoid unnecessarily requesting frequently used data

- Upgrading database hardware to boost TPS capability

Errors

Errors indicate the number of requests that failed or encountered unexpected responses. High error rates can indicate invalid queries, concurrency issues, or application bugs.

Some ways to optimize database systems to minimize high error rates include:

- Using circuit breakers to prevent multiple downstream failures

- Involving efficient retry mechanisms to combat transient errors, without overloading the system

- Including frequent error logging to be able to track and fix errors quickly

Saturation/resource utilization

Saturation measures the proximity of a database system to approaching its resource limitations. High CPU utilization is one of the main culprits for slowing down a database system, from a resource perspective.

CPU utilization indicates how efficiently the database system uses CPU resources. It is usually measured in percentages. The optimal CPU utilization exists at approximately 50% – 70%. Data professionals should expect performance degradation and higher response times as the utilization percentage rises, indicating the system is under heavy load. Standard performance improvement methods, such as indexing, scaling, and sharding (a technique used to distribute workloads across multiple servers), usually improve CPU utilization.

Other common resource utilization problems arise from issues with memory, file I/O speed, storage capacity, and network bandwidth.

Database monitoring tools simplify metrics collection

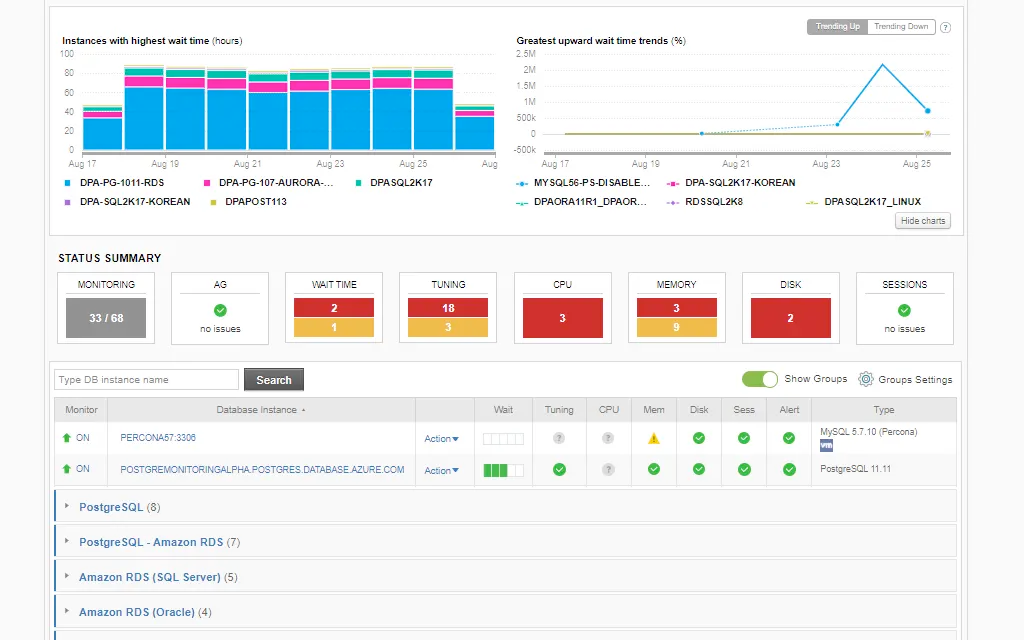

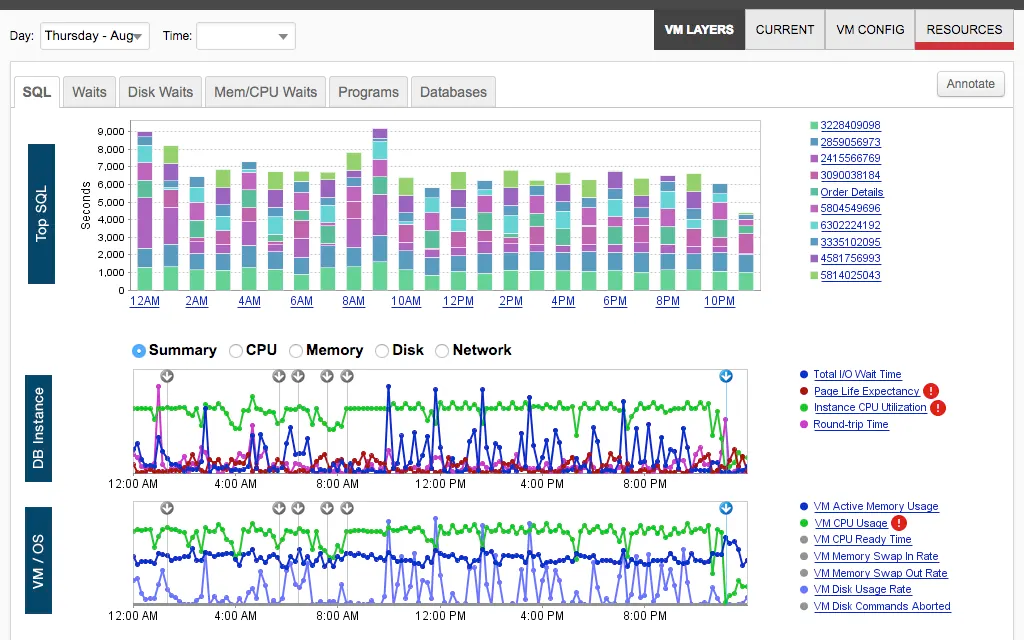

Monitoring performance metrics becomes more critical to engineering teams as databases grow in usage and volume. Implementing a database monitoring tool provides valuable metric insights without requiring manual logging or configuration changes. The image below is a screenshot from SolarWinds® Database Performance Analyzer (DPA), showing how one can easily monitor the performance of multiple databases through a single platform.

Indexing for database optimization

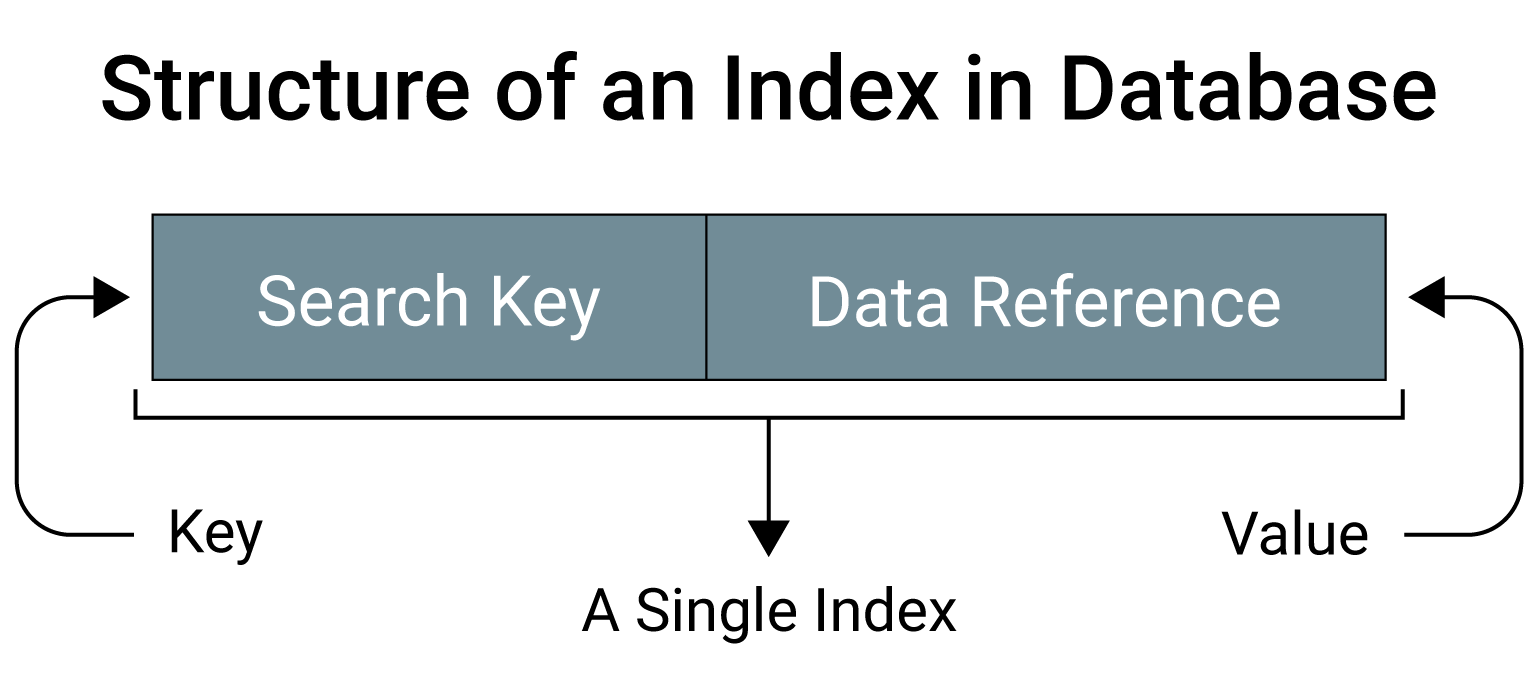

Indexes are data structures commonly added to database tables to improve retrieval speeds. These structures help database engines quickly locate specific data without having to scan the entire table. Indexes are created on one or more table columns. An index node usually comprises two main components: a search key and a data reference pointer.

The structure of a database index . (source)

The search key promotes quick retrievals, and the associated data reference points the engine to the required piece of data. The search keys are often kept in sorted order to further improve speeds; however, this is not always the case (e.g., hash indexes).

While indexes drastically reduce retrieval times, they do come at a cost. Adding indexes to a database creates the storage overhead of utilizing more disk space. Also, while read costs decrease, write costs increase. This is because when you insert, update, or delete from a table, the associated indexes must also be updated.

You can use different index types based on the kind of retrieval that needs to take place. Each type of index comes with its own benefits. The following are two popular indexes used to optimize database tables.

B-tree indexes

The B-tree index is a self-balancing organization of nodes, wherein each node contains a key and pointers to its child nodes. This index is arguably the most widely used in database engineering. It is more geared toward single-value or range-value queries. This makes finding a particular value in each range fairly simple. Relational database management systems (RDBMSs) typically use B-tree indexes when using the CREATE INDEX keywords, so no extra declaration is required.

As an example, let’s assume we have created a products table using PostgreSQL for an e-commerce website:

CREATE TABLE Products (

ProductID SERIAL PRIMARY KEY,

ProductName VARCHAR(100),

CategoryName VARCHAR(50),

Price DECIMAL(10, 2),

CreatedAt TIMESTAMP DEFAULT CURRENT_TIMESTAMP

);

Suppose the business analytics team were frequently filtering products based on category and price for sales research purposes. It would be a sensible decision to create an index on these two fields:

CREATE INDEX idx_category_price ON Products (CategoryName, Price);

A range-based query, like the following, would now have a faster response time:

SELECT * FROM ProductsWHERE CategoryName = 'Electronics' AND Price BETWEEN 15 AND 30;

Hash indexes

Hash indexes work on the concept of hash tables. They’re found on PostgreSQL, Oracle®, and MySQL® (only when using the InnoDB® engine) databases. They hold key-index pairs that are helpful in the case of exact-match queries. Hash indexes have two major components:

- Hash functions: They convert a data-associated key to an index. In the case of database indexing, we usually pick a hash function with a relatively low collision rate, as we ideally do not want two pieces of data to have the same index position.

- Hashing schemes: A hashing scheme is an additional function that provides accurate data in the case of a collision.

Hash indexes are generally only useful in the case of equality matches (==) and should not be used in the case of range queries. Those are best left for B-tree lookups. This is because, in hash indexes, there is no ordering of keys, and a range search would imply searching every row of the table.

Let’s once again look at our previous example of an e-commerce website’s PostgreSQL product table:

CREATE TABLE Products (

ProductID SERIAL PRIMARY KEY,

ProductName VARCHAR(100),

CategoryName VARCHAR(50),

Price DECIMAL(10, 2),

CreatedAt TIMESTAMP DEFAULT CURRENT_TIMESTAMP

);

Let’s assume that this time, the business analytics team is frequently searching for a specific product daily. This would be a good time to add a hash index to our table:

CREATE INDEX idx_product_name_hash ON Products USING HASH (ProductName);

Note the use of the USING HASH keywords to tell the RDBMS that this index needs to be hashed.

Now, a search for a specific product, such as “cup,” would be faster:

SELECT * FROM Products

WHERE ProductName = 'cup';

When working with hash indexes, users should check if they are available within the DBMS they are using. They are present in PostgreSQL, Oracle, and MySQL (only InnoDB).

Unique Considerations for Hashes in Microsoft SQL Server

Microsoft SQL Server® handles hash indexing in a completely unique way. Instead of requiring the manual creation of a hash index, as the other relational databases do, SQL Server makes a decision during query processing as to whether a hashing algorithm offers the best performance. There are three typical conditions for using a hashing algorithm:

- During a join operation

- When the two tables referenced in the join are considerably large, typically many millions of rows

- When the existing keys on the tables are unsorted, nonindexed, or otherwise suboptimal

Other factors can also influence the query optimizer’s use of a hash join algorithm, including query complexity, data size, available memory, and available CPUs to process the query. Once the query optimizer chooses a hash join, SQL Server constructs the hash in memory, assuming there is enough available memory for the process, or even “spills” the hash to a structure in tempDB. In effect, it creates a hash index ad hoc. However, there is no manual method to create a hash index in advance of the query execution process.

Database query optimization

Query optimization is one of the most effective ways to improve the performance of a database. It involves examining and revising a query to ensure it interacts efficiently with a database system. This often entails creating more than one version of a given SELECT statement and then comparing them to determine which one performs the best. The primary goal of optimizing a query is to reduce the total response time by minimizing the processing time required by the database engine to execute the query. You can also rewrite queries to reduce CPU utilization, memory consumption, user concurrency, or file I/O, which can significantly impact performance.

Apart from reducing execution times, in the case of remote or cloud databases, efficient queries can also bring down transactional costs since cloud users pay according to the resources they consume. For example, a well-optimized query that scans only part of a table involves fewer computational resources, leading to lower costs, as opposed to a query that unnecessarily scans an entire table.

Query execution plans

The first step in optimizing a query is to understand its current efficiency state. Query execution plans are maps explaining the sequential operators used by a DBMS to execute a particular query. They break down a query into incremental steps, providing data professionals with insights into its efficiency. Most RDBMSs allow users to obtain query execution plans easily.

Let’s look at an example of utilizing a query execution plan in PostgreSQL to optimize a query:

EXPLAIN ANALYZE

SELECT clients.full_name, purchases.purchase_date, items.item_name

FROM clients

JOIN purchases ON clients.id = purchases.client_id

JOIN purchase_items ON purchases.id = purchase_items.purchase_id

JOIN items ON purchase_items.item_id = items.id

WHERE clients.region = 'North America';

The above query retrieves a set of clients from a database based on their region and purchases. The EXPLAIN ANALYZE keywords before the query provide us with the execution plan while executing the query.

The output would be as follows:

QUERY PLAN

----------------------------------------------------------------------------------------------------

Nested Loop (cost=1.50..9.00 rows=3 width=65) (actual time=0.050..0.070 rows=3 loops=1)

-> Seq Scan on clients (cost=0.00..4.50 rows=1 width=29) (actual time=0.020..0.025 rows=1 loops=1)

Filter: (region = 'North America'::text)

-> Nested Loop (cost=1.00..4.50 rows=3 width=36) (actual time=0.020..0.045 rows=3 loops=1)

-> Seq Scan on purchases (cost=0.00..2.50 rows=2 width=16) (actual time=0.005..0.010 rows=2 loops=1)

Filter: (client_id = clients.id)

-> Seq Scan on purchase_items (cost=0.00..1.50 rows=2 width=20) (actual time=0.002..0.005 rows=2 loops=2)

Filter: (purchase_id = purchases.id)

-> Seq Scan on items (cost=0.00..3.61 rows=1 width=31) (actual time=0.006..0.006 rows=1 loops=2)

Filter: (id = purchase_items.item_id)

Planning Time: 0.250 ms

Execution Time: 0.090 ms

(14 rows)

We notice a Seq Scan taking place on all tables. This can become a bottleneck, especially with large amounts of data, as it implies scanning the whole table. It would be intuitive to replace the sequential scan on the clients table with an index scan:

CREATE INDEX idx_clients_region ON clients (region);

On requesting a query execution plan again, using our original query, we would see the following:

QUERY PLAN

----------------------------------------------------------------------------------------------------

Nested Loop (cost=1.20..6.80 rows=3 width=65) (actual time=0.030..0.045 rows=3 loops=1)

-> Index Scan using idx_clients_region on clients (cost=0.42..2.50 rows=1 width=29) (actual time=0.015..0.020 rows=1 loops=1)

Index Cond: (region = 'North America'::text)

-> Nested Loop (cost=0.87..4.30 rows=3 width=36) (actual time=0.012..0.025 rows=3 loops=1)

-> Seq Scan on purchases (cost=0.00..2.50 rows=2 width=16) (actual time=0.005..0.008 rows=2 loops=1)

Filter: (client_id = clients.id)

-> Seq Scan on purchase_items (cost=0.00..1.50 rows=2 width=20) (actual time=0.002..0.004 rows=2 loops=2)

Filter: (purchase_id = purchases.id)

-> Seq Scan on items (cost=0.00..3.61 rows=1 width=31) (actual time=0.005..0.005 rows=1 loops=2)

Filter: (id = purchase_items.item_id)

Planning Time: 0.200 ms

Execution Time: 0.055 ms

(14 rows)

Adding an index to the clients table shows the planning time has been reduced by 0.05 milliseconds (ms), which amounts to 20%, and the execution time by 0.035 ms (~39%). As it is a simple query, execution times are already fast; however, more complex queries dealing with large volumes of data can be heavily optimized and fine-tuned with the help of query execution plans.

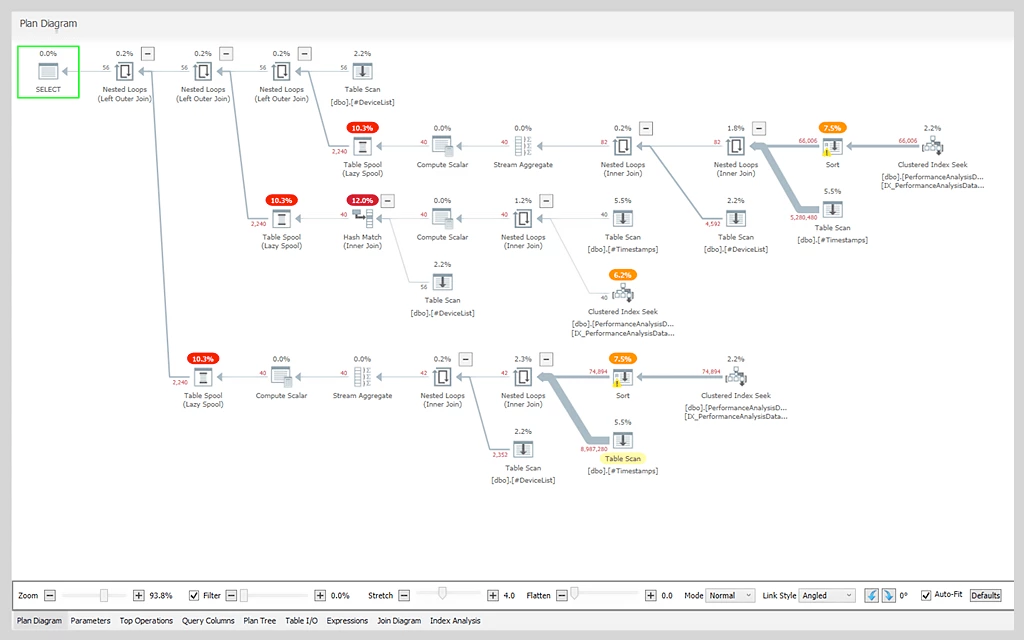

Monitoring tools can also provide users with graphic execution plans, which can sometimes be easier to interpret. Graphic plans typically include annotations, operator costs, and resource utilization graphs. The screenshot below is a plan diagram from SolarWinds Plan Explorer®, a query analysis tool.

Plan Explorer uses intuitive visuals and display options to inform users about the performance of their query.

Optimizing JOINs

Joins in SQL are operations used to combine rows from multiple tables into a single result set. Joins allow users to collate data from multiple tables, making it easier to work with. Optimizing joins can help prevent the unnecessary use of resources and computation of more data than is required.

A simple way to ensure joins are less resource intensive, particularly with large datasets, is to filter the data early. Using the WHERE clause when joining tables to select only the required data can reduce the burden on the database engine. Below, we join the customers and orders tables, but only for orders placed on or after January 1, 2024.

SELECT c.customer_name, o.order_id, o.amount

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id

WHERE o.order_date >= '2024-01-01';

The WHERE clause here ensures we don’t join unnecessary rows and scan only the data we need.

Efficient WHERE statements

The WHERE clause is often referred to as a “search argument,” or sarg. Often, WHERE clauses can filter more data than is required. By implementing a few good practices, you can prevent these statements from becoming inefficient.

- Ensure WHERE clauses don’t negate the use of indexes when used with functions. For example, the following statement would prevent the engine’s query optimizer from using an index on order_date due to the YEAR() function.

SELECT * FROM orders WHERE YEAR(order_date) = 2023;

- Use a range-based WHERE clause to make use of any index present.

SELECT * FROM orders

WHERE order_date >= '2023-01-01' AND order_date < '2024-01-01';

- Using EXISTS instead of IN when dealing with subqueries.

SELECT *

FROM clients c

WHERE EXISTS (SELECT 1 FROM purchases p WHERE p.client_id = c.id);

In the above scenario, EXISTS stops the engine from processing further once a single match is found. This method uses less memory and is faster than the following:

SELECT * FROM clients WHERE id IN (SELECT client_id FROM purchases);

However, IN looks for all matches in clients and is, therefore, less efficient.

Database normalization

Normalization is the process of restructuring a relational database to improve data retrieval ease and ultimately increase performance. It works on the principle of simplifying tables into smaller collections while ensuring that accuracy and integrity remain identical. Normalization becomes particularly useful as data volumes become significantly large and monolithic.

The benefits of normalizing a database are:

- Optimized storage space by reducing redundancy

- Faster access to data due to smaller tables

- More effortless scalability and modification without disrupting data integrity

- Reduced costs to write to a table

- Decreased possibility of logical errors in INSERT, UPDATE, and DELETE statements

- Clearly defining the relationships between tables to explain parent-child relationships in the data using primary and foreign keys

Normalization can be quantified into various forms based on how compartmentalized the original data becomes by adhering to levels known as normal forms (NFs). Let’s look at a simple example table (client_orders) and how we can normalize this table into various forms:

client_id | client_name | orders | product_id | product_name |

1 | Alice | Order1, Order2 | 101, 102 | Book, Pen |

2 | Bob | Order3 | 103 | Vinyl |

3 | Charlie | Order4, Order5 | 101, 104 | Book, Shirt |

First normal form

The first normal form (1NF) aims to reduce repetition and ensure each record is unique. Our example, normalized to the first form, would look like this:

client_id | client_name | order | product_id | product_name |

1 | Alice | Order1 | 101 | Book |

1 | Alice | Order2 | 102 | Pen |

2 | Bob | Order3 | 103 | Vinyl |

3 | Charlie | Order4 | 101 | Book |

3 | Charlie | Order5 | 104 | Shirt |

The benefits of 1NF include the following:

- Single values in every column.

- Reduced logical data anomalies. For example, in the original table, if we delete the record for Order3, all data for Bob, the customer, and Vinyl , the product, will be removed. The data, although essential, will no longer exist.

Second normal form

The second normal form (2NF) is intended to reduce redundant data by getting rid of duplicates. In this example, it would entail simplifying our original table into four:

clients table

client_id | client_name |

1 | Alice |

2 | Bob |

3 | Charlie |

orders table

order_id | client_id |

Order1 | 1 |

Order2 | 1 |

Order3 | 2 |

Order4 | 3 |

Order5 | 3 |

order_details table

order_id | product_id |

Order1 | 101 |

Order1 | 102 |

Order2 | 101 |

Order2 | 102 |

Order3 | 103 |

Order4 | 101 |

Order4 | 104 |

Order5 | 101 |

Order5 | 104 |

products table

product_id | product_name |

101 | Book |

102 | Pen |

103 | Vinyl |

104 | Shirt |

The optimization benefits of 2NF are:

- Write operations (such as changing product_name) are faster and easier.

- Data redundancy is reduced due to further simplification.

Third normal form

The third normal form (3NF) aims to remove transitive dependencies, ensuring the nonkey columns of a table depend only on the primary key and not on any other columns. The main focus is on the key, the whole key, and nothing but the key. In our example, if we were to add a product price column to the order_details table, it would be against the rules of 3NF, as price is not dependent on the order_id key. It would be more appropriate to add it to our products table:

product_id | product_name | price |

101 | Book | 10.00 |

102 | Pen | 2.00 |

103 | Vinyl | 20.00 |

104 | Shirt | 15.00 |

The most significant benefit of 3NF is that it reduces the chances of inaccuracy and incorrectly positioned data, which promotes long-term optimization gains.

Further normalization

Beyond 3NF, there are three more degrees of normalization that teams can implement depending on the level of decomposition needed:

- BCNF (Boyce-Codd normal form): Builds on 3NF and aims at removing any remaining partial dependencies

- Fourth normal form (4NF): This removes multi-valued dependencies from any table, ensuring no column depends on unrelated values

- Fifth normal form (5NF): The highest level of database normalization, aimed at decomposing tables to entirely remove join dependencies

Caching

Caching, in the context of database systems, is the technique used to temporarily store frequently accessed data with the intention of reducing numerous read- operations and decreasing query response times. Caching works most effectively in read-heavy applications such as social media feeds. The most significant incentives for using caching when managing database systems are:

- Lessening the burden on the database by reducing repeated read calls

- Reducing expensive network operations in the case of remote servers

- Speeding up response time for in-memory stored information

Most popular database systems have external cache plugins available for basic query result caching (storing the output of commonly used single queries in memory). For example:

- PostgreSQL: pgpool-II is a connection pooler that reads statement query caching for PostgreSQL databases.

- MySQL: The query cache feature has been deprecated in MySQL 5.7.20 and removed as of MySQL 8.0; however, platforms such as Redis can provide databases with in-memory query caching abilities.

- Oracle: A native result cache is provided, which exists either in memory (System Global Area) or client application memory.

Caching strategies for database optimization

Database architects usually decide on a caching strategy based on the nature of their database’s function. Caching strategies determine:

- How often data is to be cached

- When data needs to be invalidated

- Which data provides the most incentive to be cached

Some common caching strategies are:

- Cache-aside: The cache exists adjacent to the database and is queried first when an application requests data. If the specific data does not exist in the cache, then the database is queried. This strategy is particularly useful in read-heavy applications. However, care must be taken to ensure the cache is not stale and a disparity with the database does not exist for too long. Writing in this strategy takes place directly on the database.

- Read-through: This strategy is similar to cache-aside. The application tries to retrieve data from the cache. If there is no successful hit, the cache retrieves the data from the database and stores it. The cache then returns the data to the application as requested. Read-through caches are also integral to read-heavy applications, but are more commonly used in cases where data freshness is critical. Like cache-aside, writing takes place directly on the database.

- Write-through: The benefit here is any new data will be present in the cache, and a read operation will be completed with a lower latency. When an application wants to write data, it first writes to the cache, then the cache writes to the database. Pairing a write-through and read-through cache is usually considerably effective in maintaining data consistency.

Event | Cache-aside | Read-through | Write-through |

Cache miss | Application loads data into cache from the database | Cache loads data from the database | Cache loads data from the database |

Write behavior | Application writes to the database | Application writes to the database | Cache writes to the database |

Best applications | User sessions, product catalogs | Search engines, pricing markets | Financial transactions, online orders |

Hardware and configuration changes for database optimization

Upgrading a database's hardware and physical infrastructure can be a highly effective way to optimize and scale it. While making script and table structure optimizations can positively influence performance, dealing with high traffic and large data volumes is usually addressed by improving a database's hardware.

Some reasons why companies would consider upgrading their database hardware are as follows:

- Increased traffic as the business grows

- Increasing data volumes

- Geographic user base expansion

- Increased need to avoid single-point-of-failure risk

Hardware upgrades

Organizations can take several different approaches to upgrading their database hardware setups. Often, improved performance comes with a balanced combination of such modifications. Some areas of improvement are:

- CPU cores: Multi-threaded environments benefit heavily from the addition of multi-core processors. A single CPU core processor could take up to 1 second to execute 10 medium-complexity queries using a 1000-row database. Assuming perfect parallelism, a quad-core processor would execute the same in approximately 250 ms, and an eight-core processor would take 125 ms. However, it should be noted that linear scaling decreases with the addition of CPU cores and provides diminishing returns in terms of performance at some point. Therefore, the optimal number of CPU cores should be analyzed before scaling.

- RAM: Increased RAM improves database caching performance, promoting better query response times. If the RAM size is insufficient for a database system, then other hardware or software upgrades typically don’t offer much in terms of performance. There is no rule of thumb for the ratio of RAM size to data set size; however, 20% tends to be a good starting point for traditional applications with data sizes upwards of 1 terabyte. Applications dealing with lower sizes (hundreds of GBs) commonly use 50% or more. This number could change depending on how actively the application reads the database.

- Network bandwidth: Cloud-based databases show improved performance with increased network bandwidth. Larger bandwidth usually means lower latency, which decreases response times. Remote databases, such as AWS Aurora®, are designed to scale network bandwidth based on moving throughput requirements.

Clustering

Clustering is the process of reorganizing multiple database servers to work together as nodes, share workloads, and improve performance based on strategic allocation. Clustering enables larger user bases and better fault tolerance. There are four primary forms of clustering:

- Active-active clustering: All nodes perform similarly, with each one responsible for read/write operations. This form of clustering works best to avoid failures. If one node fails, others can take over its load.

- Active-passive clustering: A primary node handles all traffic while other nodes remain on standby in case of failure. This form of clustering can be highly effective when used with PostgreSQL, thanks to its Streaming Replication function, which transfers data from a primary node to a secondary node in real time.

- Shared-nothing clustering: Every node has its own physical presence in the form of RAM, CPUs, and storage. A shared network connects them, and traffic is shared by sharding the load. This is commonly used in large distributed systems.

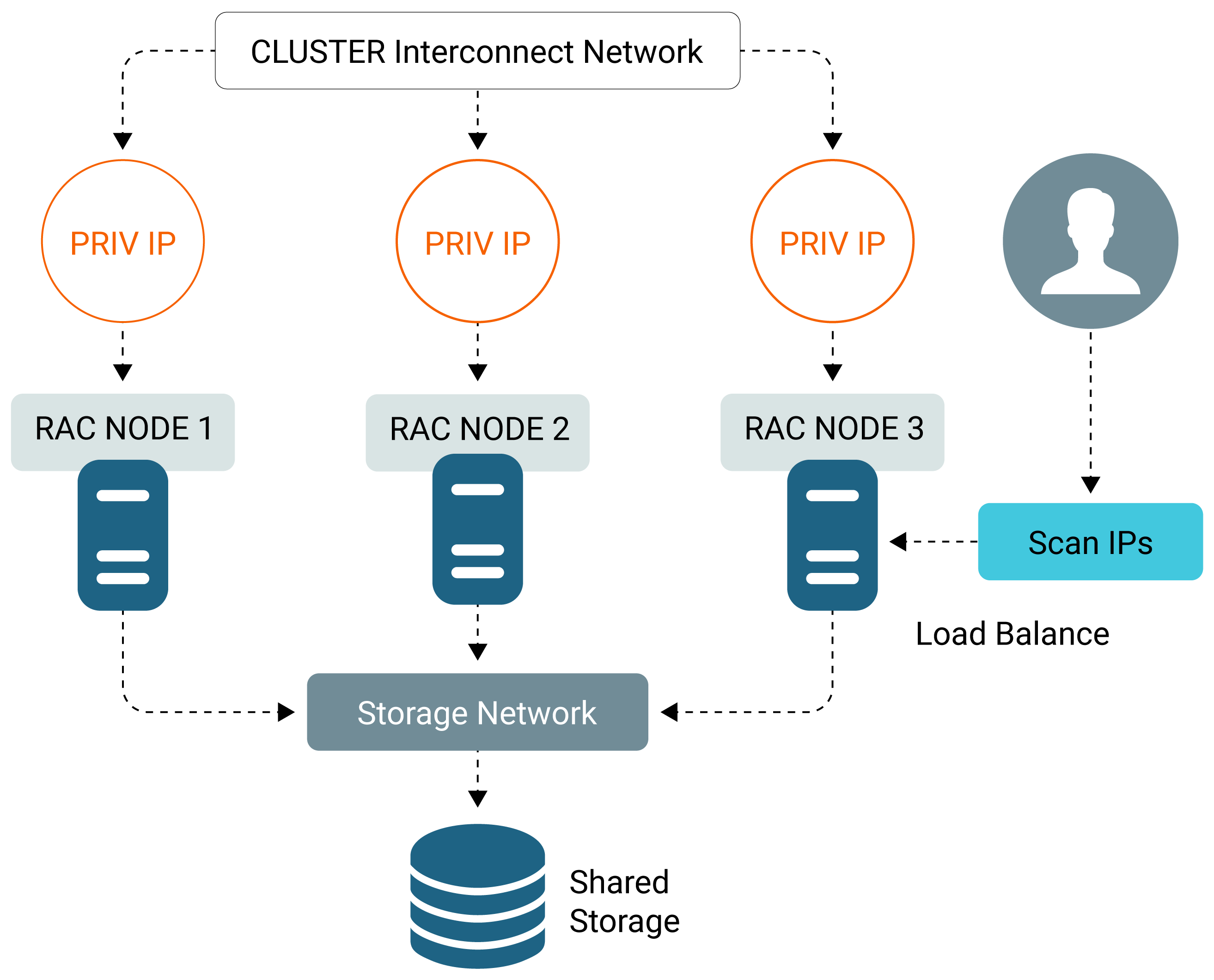

Shared-disk clustering: While every node has its own RAM and CPUs, storage is centralized. Applications built using Oracle Real Application Clusters follow this method of clustering.

Oracle Real Application Clusters shared-disk clustering. (Source)

Database monitoring

Monitoring database performance is one of the most effective ways to optimize your systems incrementally based on real-world data. Implementing monitoring systems helps engineers visualize their queries' performance, identify bottlenecks, and gauge the system's health. Most database monitoring tools allow users to investigate all the metrics mentioned earlier in this article.

DPA allows you to monitor multiple local and remote database instances on a single platform, with a focus on SQL optimization. Users who integrate DPA into their DBMS can easily take advantage of:

- Combinative management of various SQL database instances, such as PostgreSQL, SQL Server, MySQL, and Oracle, from one point of control

- Visibility into performance bottlenecks and areas for optimization by identifying top query, table, and index opportunities with relative ease

- Correlation with observability data and automation methods via REST APIs

DPA allows users to gain insights into all their SQL instances.

DPA does not require additional agent installations, making integration with your project straightforward.

Conclusion

Database optimization is one of the most critical aspects of managing and developing a database system. Engineers must consider various techniques to improve performance, ranging from query optimization to hardware upgrades. Ineffective database management can lead to prolonged waiting times and ultimately degrade the user experience. Monitoring tools, such as SolarWinds Database Performance Analyzer, can significantly reduce the burden of analyzing numerous performance metrics.