The Key Differences Between Normalizing and Denormalizing Databases

Both normalization and denormalization are crucial database design techniques, each serving different functions depending on the application's objectives and workload patterns.

Normalization is a structured, rule-based process that uses increasingly rigorous forms, known as normal forms (NFs), to arrange data into clearly defined and connected tables. Its main objectives are to reduce redundancy, improve data integrity, and guarantee system-wide consistency.

Denormalization involves reversing or relaxing higher levels of normalization; transforming a database from fifth normal form (5NF) down to second normal form (2NF) is an example. Denormalization introduces some controlled redundancy by combining related data into fewer tables. In many cases, denormalization is used to by reducing the number of joins needed.

Denormalization usually makes queries run faster and much easier to write. The main idea is to avoid using complex joins. On the other hand, normalization distributes data across several related tables, aiming to increase consistency and reduce anomalies. Performance, storage, and maintainability are all trade-offs that must be considered when selecting an appropriate strategy.

In this article, we look at the following topics:

- Definitions and goals of normalization and denormalization

- Practical implementation techniques for both approaches

- Their effects on performance, storage, data integrity, and redundancy

Understanding these strategies will allow you to be better prepared to choose the right approach, or a combination of both, that best fits your application's functional and performance needs.

Summary of key database normalization and denormalization concepts

Concept | Normalization | Denormalization |

Definition and goals | Organizes data into smaller, related tables to reduce redundancy, explicitly declare relationships, and help ensure data integrity. | Combines tables and introduces multiple copies of the same data in the interest of improved read performance and to speed up queries. |

Implementation techniques | Uses 1NF, 2NF, 3NF, and higher forms, such as Boyce-Codd normal form (BCNF), 4NF, and 5NF, with referential integrity constraints, including foreign keys. | Introduces data duplication, summary tables, materialized views, and pre-aggregated data. |

Query performance and complexity | Requires multiple joins, increasing SQL query complexity and potentially slowing down query performance. | Needs fewer joins, improving query performance in read-heavy applications, especially when combined with summary tables and pre-aggregated data. |

Data redundancy and storage efficiency | Reduces redundancy, optimizing storage efficiency through better data type selection and minimizing data inconsistencies. | Increases redundancy, leading to higher storage requirements but optimizing for read-heavy queries. |

Data integrity and maintenance | Maintains data integrity and reduces data writing anomalies by enforcing NFs and minimizing redundancy, making maintenance easier. Still requires maintenance to regularly update index statistics and occasionally defragment data. | Requires the same kind of maintenance on tables and indexes as a normalized database. Requires additional maintenance for big data aggregations, such as Delta Store upkeep on a columnstore index in Microsoft SQL Server®. |

Write performance and scalability | Faster writes with less data duplication and is more scalable. Usually features short and brief transactions, thus enabling better transaction log throughput. | Slower writes due to the architectural design required in large data warehouse applications, the most common type of denormalized database. In most cases, data warehouses loaded with data regularly using extract, transform, load (ETL) processes instead of short and brief data entry transactions. |

Use cases | Best suited for transactional systems requiring data integrity (e.g., banking and inventory management). | Best suited for read-heavy systems, such as data warehouses, reporting tools, and business intelligence (BI) applications. Also used in online transaction processing (OLTP) systems, requiring speed at the expense of redundant data sets. |

Cloud environment considerations | Used in transactional applications; well suited for Amazon RDS®, Microsoft Azure SQL Database®, or Google Cloud Platform® (GCP). | Used in data warehousing, data lakehouse, and analytic applications; ideal for Google BigQuery®, Amazon Redshift®, or Microsoft Azure Synapse Analytics®. |

Definitions and goals

Normalization

Normalization is a database design technique that organizes data into smaller, related tables. The goal is to reduce duplication, clearly define relationships among tables, and improve consistency by following a set of guidelines known as NFs. NFs help to ensure each table focuses on a single subject and data dependencies are logical and efficient.

Normalization allows designers to break large, flat data structures into smaller, more manageable pieces, with each table representing a specific entity or concept. These tables are connected using keys, which help preserve data integrity and make it easier to retrieve information accurately and flexibly.

The main goal of normalization is to reduce insertion, update, and deletion anomalies that can compromise the accuracy and reliability of the database. By organizing data to avoid unnecessary repetition, normalization reduces problems, such as update errors or missing information, when inserting or deleting records. It is especially useful in systems where accuracy is critical, such as those used in banking or for order processing, as it keeps everything consistent and easy to manage.

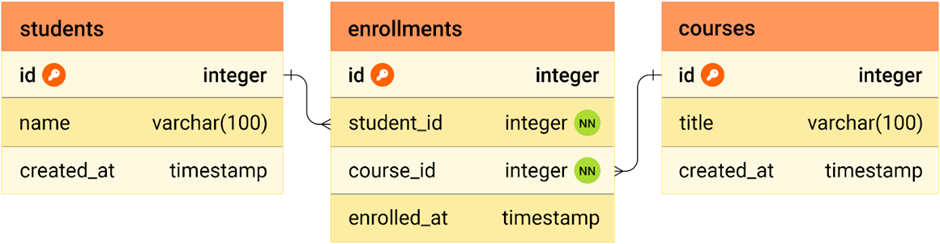

An entity-relationship diagram (ERD) illustrates how a normalized database organizes data into multiple related tables—such as students, courses, and enrollments. This minimizes redundancy and highlights the relationships among entities.

Diagram generated with the dbdiagram.io tool.

Denormalization

Denormalization is a database design method where tables that have already been organized into higher NFs are merged. This technique reintroduces redundancy to simplify complexity and enhance read performance.

Through merging tables and reducing the need for multiple joins, denormalization can accelerate query speed, particularly in scenarios where quick data retrieval is essential. This is especially valuable for read-heavy applications and BI systems, where fast data analysis is a priority. In these cases, data may be aggregated into summary records, making it easier to retrieve large volumes of data with fewer computational resources.

Denormalization is mainly about making queries faster. Simplifying the database structure and reducing how much work a query has to do speeds up data retrieval. This is especially helpful in systems where quick access to information is essential, such as dashboards or reports used for decision-making.

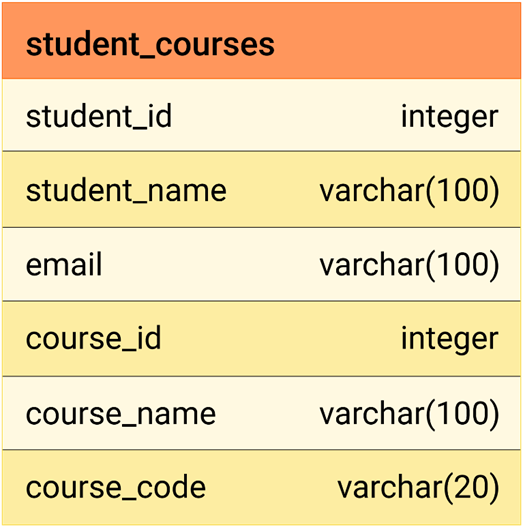

Tables are combined in a denormalized schema to reduce the need for joins, thereby improving read performance. In this instance, as the diagram below shows, it results in a single table that contains both student data and their course enrollments. In this example, we can see that course_name is repeated multiple times. In contrast, a normalized database would have the table with the name listed once in separate tables, as we saw in the previous example.

ERD showing a denormalized schema with combined student and enrollment data, repeating course details for faster reads.

Normalization implementation

This section looks at how normalization is applied in database design. The goal of normalization is to reduce redundancy and limit dependencies, which is done by splitting large tables into smaller ones. Each of these smaller tables has a clear purpose and defined relationships with others. When inserting, updating, or deleting data, this setup helps avoid common anomalies.

The process is guided by a series of rules known as NFs. In this article, we focus on the key NFs relevant to most practical implementations: 1NF, 2NF, 3NF, BCNF, 4NF, and 5NF.

The following schema represents an example of a non-normalized design, where multiple course-related details are stored within a single table. It repeats data for each student’s course enrollment, leading to potential redundancy and inefficiency. We will use this in our examples to demonstrate normalization.

CREATE TABLE online_learning_info (

student_id INT PRIMARY KEY,

student_name VARCHAR(100),

course_id1 VARCHAR(20),

course_name1 VARCHAR(100),

instructor_name1 VARCHAR(100),

instructor_email2 VARCHAR(100),

course_certified1 BOOLEAN,

course_id2 VARCHAR(20),

course_name2 VARCHAR(100),

instructor_name2 VARCHAR(100),

instructor_email2 VARCHAR(100),

course_certified2 BOOLEAN

);

First normal form

1NF organizes data into tables where each column contains only atomic values. This means each field holds only a single value, and there are no repeating groups or arrays. This helps maintain a consistent structure and eliminate redundancy caused by multiple values in a single column.

In this 1NF design, the data is split into two tables: one for students and another for course enrollment. This eliminates repeating groups and multi-valued columns, with each course enrollment stored in a separate row for each student. Each column contains atomic values, and relationships between students and courses are maintained through a foreign key.

CREATE TABLE students (

student_id INT PRIMARY KEY,

student_name VARCHAR(100)

);

CREATE TABLE course_enrollment (

student_id INT,

course_id VARCHAR(20),

course_name VARCHAR(100),

instructor_name VARCHAR(100),

instructor_email VARCHAR(100),

course_certified BOOLEAN,

PRIMARY KEY (student_id, course_id),

FOREIGN KEY (student_id) REFERENCES students(student_id)

);

Second normal form

To achieve 2NF, the table must first be in 1NF, and all nonkey attributes must be fully dependent on the entire primary key. This step addresses partial dependencies, where a nonkey attribute depends only on part of a composite primary key.

To move a table into 2NF, you need to remove partial dependencies, which means splitting the data into smaller tables. Each new table should have a primary key that uniquely identifies its records. All other columns in the table should depend entirely on that key. Doing this helps reduce redundancy and keeps the data more consistent.

CREATE TABLE students (

student_id INT PRIMARY KEY,

student_name VARCHAR(100)

);

CREATE TABLE courses (

course_id VARCHAR(20) PRIMARY KEY,

course_name VARCHAR(100),

instructor_name VARCHAR(100),

instructor_email VARCHAR(100),

);

CREATE TABLE course_enrollment (

student_id INT,

course_id VARCHAR(20),

course_certified BOOLEAN,

PRIMARY KEY (student_id, course_id),

FOREIGN KEY (student_id) REFERENCES students(student_id),

FOREIGN KEY (course_id) REFERENCES courses(course_id)

);

Third normal form

To reach 3NF, a table already in 2NF has the rule added that no nonkey column can depend on another nonkey column. This type of indirect relationship is called a transitive dependency and occurs when a nonkey attribute relies on another one, which in turn relies on the primary key. This can be fixed by breaking the table into smaller ones based on how the data is related.

CREATE TABLE students (

student_id INT PRIMARY KEY,

student_name VARCHAR(100)

);

CREATE TABLE instructors (

instructor_id INT PRIMARY KEY,

instructor_name VARCHAR(100),

instructor_email VARCHAR(100)

);

CREATE TABLE courses (

course_id VARCHAR(20) PRIMARY KEY,

course_name VARCHAR(100),

instructor_id INT,

FOREIGN KEY (instructor_id) REFERENCES instructors(instructor_id)

);

CREATE TABLE course_enrollment (

student_id INT,

course_id VARCHAR(20),

course_certified BOOLEAN,

PRIMARY KEY (student_id, course_id),

FOREIGN KEY (student_id) REFERENCES students(student_id),

FOREIGN KEY (course_id) REFERENCES courses(course_id)

);

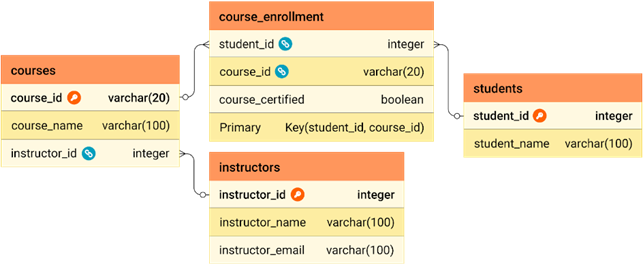

Here, the transitive dependency was removed by moving instructor_email to the instructors table and linking instructor_name through instructor_id. The courses table now stores only instructor_id, eliminating redundancy. All nonkey attributes are now directly dependent on their primary keys, satisfying 3NF.

Below is the ERD representing the relationships between students, courses, instructors, and course enrollments in the online learning platform.

ERD showing relationships between students, courses, instructors, and enrollments in an online learning platform.

Boyce-Codd normal form

BCNF is a stricter version of 3NF that eliminates the anomalies caused by functional dependencies where a noncandidate key acts as a determinant. While 3NF handles most redundancies, it allows situations where a noncandidate key determines another attribute. BCNF helps ensure every determinant is a candidate key, removing these remaining anomalies.

Consider a university course scheduling scenario where a table records which course is held in which room and at what time.

CREATE TABLE course_offering (

course_id VARCHAR(20),

time_slot VARCHAR(20),

room_number VARCHAR(10),

PRIMARY KEY (course_id, time_slot)

);

Now, assume each room is available at only one specific time slot, creating a dependency where the room number determines the time slot. However, in the current table, room_number is not a candidate key because the primary key is the combination of course_id and time_slot. This violates BCNF because a noncandidate key determines another attribute. To resolve this and satisfy BCNF, the table must be decomposed to isolate the dependency: one table should map each room_number to a single time_slot, and another should associate each course_id with a room_number using a foreign key.

CREATE TABLE room_schedule (

room_number VARCHAR(10) PRIMARY KEY,

time_slot VARCHAR(20)

);

CREATE TABLE course_offering (

course_id VARCHAR(20) PRIMARY KEY,

room_number VARCHAR(10),

FOREIGN KEY (room_number) REFERENCES room_schedule(room_number)

);

This helps ensure all determinants are candidate keys, removing redundancy and maintaining data consistency.

Fourth normal form

4NF eliminates multi-valued dependencies by storing independent attributes in separate tables. When multiple independent values exist for a single key, they should be divided into distinct tables to maintain data integrity.

Consider a table that tracks students and their skills.

CREATE TABLE student_skills (

student_id INT,

student_name VARCHAR(100),

skill VARCHAR(100),

language VARCHAR(100)

);

In this table, a student can have multiple skills and speak several languages, creating a multi-valued dependency. Both skill and language depend on student_id, but they are independent of each other. To bring this into 4NF, the table should be split into two tables: one for student skills and another for student languages.

CREATE TABLE student_skills (

student_id INT,

skill VARCHAR(100),

PRIMARY KEY (student_id, skill)

);

CREATE TABLE student_languages (

student_id INT,

language VARCHAR(100),

PRIMARY KEY (student_id, language)

);

This helps ensure each independent set of values is stored separately, eliminating the multi-valued dependency.

Fifth normal form

5NF helps to decompose data into smaller tables only when it's necessary to eliminate redundancy, while preserving the ability to reconstruct the original data through lossless joins. This form is typically applied to complex many-to-many relationships that can be further broken down.

For example, consider a scenario where a table stores information about courses, students, and instructors.

CREATE TABLE course_instructor_student (

course_id INT,

instructor_id INT,

student_id INT,

PRIMARY KEY (course_id, instructor_id, student_id)

);

In this table, a many-to-many relationship exists between courses, instructors, and students. The table can be further decomposed into smaller tables to avoid redundancy. To bring this into 5NF, the table should be split into three tables: one for course-student relationships, another for course-instructor relationships, and a third for student-instructor relationships.

CREATE TABLE course_student (

course_id INT,

student_id INT,

PRIMARY KEY (course_id, student_id)

);

CREATE TABLE course_instructor (

course_id INT,

instructor_id INT,

PRIMARY KEY (course_id, instructor_id)

);

CREATE TABLE student_instructor (

student_id INT,

instructor_id INT,

PRIMARY KEY (student_id, instructor_id)

);

Denormalization implementation

In this section, we will explore some key techniques for implementing denormalization, including data duplication, summary tables, materialized views, and pre-aggregated data. These techniques are particularly useful for optimizing systems with complex queries and large volumes of data.

Data duplication across multiple tables

Allowing some data to be duplicated across tables can reduce the need for joins, which usually leads to faster queries. For example, when a customer places multiple orders, address information can be stored directly on the orders table. Then there would be no need to join the orders table with the customers table every time order details are retrieved.

Consider an e-commerce platform where customer addresses are stored in a customers table:

CREATE TABLE customers (

customer_id INT PRIMARY KEY,

customer_name VARCHAR(100),

customer_address VARCHAR(255),

);

Instead of joining this table with the orders table every time to fetch customer details, the customer's address could be duplicated directly in the orders table. This helps retrieve order details faster, as it reduces the need for repeated joins.

CREATE TABLE orders (

order_id INT PRIMARY KEY,

customer_id INT,

order_date DATE,

customer_address VARCHAR(255), -- data duplication

FOREIGN KEY (customer_id) REFERENCES customers(customer_id)

);

Using summary tables

Summary tables are precomputed and store aggregate data (such as totals or averages) to speed up read-heavy queries. These tables reduce the complexity of calculating aggregates during runtime.

Consider a sales database that stores detailed transaction data. Rather than recalculating total sales with each query, a summary table can store pre-aggregated data. This allows queries, such as finding total sales per product, to run more quickly, since the calculations have already been done in advance.

CREATE TABLE sales_summary (

product_id INT,

total_sales DECIMAL(10,2),

total_quantity INT,

PRIMARY KEY (product_id)

);

Materialized views

Materialized views are precomputed views that store query results physically. They are typically used in complex reporting scenarios where the query execution involves multiple tables and joins. Materialized views store the result set and can be refreshed periodically, unlike regular views. Materialized views are known as indexed views on SQL Server.

A materialized view can be used to store the results of a complex join, such as an aggregation between sales and products.

CREATE MATERIALIZED VIEW sales_report AS

SELECT p.product_name, SUM(s.sales_amount) AS total_sales

FROM sales s

JOIN products p ON s.product_id = p.product_id

GROUP BY p.product_name;

When querying sales_report, the system doesn’t need to recompute the aggregation, making reporting faster. Materialized views can be refreshed on a schedule to help ensure the data remains up to date. They are commonly used in Oracle® and PostgreSQL® and are less frequently implemented in SQL Server.

Pre-aggregated data

Pre-aggregating data before it is stored can save time during query execution. This technique is especially useful when queries require aggregated data over large data sets, such as counting, summing, or averaging values.

For example, if a retail store needs to display the total sales per month, pre-aggregated data could be stored in a monthly_sales table:

CREATE TABLE monthly_sales (

month INT,

total_sales DECIMAL(10,2),

PRIMARY KEY (month)

);

Every time a sale is made, the total_sales value for the respective month is updated in the monthly_sales table. In this case, queries that fetch the monthly sales total no longer require scanning the entire sales table, resulting in a significant improvement in query performance.

Query performance and complexity

When designing a database, one of the most significant trade-offs to consider is how normalization and denormalization affect query performance and complexity.

Normalization

A normalized database spreads data across several related tables to avoid repetition and keep the information consistent. This promotes efficient storage and data integrity, but it can often lead to more complex queries that require multiple table joins. These joins can slow down query performance, particularly as the data set grows or in scenarios with high read frequencies.

The example below, which is a typical e-commerce database, shows how normalization impacts both the structure and performance of queries. The design breaks down data into related tables, such as customers, orders, products, and order details, to avoid duplication and maintain consistency. The following schema and queries demonstrate how normalized databases manage relationships through joins, particularly when handling large volumes of data.

CREATE TABLE customers (

customer_id SERIAL PRIMARY KEY,

customer_name VARCHAR(100),

customer_email VARCHAR(100),

customer_address TEXT

);

CREATE TABLE orders (

order_id SERIAL PRIMARY KEY,

customer_id INT REFERENCES customers(customer_id),

order_date DATE

);

CREATE TABLE products (

product_id SERIAL PRIMARY KEY,

product_name VARCHAR(100),

price DECIMAL(10, 2)

);

CREATE TABLE order_details (

order_detail_id SERIAL PRIMARY KEY,

order_id INT REFERENCES orders(order_id),

product_id INT REFERENCES products(product_id),

quantity INT

);

The query below retrieves the order history for a specific customer by joining multiple normalized tables: customers, orders, order_details, and products. It demonstrates the need for multiple joins to gather related data in a normalized schema.

SELECT

c.customer_name,

c.customer_address,

o.order_date,

p.product_name,

od.quantity

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id

JOIN order_details od ON o.order_id = od.order_id

JOIN products p ON od.product_id = p.product_id

WHERE c.customer_id = 5;

Denormalization

Denormalized databases pull related data into fewer tables, even if it means repeating some information. This setup simplifies queries and can boost performance in read-heavy systems, since there are fewer joins to process. For instance, a denormalized table might combine customer and order details into one structure to allow faster access.

CREATE TABLE order_summary (

customer_id INT,

customer_name VARCHAR(100),

customer_email VARCHAR(100),

customer_address TEXT,

order_date DATE,

product_name VARCHAR(100),

quantity INT,

price DECIMAL(10,2)

);

The example below shows how querying this denormalized structure becomes much simpler.

SELECT customer_name, customer_address, order_date, product_name, quantity

FROM order_summary

WHERE customer_id = 5;

In a benchmark test with 10,000 customers, the normalized query—despite involving multiple JOINs across four tables—performed efficiently, with a total execution time of approximately 34 milliseconds (ms), thanks to indexed lookups on customer_id, order_id, and product_id. It scanned fewer rows in total because it navigated relational connections precisely. On the other hand, the initial denormalized query, without an index on customer_id, required a full table scan, resulting in a slower performance. It had an execution time of around 58.8 ms and scanned over 189,000 rows.

At this point, the denormalized query was optimized by adding an index on customer_id.

CREATE INDEX

idx_customer_id

ON

order_summary(customer_id);

After creating the index, the denormalized query became the fastest, being completed in only 17.2 ms, with significantly fewer rows examined. This clearly shows denormalized tables can outperform normalized ones in read-heavy scenarios, especially as the table size increases and appropriate indexing is applied. However, for smaller denormalized tables, indexes may not always be necessary to achieve optimal performance, as the overhead of indexing might not justify the gains.

Query Type | Execution Time (ms) | Rows Scanned |

Normalized (JOINs) | 34 | 18,841 |

Denormalized (no index) | 58.8 | 189,327 |

Denormalized (with index) | 17.2 | 18,841 |

Data redundancy and storage efficiency

One of the primary trade-offs between normalization and denormalization lies in how data redundancy and storage efficiency are managed.

Normalization

In a normalized , data is split into several related tables. Each piece of information is stored only once. This reduces redundancy and makes storage more efficient. As a result, the chance of inconsistencies is much lower. For example, customer information isn’t repeated with every order. In a normalized setup, it’s stored in a separate table and linked using foreign keys. This not only saves space but also helps ensure any update to customer data—e.g., an address change—propagates consistently throughout the database.

Using efficient data types is also important in normalized schemas. Choosing appropriate data types, for instance, using INT instead of VARCHAR for IDs, or DATE instead of DATETIME when time isn’t needed, further improves storage efficiency. Poor data type choices can quickly erode the storage benefits gained from normalization.

Denormalization

Denormalization, by contrast, intentionally duplicates data by combining multiple tables into fewer or a single table. While this approach increases storage requirements due to redundant data—e.g., repeating customer names and addresses with each order—it is often justified in read-heavy environments, such as data warehouses or large-scale analytics platforms. These systems prioritize query performance over storage efficiency, as they handle massive volumes of reporting and analytical queries that benefit from reduced joins and faster access paths.

Read-heavy queries in denormalized systems are common because these systems are typically used for online analytical processing (OLAP) workloads, where thousands or millions of rows are scanned for aggregations, trends, and reporting purposes. By storing data in a denormalized format, these queries can retrieve necessary information in fewer disk I/O operations, improving speed even if it means storing more redundant data.

Let’s recall the previous e-commerce application example:

- Normalized schema: A customers table stores customer data, and an orders table references customers by a foreign key. A customer's information is stored once, regardless of the number of orders they place.

- Denormalized schema: The order_summary table stores complete customer information along with each order, resulting in duplicated customer data across multiple records.

The table below compares storage usage between normalized and denormalized for handling 100,000 orders, highlighting the increased storage demand in the denormalized approach due to duplicated customer data.

Schema Type | Storage Usage |

Normalized | 7.42MB |

Denormalized | 19.55MB |

Data integrity and maintenance

One of the most important factors in database design is ensuring data stays accurate and consistent over time. Normalization and denormalization approach this in significantly different ways. Normalized databases generally make it easier to maintain data integrity, while denormalized ones introduce challenges that require extra care and planning.

Normalization

Normalized schemas help keep data clean and consistent by breaking it down into related tables and reducing repetition. This setup works especially well in systems that handle a high volume of transactions, such as OLTP systems, where data is constantly being inserted, updated, or deleted.

One of the main reasons for using normalization is to prevent data anomalies and issues that can cause inconsistencies or corrupt data if not handled properly. These problems tend to show up more often in that aren’t normalized or are poorly structured:

- Insertion anomalies: Occur when certain data cannot be added to the database without the presence of other, unrelated data. For example, in a single flat table storing both customers and orders, inserting a new customer who hasn’t placed an order might be impossible without including a placeholder order row.

- Update anomalies: Happen when the same data is stored in multiple places and must be updated consistently across all of them. For example, if a customer’s address is repeated in numerous order rows, updating the address in only some of them creates inconsistency.

- Deletion anomalies: Arise when deleting a piece of data inadvertently causes the loss of other valuable information. For instance, deleting the last order of a customer in a flat table could also remove all customer information if it is not stored separately.

Normalization addresses these issues progressively through NFs:

- 1NF: Eliminates repeating groups by ensuring each field contains only atomic (indivisible) values, turning multi-valued attributes into separate rows or tables.

- 2NF: Ensures every nonkey attribute depends on the whole primary key, and not only a part of it. This matters most in tables that use composite keys, where some columns might only depend on part of the key. In such cases, it's best to move those columns into a separate table to keep the data structure clean and consistent.

- 3NF: Takes it a step further by making sure nonkey attributes depend only on the primary key, and not on other nonkey attributes. This helps break down the data into more focused tables, reducing duplication and lowering the chances of problems when updating the data.

By organizing data into separate, interrelated tables and applying these normalization rules, a database becomes far more robust against anomalies. As a result, maintaining a normalized database is generally simpler because each data element exists in only one place, and changes propagate logically through relational links.

Integrity is further enforced through database constraints, such as primary keys, foreign keys, NOT NULL, UNIQUE, and CHECK constraints, along with proper data typing. These mechanisms help ensure only valid, nonconflicting data is stored, preserving referential and domain integrity.

Denormalization

Denormalized intentionally introduce data redundancy to simplify queries and boost performance, especially for analytics, but this trade-off affects data integrity. When the same information, such as customer details, is stored in multiple places (for example, repeated in every order), any update must be made everywhere. A single missed copy can also lead to inconsistencies and potential errors in the data.

Maintaining a denormalized database can be more complex, especially during update operations. Since the same data appears in multiple places, you often need to have extra logic in the application or rely on batch ETL processes—common in data warehouses—to keep everything in sync. In these cases, database-level constraints, such as foreign keys, are often disabled or avoided entirely to improve performance, putting the burden of maintaining data integrity on external processes.

Consider the following simplified SQL example to illustrate the complexity of maintaining consistency in a denormalized schema.

UPDATE order_summary

SET customer_address = '123 Main Street'

WHERE customer_id = 5;

This query must be run across all records where the customer appears. If customer data is spread across multiple tables or storage partitions, additional logic is needed to maintain consistency. In large-scale data warehouses, updates like these are typically handled by batch ETL jobs that reload entire tables or use change data capture (CDC) mechanisms, both of which increase maintenance complexity.

Data warehouses also usually focus more on performance than enforcing strict data rules. As a result, they often don’t have built-in constraints like traditional databases. Instead, they depend on well-designed data validation processes and regular audits to catch and correct issues. However, due to data duplication across records, which increases the amount of data written and makes maintaining consistency more difficult, writes typically take longer.

Write performance and scalability

Normalization

Normalized databases provide fast and efficient write operations, particularly in transactional environments, such as OLTP systems. Since data is split across multiple related tables, each write usually affects only a small part of the database. This reduces contention and lock times. Normalized typically manage growth better from a scalability perspective. Improved scalability, partitioning, and indexing, and reduced storage usage because of less data duplication, are all made possible by the structure of normalized data.

Denormalization

Denormalized databases focus on read performance, often in data warehouses or OLAP systems. However, writes tend to be slower because data is duplicated across records, increasing the amount of data written and the complexity of keeping it consistent. Storage needs also grow faster, which can slow data loading. Despite this, many warehouses accept slower writes, using batch ETL processes instead of frequent small updates.

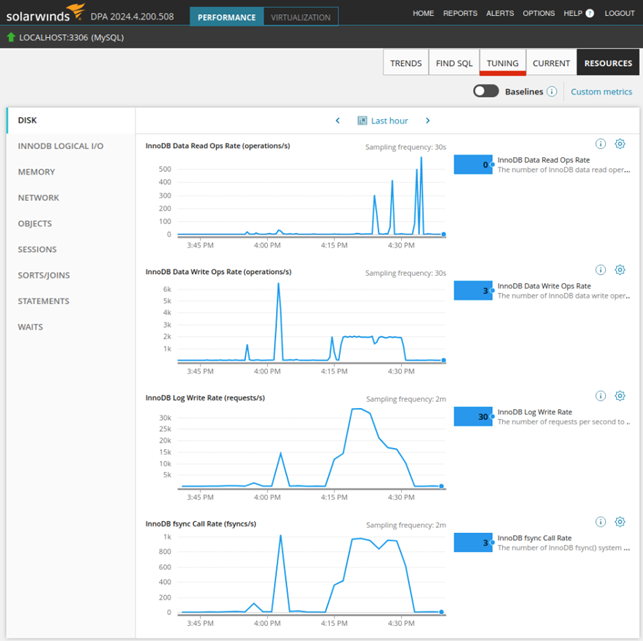

SolarWinds® Database Performance Analyzer enables you to track key metrics, such as disk I/O, InnoDB logical I/O, memory usage, and waits, to evaluate both write performance and scalability. For example, the Disk I/O Throughput chart shown below gives insight into how efficiently the system handles write operations. Meanwhile, the Waits section helps identify if processes are being delayed due to waiting on resources, such as locks or disk access, which can highlight potential bottlenecks.

Screenshot of Database Performance Analyzer, which helps monitor key metrics, such as disk I/O, InnoDB I/O, memory usage, and waits, to identify performance issues.

Use cases

Whether to normalize or denormalize a database typically depends on the specific needs and behavior of the application. Here are some common scenarios that highlight when each approach is most effective.

Normalization

Normalization works best for transactional systems, where data integrity, accuracy, and consistency are key. In these environments, where operations such as inserts, updates, and deletes occur frequently, minimizing data redundancy is crucial for maintaining reliable results and efficient storage. Some common use cases include:

- Banking systems: Normalization helps ensure financial data (e.g., account balances and transaction history) is consistent and accurate across complex relationships.

- Inventory management systems: Normalization enables tracking real-time stock levels, purchases, and sales with high transactional throughput and referential integrity.

- Customer relationship management (CRM): Normalization lets you maintain clean, nonredundant customer and interaction data to support sales and support workflows.

- Enterprise resource planning (ERP): Normalization helps coordinate data across different business functions (finance, HR, procurement, etc.) with a high need for normalization to avoid inconsistencies.

Denormalization

Denormalization works best for read-heavy applications where fast query performance, quick reporting, and easier data retrieval are more important than strict adherence to normalization. These systems typically aggregate data from various sources and optimize it for speedy analysis instead of frequent updates. Some common use cases for denormalization include:

- Data warehouses: Denormalization allows the aggregation of transactional data from various sources for historical analysis and BI.

- BI tools: Prestructured data models enable faster dashboard rendering and analytical queries.

- Reporting systems: Denormalization helps reduce the need for complex joins by storing data in a flatter, more accessible format, improving reporting speed.

- E-commerce analytics: Denormalization supports marketing and operational teams with quick access to sales trends, customer behavior, and conversion rates.

- Log and event management systems: Denormalization enables storing large volumes of read-optimized log data with minimal write updates.

Cloud environment considerations

Most managed services in the cloud, such as Amazon RDS, Microsoft Azure SQL Database, and Google Cloud SQL®, primarily use normalized schemas. These databases are optimized for transactional workloads requiring data integrity, reliability, and consistency. They are suitable for transactional purposes, which demand the correctness of data and reliability, and formal systems, which are of utmost concern in sectors such as banking and finance, logistics, and supply chain management.

Google BigQuery, Amazon Redshift, Snowflake®, and Microsoft Azure Synapse Analytics highlight cloud data warehouses and lakehouses making use of denormalized structures for data. These products are intended to enable reading analytic queries, which is strategically important for retail, marketing, and finance companies, as they execute complex analytical queries at scale and respond with low latency.

Cloud environments help constrain these costs with the use of normalization, especially under pay-as-you-go services. However, this may slow down further operations that involve complex joins. This is particularly true in transactional systems, such as inventory control or CRM and ERP systems, which have low-latency query requirements.

While advocating more storage, denormalization provides superior performance in environments with high query volume. Such is the case with visualization dashboards, real-time analytics, and other similar BI tools. Snowflake and Amazon Redshift platforms provide rich functionality for business use in such environments, as they employ schemas that require minimal joins.

Recommendations

Assign greater precedence to normalization in databases where data consistency, accuracy, and integrity are vitally important, such as in banking, medical, or stock control. Normalized databases remove redundancy and provide uniform data relationships within the system.

Apply denormalization to read-intensive configurations, such as reporting systems, data warehouses, or BI systems, where the benefit of faster reads is worth the extra cost of redundant data and the management of duplicate pieces of information across multiple tables. Denormalized databases can enhance query performance and improve user experience, especially when dealing with massive data sets.

Implement a hybrid approach for most modern applications. Use normalization for transactional processing and denormalization for reporting and analysis to trade off data integrity versus performance, particularly in cloud-native or microservices applications.

Regularly review and adjust your database schema as data sizes and patterns change. Continuous optimization for performance, storage space efficiency, and business needs is required to support long-term scalability and maintainability.

Last thoughts

Both normalization and denormalization are important in schema design, but each applies better to a different type of need, depending on the use case. In transactions such as banking, inventory management, and ERP platforms, accuracy, redundancy control, and storage over efficiency are preferred; therefore, normalization is usually used. Denormalization is the complete opposite and is preferred in systems with heavy-read loads, such as data warehouses and BI tools, where data retrieval speed is the priority.

Either one of these methods, or a mix of the two, can be chosen based on the system's usage, importance of performance, and the degree of data consistency that can be tolerated. An evolving system can benefit from periodic assessments and changes to the database schema to help ensure the balance between performance, precision, and scalability.

Performance issues can be monitored quickly using tools such as SolarWinds Database Performance Analyzer, which provides access to real-time information regarding overall database performance. This enables easier detection of issues, optimization of system queries, and consistent upkeep for optimal system performance.