O11y: Best Practices, Examples, and Tutorial

Introduction

Observability, commonly abbreviated as o11y (an "o", eleven letters, and a "y"), is a composite strategy that monitors a service’s performance, availability, and quality, as well as how these aspects affect other system components.

So, is observability different from the practice of simply monitoring systems and applications? We must answer this question in the context of the history of application architecture. Even though some practitioners use the terms interchangeably, o11y takes a modern approach to monitoring.

When physical servers hosted monolithic applications, client-server architectures relied primarily on monitoring system metrics such as CPU and memory usage. As application architecture transitioned from the client-server model to microservices, the priority shifted from monitoring systems (CPU and memory) to monitoring services (latency and error rate). During this transition, new technologies allowed systems administrators to index and search terabytes of system and application logs distributed throughout their infrastructure, and to trace a single transaction through the application tiers.

The industry needed a new name to distinguish monitoring services using new technologies from the old practice of monitoring infrastructure usage metrics. This is how the term o11y gained prominence, particularly in the context of complex, distributed systems. However, this evolution doesn’t mean that monitoring infrastructure systems is no longer required; it means that the two paradigms must work in tandem.

This article discusses the three key pillars of observability, the purpose each serves in observing distributed systems, their use cases, and popular open-source tools for each. We also consider recommended practices for administering an o11y framework efficiently.

Key Pillars of O11y

Observability relies on key insights to determine how the system works, why it behaves the way it does, and how changes can be made to improve its performance. These insights collectively help describe inherent issues with system performance and are categorized as the three pillars of observability.

| Key Pillar | Description | Sample Types | Popular Open-Source Tools |
| --- | --- | --- | --- |
| Metrics | A metric is a numeric representation of system attributes measured over a period of time. Metrics record time-series data to detect system vulnerabilities, analyze system behavior, and model historical trends for security and performance optimization. | CPU utilization | Prometheus |
| Event Logs | A log is an immutable record of an event that occurs at any point in the request life cycle. Logs enable granular debugging by providing detailed insights about an event, such as configuration failures or resource conflicts among an application’s components. | Disk space warnings | ELK Stack |
| Distributed Traces | A trace is a representation of the request journey through different components within the application stack. Traces provide an in-depth look into the program’s data flow and progression, allowing developers to identify bottlenecks for improved performance. | OS instrumentation traces | Jaeger |

Administering Observability With Key Pillars

The pillars of observability are essential indicators that measure external outputs to record and analyze the internal state of a system. This information can be used to reconstruct the state of the application’s infrastructure with enough detail to analyze and optimize software processes.

Metrics

Metrics are numerical representations of performance and security data measured at specific time intervals. These time-series measurements help inspect the performance and health of distributed systems at the component level. Site reliability engineers (SREs) commonly combine metrics with predictive modeling to determine the overall state of the system and to project the changes needed to improve cluster performance. Because numeric values are compact and cheap to store, compress, process, and retrieve, metrics allow longer retention of performance data and are well suited to historical trend analysis.
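As a rough illustration of why metrics are cheap to retain, the Python sketch below reduces raw request events to one summary per fixed interval. The event format, the 60-second interval, and the function name `to_time_series` are assumptions made for this example; it is not how any particular metrics backend stores data.

```python
from collections import defaultdict


def to_time_series(events, interval_s=60):
    """Aggregate raw (timestamp_s, latency_ms) events into fixed-interval buckets.

    Each bucket keeps only a count and a running sum, so storage grows with the
    number of intervals rather than with the number of raw events.
    """
    buckets = defaultdict(lambda: [0, 0.0])  # bucket start -> [count, latency sum]
    for ts, latency_ms in events:
        start = int(ts // interval_s) * interval_s
        buckets[start][0] += 1
        buckets[start][1] += latency_ms
    # One (bucket_start, request_count, mean_latency_ms) sample per interval, in time order.
    return [(start, count, total / count) for start, (count, total) in sorted(buckets.items())]


raw_events = [(0.5, 120.0), (12.0, 80.0), (59.9, 100.0), (61.0, 200.0)]
print(to_time_series(raw_events))  # [(0, 3, 100.0), (60, 1, 200.0)]
```

Four raw events collapse into two samples here; at production request rates the reduction is far larger.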

Factors to Consider Before Using Metrics for Observing Distributed Systems

- First, consider the amount of data the system generates and whether it can be collected and processed in a timely manner. If it cannot, aggregated metrics can provide a more manageable and accurate picture than raw data. Collecting granular data can help resolve issues quickly, but it also increases storage and processing load. To avoid this, capture only the data that will give you valuable insights over time instead of collecting and hoarding data that will never be used.

- Another factor to consider is the level of granularity required for the observability metric. For example, if you are trying to detect problems with a specific component of the system, you will need metrics specific to that component. On the other hand, if you are trying to get a general sense of how the system is performing, then less specific metrics, such as system metrics for garbage collection or CPU usage, may be sufficient.

- Finally, assess how long you need data for observability purposes. In some cases, real-time data is necessary to detect and fix problems quickly. In others, collecting data over longer periods to build trends and identify potential issues is sufficient. For example, a sudden spike in CPU utilization could indicate that something is wrong and warrant immediate attention, whereas a brief spike in memory usage may matter less than its long-term trend.
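The trade-off between real-time detection and longer-term trends can be sketched by comparing a short, recent window of samples with the longer baseline that precedes it. The window sizes, the 1.5 times ratio, and the CPU values below are arbitrary assumptions for illustration, not recommended settings.

```python
from statistics import mean


def classify(samples, short_window=3, long_window=12, spike_ratio=1.5):
    """Compare the most recent samples with the baseline that precedes them.

    Assumes short_window < long_window. Returns "spike" if the short-window
    average exceeds `spike_ratio` times the baseline average, "normal" otherwise,
    and "insufficient data" if there are fewer than `long_window` samples.
    """
    if len(samples) < long_window:
        return "insufficient data"
    recent = mean(samples[-short_window:])
    baseline = mean(samples[-long_window:-short_window])
    return "spike" if recent > spike_ratio * baseline else "normal"


cpu_percent = [20, 22, 21, 19, 20, 23, 22, 21, 20, 22, 85, 90]
print(classify(cpu_percent))  # spike
```

The short window reacts quickly to sudden changes, while the baseline requires a longer history, which is why the retention period you choose constrains which kinds of problems you can detect.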

Limitations of Metrics

- Metrics are system-scoped, meaning they can only track what is happening inside a system. For example, an error rate metric can only track the number of errors generated by the system and does not give insights that may help determine why the errors are occurring. This is why log entries and traces are used to complement metrics and help isolate the root causes of problems.

- Using metric labels with high cardinality degrades system performance. For example, a distributed microservice architecture can produce millions of unique time series, one for each combination of label values. In such cases, high metric cardinality can quickly overwhelm the monitoring system with the volume of data it must process and store.
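To see how quickly cardinality grows, note that each unique combination of label values is a separate time series, so the worst-case series count for one metric is the product of the number of distinct values per label. The label names and counts below are hypothetical.

```python
from math import prod


def series_count(label_values):
    """Worst-case number of time series for one metric: one series per
    combination of label values, i.e. the product of each label's cardinality."""
    return prod(len(values) for values in label_values.values())


labels = {
    "service": [f"svc-{i}" for i in range(20)],
    "endpoint": [f"/api/{i}" for i in range(10)],
    "status_code": ["200", "400", "404", "500", "503"],
}
print(series_count(labels))  # 1000

# Adding an unbounded label, such as a user ID, multiplies the total.
labels["user_id"] = [f"user-{i}" for i in range(10_000)]
print(series_count(labels))  # 10000000
```

Not every combination occurs in practice, but the product shows why unbounded values such as user IDs are usually kept out of metric labels and recorded in logs or traces instead.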

Using Prometheus for Metrics Collection and Monitoring

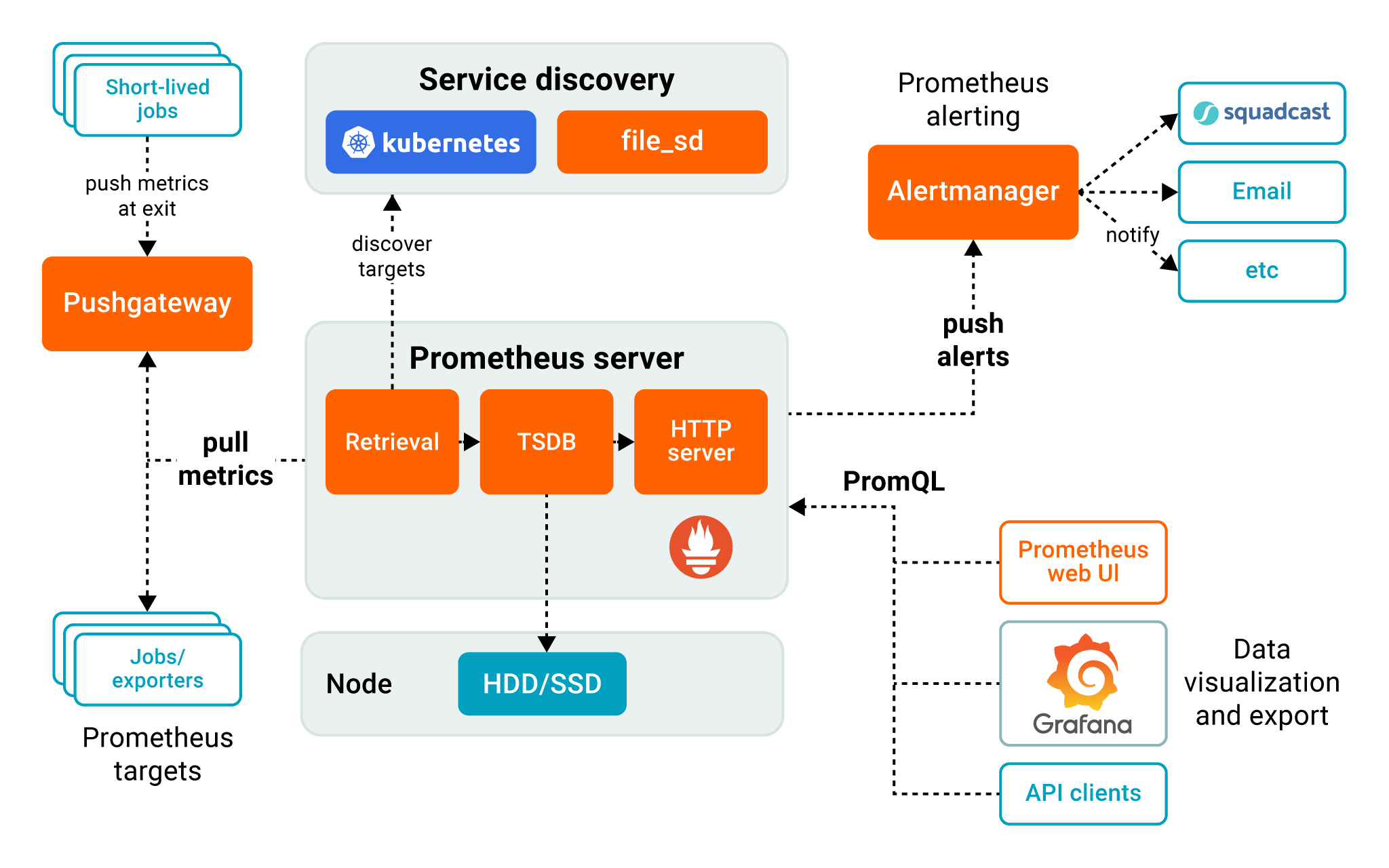

- Prometheus is a popular open-source alerting platform that collects metrics as time-series data. The toolkit uses a multi-dimensional data model that identifies metrics data using key-value pairs. Prometheus also supports multiple modes for graphing and dashboarding, enhancing observability through metrics visualization.

- Prometheus is used across a wide range of system complexities and use cases, including operating system monitoring, metrics collection for containers, distributed cluster monitoring, and service monitoring.

Prometheus components (Image source)
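To make the multi-dimensional data model described above concrete, the sketch below hand-renders a counter in the Prometheus text exposition format, where each distinct set of labels produces its own line and its own time series. In practice an official client library generates this output; the helper `render_counter`, the metric name, and the sample values here are hypothetical.

```python
def render_counter(name, help_text, samples):
    """Render one counter in the Prometheus text exposition format.

    `samples` maps a tuple of (label, value) pairs to the counter value, so
    each distinct label set becomes its own time series.
    """
    def escape(value):
        # Label values escape backslash, double quote, and newline.
        return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")

    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        label_str = ",".join(f'{key}="{escape(val)}"' for key, val in labels)
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


text = render_counter(
    "http_requests_total",
    "Total HTTP requests.",
    {
        (("method", "GET"), ("status", "200")): 1027,
        (("method", "POST"), ("status", "500")): 3,
    },
)
print(text)
```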

Key Features of Prometheus Include the Following:

- Autonomous single-server nodes, eliminating the need for distributed storage

- Flexible metrics querying and analysis through the PromQL query language

- Deep integration with cloud-native tools for holistic observability setup

- Seamless service discovery of distributed services
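PromQL's `rate()` function returns the per-second average rate of increase of a counter over a range, as in `rate(http_requests_total[5m])`. The Python sketch below approximates that calculation for illustration only: the helper `simple_rate` is hypothetical, and unlike PromQL it does not extrapolate to the edges of the range.

```python
def simple_rate(samples):
    """Per-second increase of a counter over a window of (timestamp_s, value) samples.

    Handles counter resets (a drop means the process restarted and the counter
    began again from zero) but does not extrapolate to the window boundaries.
    """
    if len(samples) < 2:
        return 0.0
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        # After a reset, the new value itself is the increase since the reset.
        increase += curr - prev if curr >= prev else curr
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed


# The counter resets between t=30 and t=45 (160 -> 10).
window = [(0, 100), (15, 130), (30, 160), (45, 10), (60, 40)]
print(simple_rate(window))  # 100 increments over 60 s, about 1.67 per second
```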

Event Logs

A log is an unchangeable, timestamped record of events within a distributed system of multiple components. Logs are records of events, errors, and warnings the application generates throughout its life cycle. These records are captured in plain text, binary, or JSON files as structured or unstructured data, and include contextual information associated with the event (such as a client endpoint) for efficient debugging.

Since failures in large-scale deployments rarely arise from one specific event, logs allow SREs to start with a symptom, infer the request life cycle across the software, and iteratively analyze interactions among different parts of the system. With effective logging, SREs can determine possible triggers and components involved in a performance or reliability issue.
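The following sketch shows, in simplified form, how correlated log records let an SRE work backward from a symptom. It assumes every service writes a shared `request_id` field (a hypothetical field name) so that one request's events can be gathered and ordered across services.

```python
# Hypothetical log records from three services; each carries a shared request_id.
logs = [
    {"ts": 3.2, "service": "payments", "request_id": "r1", "msg": "card declined"},
    {"ts": 1.0, "service": "gateway", "request_id": "r1", "msg": "POST /checkout"},
    {"ts": 1.1, "service": "gateway", "request_id": "r2", "msg": "GET /health"},
    {"ts": 2.4, "service": "orders", "request_id": "r1", "msg": "order created"},
]


def request_timeline(records, request_id):
    """Return every record for one request, ordered by timestamp."""
    return sorted((r for r in records if r["request_id"] == request_id), key=lambda r: r["ts"])


# Start from the symptom ("card declined" in request r1) and replay the request's path.
for event in request_timeline(logs, "r1"):
    print(f'{event["ts"]:>4} {event["service"]:<9} {event["msg"]}')
```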

Factors to Consider Before Using Event Logs for Observing Distributed Systems

- The first consideration is the type of system you are operating, since event logs may be more useful in some systems than in others. For example, they may be especially helpful in a system with numerous microservices, where it can be difficult to understand the relationship between events occurring in different services.

- Another factor is the type of event you are interested in observing. Some events may be more important than others and warrant closer scrutiny via event logs. For example, if you are interested in understanding how users are interacting with your system, then events related to user interaction (such as login attempts) would be more important than other event types.

- Finally, consider the scale of your system when deciding when to use event logs for observability. A large and complex system may generate a huge volume of events, making it impractical and financially unviable to log them all. In such cases, identify the most critical events and focus on only logging those.
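One way to focus on critical events is a filter in the logging pipeline. The sketch below uses Python's standard `logging` module to keep records at WARNING and above, plus any record tagged with a critical event type; the `event_type` attribute and the set of critical types are assumptions for this example.

```python
import logging

# Hypothetical event types that this system treats as critical.
CRITICAL_EVENT_TYPES = {"login_attempt", "payment_failure"}


class CriticalEventFilter(logging.Filter):
    """Pass WARNING-and-above records, plus lower-level records tagged with a critical event type."""

    def filter(self, record):
        event_type = getattr(record, "event_type", None)
        return record.levelno >= logging.WARNING or event_type in CRITICAL_EVENT_TYPES


logger = logging.getLogger("app")
logger.setLevel(logging.DEBUG)
handler = logging.StreamHandler()
handler.addFilter(CriticalEventFilter())
logger.addHandler(handler)

logger.info("cache hit", extra={"event_type": "cache_lookup"})          # dropped
logger.info("user signed in", extra={"event_type": "login_attempt"})    # kept
logger.warning("disk usage above 90 percent")                          # kept
```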

Limitations of Logs

- Archiving event logs requires extensive investment in storage infrastructure

- Log files only capture information that the logging toolkit has been configured to record

- Without appropriate indexing, log data is difficult to sort, filter, and search
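Indexing is what makes large log volumes searchable. Elasticsearch, for example, is built on Apache Lucene, which uses inverted indexes that map each term to the documents containing it. The toy inverted index below illustrates the idea only; it is not how Elasticsearch is implemented, and the log lines are hypothetical.

```python
import re
from collections import defaultdict


def build_index(lines):
    """Map each lowercase token to the set of line numbers that contain it."""
    index = defaultdict(set)
    for line_no, line in enumerate(lines):
        for token in re.findall(r"[a-z0-9_]+", line.lower()):
            index[token].add(line_no)
    return index


def search(index, *terms):
    """Return the line numbers that contain every term (an AND query)."""
    sets = [index.get(term.lower(), set()) for term in terms]
    return sorted(set.intersection(*sets)) if sets else []


log_lines = [
    "ERROR payment timeout order=17",
    "INFO order created order=17",
    "ERROR inventory timeout sku=42",
]
idx = build_index(log_lines)
print(search(idx, "error", "timeout"))    # [0, 2]
print(search(idx, "timeout", "payment"))  # [0]
```

Each query is answered with set lookups instead of a scan over every line, which is the property that keeps search fast as log volume grows.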

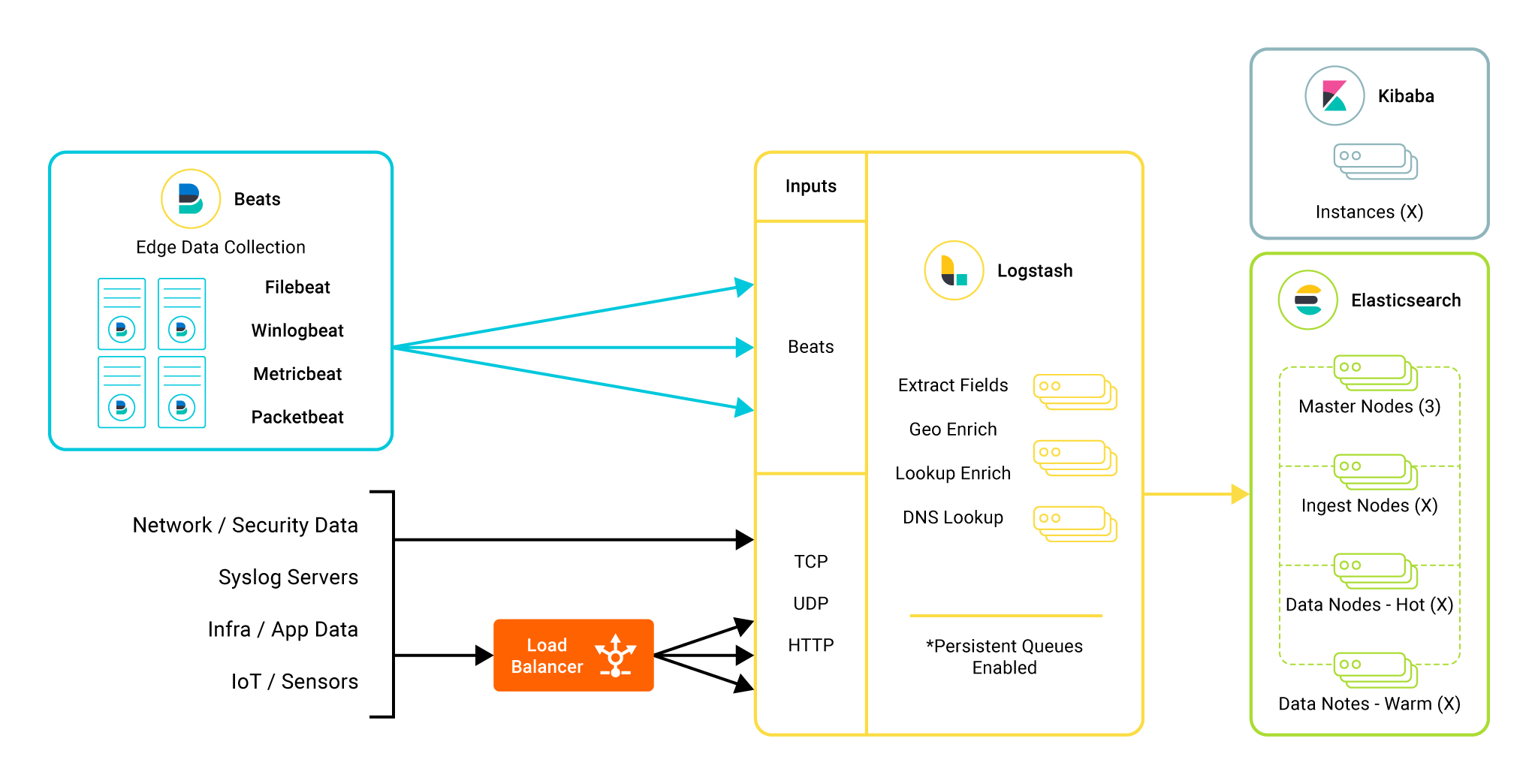

Using the ELK Stack for Logging

Popularly known as the Elastic Stack, the ELK Stack enables SREs to aggregate logs from distributed systems then analyze and visualize them for quicker troubleshooting, analysis, and monitoring. The stack consists of three open-source projects with initials that spell the acronym ELK:

- ElasticSearch: A NoSQL search and analytics engine that enables the aggregation, sorting, and analysis of logs

- LogStash: An ingestion tool that collects and parses log data from disparate sources of a distributed system

- Kibana: A data exploration and visualization tool enabling a graphical review of log events

Elastic Stack components (Image source)
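Logstash typically parses unstructured lines into named fields, for example with its grok filter, which builds on regular expressions. The Python sketch below mimics that idea with a named-group regular expression; the line format and field names are assumptions for this example, not a Logstash configuration.

```python
import re

# Hypothetical pattern for lines such as:
# 2024-05-01T12:00:03Z ERROR [orders] Timeout calling payments after 3000ms
LINE_PATTERN = re.compile(
    r"(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+\[(?P<service>[^\]]+)\]\s+(?P<message>.*)"
)


def parse_line(line):
    """Turn one unstructured log line into a dict of named fields, or None if it does not match."""
    match = LINE_PATTERN.match(line)
    return match.groupdict() if match else None


print(parse_line("2024-05-01T12:00:03Z ERROR [orders] Timeout calling payments after 3000ms"))
```

Once lines are split into fields, each field can be indexed and filtered separately, for example by `level` or `service`.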

While each of the tools can be used independently, they are commonly used together to support use cases that require log monitoring for actionable insights. Some popular use cases of the ELK Stack include application performance monitoring (APM), business information analytics, security and compliance administration, and log analysis.

Key Features of the ELK Stack Include the Following:

- A highly available, distributed search engine that supports near real-time search

- Real-time log data analysis and visualization

- Native support for several programming languages and development frameworks

- Multiple hosting options

- Centralized logging capabilities

Distributed Traces

A trace represents a request as it flows through the components of an application stack. Traces record how long each component takes to process a request and pass it to the next component, and they offer insights into the root causes of errors. A trace lets SREs observe the structure of a request and the path it takes. In distributed systems, traces allow complete analysis of a request’s life cycle, making it possible to debug events that span multiple components. Traces can be used to monitor performance, understand bottlenecks, find errors, and diagnose problems. They can also validate assumptions about a system’s behavior and generate hypotheses about potential problems.
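The sketch below models a trace as a set of spans linked by parent IDs, prints the request's path through the services, and picks the span with the most self time (its duration minus that of its direct children) as a likely bottleneck. The `Span` fields and timings are hypothetical, and the self-time calculation assumes a span's children do not overlap.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]  # None for the root span
    service: str
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def print_tree(spans, parent_id=None, depth=0):
    """Print spans as an indented tree, siblings ordered by start time."""
    children = sorted((s for s in spans if s.parent_id == parent_id), key=lambda s: s.start_ms)
    for span in children:
        print(f"{'  ' * depth}{span.service}: {span.duration_ms:.0f} ms")
        print_tree(spans, span.span_id, depth + 1)


def self_time_ms(span, spans):
    """Span duration minus its direct children's durations (assumes children don't overlap)."""
    return span.duration_ms - sum(s.duration_ms for s in spans if s.parent_id == span.span_id)


trace = [
    Span("a", None, "gateway", 0, 250),
    Span("b", "a", "orders", 10, 230),
    Span("c", "b", "inventory", 20, 60),
    Span("d", "b", "payments", 70, 220),
]
print_tree(trace)
bottleneck = max(trace, key=lambda s: self_time_ms(s, trace))
print("bottleneck:", bottleneck.service)  # bottleneck: payments
```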

Factors to Consider Before Using Traces to Observe Distributed Systems

The decision to use tracing, and the extent of its implementation, depends on a variety of factors specific to each system.

- Diligently analyze the size and complexity of the system, number of users, types of workloads being run, and how often changes are made to the system. In general, it’s advisable to use tracing whenever possible, as it can provide invaluable insights into the workings of a distributed system.

- Identifying the target endpoints is crucial, but traces can be expensive to collect and store, and they can add significant overhead that affects overall performance. Check whether the benefits outweigh the costs.

- Consider the privacy implications of collecting and storing traces. In some cases, traces may contain sensitive information about users or systems that should not be publicly accessible.
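A common mitigation is to scrub sensitive attributes before spans leave the service. The sketch below drops or pseudonymizes values for a hypothetical list of sensitive keys; the key names and the choice of truncated SHA-256 hashing are assumptions for illustration.

```python
import hashlib

# Hypothetical attribute keys treated as sensitive in this example.
SENSITIVE_KEYS = {"user.email", "card.number", "auth.token"}
# Sensitive keys whose values are replaced by a digest rather than removed outright.
HASHED_KEYS = {"user.email"}


def redact(attributes):
    """Return a copy of span attributes that is safer to export.

    Sensitive values are replaced with "[REDACTED]", except keys in HASHED_KEYS,
    which keep a truncated SHA-256 digest so spans can still be grouped by user.
    An unsalted digest is pseudonymization, not anonymization.
    """
    cleaned = {}
    for key, value in attributes.items():
        if key not in SENSITIVE_KEYS:
            cleaned[key] = value
        elif key in HASHED_KEYS:
            cleaned[key] = hashlib.sha256(str(value).encode()).hexdigest()[:16]
        else:
            cleaned[key] = "[REDACTED]"
    return cleaned


span_attrs = {
    "http.route": "/checkout",
    "user.email": "a@example.com",
    "card.number": "4111111111111111",
}
print(redact(span_attrs))
```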

Trace Limitations

- All components in the request path should be individually configured to propagate trace data

- Configuring instrumentation and sampling adds complexity and overhead

- Tracing is costly and resource-intensive
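Propagation usually means forwarding a context header on every outgoing call. One widely supported format is the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`), which OpenTelemetry uses by default. The sketch below parses an incoming header and builds the one a service would forward downstream; it handles only version `00`, and the helper names are hypothetical.

```python
import re
import secrets

# version 00, 32-hex trace ID, 16-hex parent span ID, 2-hex flags
TRACEPARENT = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


def parse_traceparent(header):
    """Return (trace_id, parent_span_id, sampled) or None if the header is invalid."""
    match = TRACEPARENT.fullmatch(header)
    if not match:
        return None
    trace_id, parent_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None  # all-zero IDs are invalid
    return trace_id, parent_id, bool(int(flags, 16) & 0x01)


def child_traceparent(incoming):
    """Build the header to forward downstream: same trace ID, new span ID."""
    parsed = parse_traceparent(incoming)
    if parsed is None:
        # No valid upstream context: start a new trace (sampled, in this sketch).
        trace_id, sampled = secrets.token_hex(16), True
    else:
        trace_id, _, sampled = parsed
    span_id = secrets.token_hex(8)  # this service's span becomes the downstream parent
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
outgoing = child_traceparent(incoming)
print(outgoing)
```

Every service in the request path has to perform this step, which is why a single uninstrumented component breaks the trace.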

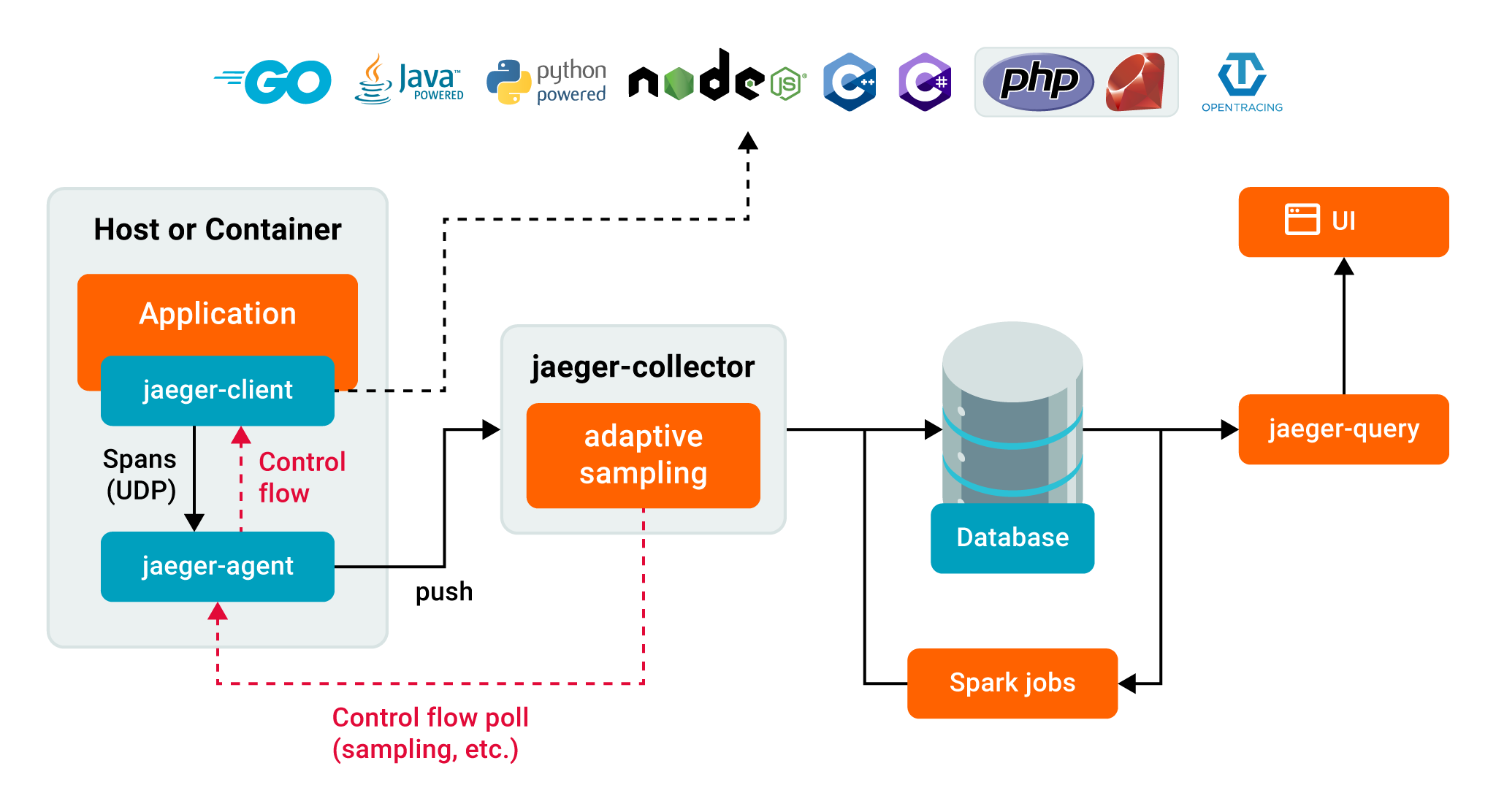

Jaeger for Distributed Tracing

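Jaeger is an open-source tracing tool used to monitor and trace transactions among distributed services. Jaeger originally provided client instrumentation libraries based on the OpenTracing API for several languages, including Go, Java, Node.js, Python, C++, and C#; those clients have since been retired, and the Jaeger project now recommends instrumenting applications with OpenTelemetry.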

While Jaeger offers in-memory storage for testing setups, SREs can connect the trace data pipeline to back ends such as Elasticsearch or Cassandra for storage. The Jaeger console is a feature-rich user interface that allows SREs to visualize distributed traces and develop dependency graphs for end-to-end tracing of microservices and other granular components of distributed systems. Popular Jaeger use cases include distributed transaction monitoring, root cause analysis, distributed context propagation, and performance optimization of containers.

Key Features of Jaeger Include the Following:

- A feature-rich user interface

- Easy installation and use

- Flexibility in configuring the storage back end
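The dependency graphs mentioned above can be derived from trace data: whenever a span's parent belongs to a different service, that link represents one call from the parent's service to the child's. The sketch below counts those edges for a handful of hypothetical spans; it illustrates the idea and is not Jaeger's implementation.

```python
from collections import Counter

# Hypothetical spans from two traces: (span_id, parent_span_id, service)
spans = [
    ("1", None, "frontend"),
    ("2", "1", "orders"),
    ("3", "2", "inventory"),
    ("4", "2", "payments"),
    ("5", "1", "orders"),
    ("6", "5", "inventory"),
]


def dependency_edges(spans):
    """Count caller -> callee service calls by linking each span to its parent span."""
    service_of = {span_id: service for span_id, _, service in spans}
    edges = Counter()
    for _, parent_id, service in spans:
        parent_service = service_of.get(parent_id)
        if parent_service and parent_service != service:
            edges[(parent_service, service)] += 1
    return edges


for (caller, callee), calls in sorted(dependency_edges(spans).items()):
    print(f"{caller} -> {callee}: {calls}")
```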

Using Logs, Metrics, and Traces Together in O11y

Distributed systems are inherently complex and often rely on different moving parts working together. While logs, metrics, and traces serve unique purposes for o11y, their goals often overlap; they’re best used together for comprehensive visibility.

In most cases, issue identification starts with metrics, which reveal that an event is affecting system performance or compromising security. Once the event is detected, logs provide detailed information about its cause through the errors, warnings, and exceptions generated by endpoints. Because system events often span multiple services, tracing is then used to identify the components and paths responsible for, or affected by, the event. Used together, the three pillars provide comprehensive visibility, leading to improved system stability and availability.
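The workflow above can be sketched as three lookups over hypothetical, simplified telemetry: find when a metric crossed a threshold, collect error logs from shortly after that point, and follow the trace IDs those logs carry. The sketch assumes log entries include a `trace_id` field, a common correlation practice; all field names, thresholds, and data are assumptions for illustration.

```python
def investigate(error_rate, logs, spans, threshold=0.1, window_s=120):
    """Walk from a metric anomaly to related logs and then to trace paths.

    error_rate: {timestamp_s: error ratio}; logs: dicts with ts, level, trace_id;
    spans: {trace_id: [services on the request path]}. Returns None if no anomaly.
    """
    # 1. Metrics: when did the error rate first cross the threshold?
    alert_ts = min((ts for ts, rate in error_rate.items() if rate > threshold), default=None)
    if alert_ts is None:
        return None
    # 2. Logs: error entries shortly after the alert, and the trace IDs they carry.
    trace_ids = {
        rec["trace_id"]
        for rec in logs
        if rec["level"] == "ERROR" and alert_ts <= rec["ts"] < alert_ts + window_s
    }
    # 3. Traces: which services did each affected request pass through?
    return alert_ts, {tid: spans.get(tid, []) for tid in sorted(trace_ids)}


error_rate = {100: 0.01, 160: 0.02, 220: 0.31, 280: 0.29}
logs = [
    {"ts": 225, "level": "ERROR", "trace_id": "t-9", "msg": "payment gateway timeout"},
    {"ts": 230, "level": "INFO", "trace_id": "t-7", "msg": "order created"},
    {"ts": 284, "level": "ERROR", "trace_id": "t-9", "msg": "retry exhausted"},
]
spans = {"t-9": ["gateway", "orders", "payments"], "t-7": ["gateway", "orders"]}
print(investigate(error_rate, logs, spans))
```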

Best Practices for Comprehensive Observability

Although organizations may choose to adopt different practices to suit their use cases, an o11y framework relies on the following recommended practices for efficiency.

Commit to One Tool for Simpler Management

Centralizing repositories of metrics and log data from various sources helps simplify the observability of distributed systems. With an aggregated repository, cross-functional teams can collaborate to recognize patterns, detect anomalies, and produce contextual analysis for faster remediation.

Optimize Log Data

Log data should be organized for storage efficiency and fast extraction. Besides reducing the time and effort required for log analysis, well-organized logs help developers and SREs decide which metrics need tracking. Log entries should be formatted for easy correlation: they need to be structured and accessible, and should include key parameters for detecting component-level anomalies, such as:

- User ID

- Session ID

- Timestamps

- Resource usage
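A minimal way to produce such entries with Python's standard `logging` module is a custom formatter that emits one JSON object per line. The field names `user_id`, `session_id`, `cpu_ms`, and `memory_mb` are hypothetical stand-ins for the parameters listed above, supplied per call through `extra`.

```python
import json
import logging
import time


class JsonFormatter(logging.Formatter):
    """Format each log record as a single-line JSON object."""

    # Hypothetical correlation fields, attached per call via `extra=`.
    CONTEXT_FIELDS = ("user_id", "session_id", "cpu_ms", "memory_mb")

    def format(self, record):
        entry = {
            # UTC timestamp with millisecond precision, e.g. 2024-05-01T12:00:03.123Z
            "timestamp": time.strftime("%Y-%m-%dT%H:%M:%S", time.gmtime(record.created))
            + f".{int(record.msecs):03d}Z",
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        for field in self.CONTEXT_FIELDS:
            if hasattr(record, field):
                entry[field] = getattr(record, field)
        return json.dumps(entry)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info(
    "order placed",
    extra={"user_id": "u-123", "session_id": "s-456", "cpu_ms": 12, "memory_mb": 87},
)
```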

Prioritize Data Correlation for Context Analysis

Correlating metrics, logs, and traces through shared context, such as timestamps and user or session IDs, lets teams connect symptoms to causes instead of examining each signal in isolation. Combined with historical data, this correlation supports heuristics and pattern recognition that enable early detection of issues that could degrade performance.

Use Deployment Markers for Distributed Tracing

Deployment markers are time-based visual indicators of deployment events in an application’s timeline. Use them to associate code changes with their effects on performance metrics, which makes it easier to visualize performance and identify optimization opportunities. Deployment markers also add context to aggregated logs and component catalogs, which act as centralized resources for distributed teams to monitor cluster health and trace root causes.
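The value of a deployment marker is that it provides a precise point in time to compare against. The sketch below compares a metric's average in equal windows before and after a hypothetical deployment timestamp; the metric, the values, and the 10-minute window are assumptions, and a real comparison would also account for normal variance.

```python
from statistics import mean


def deployment_impact(samples, deploy_ts, window_s=600):
    """Compare a metric's mean in equal windows before and after a deployment marker.

    samples: list of (timestamp_s, value). Returns (before_mean, after_mean,
    change_pct), or None if either window has no samples.
    """
    before = [v for ts, v in samples if deploy_ts - window_s <= ts < deploy_ts]
    after = [v for ts, v in samples if deploy_ts <= ts < deploy_ts + window_s]
    if not before or not after:
        return None
    before_mean, after_mean = mean(before), mean(after)
    change_pct = (after_mean - before_mean) / before_mean * 100 if before_mean else None
    return before_mean, after_mean, change_pct


p95_latency_ms = [(0, 200), (300, 210), (600, 205), (900, 260), (1200, 255)]
print(deployment_impact(p95_latency_ms, deploy_ts=600))
```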

Implement Dynamic Sampling

Distributed systems produce large amounts of observability data. Collecting and retaining all of it often leads to system degradation, tedious analysis, and inflated costs. To avoid this, curate the data being collected by sampling it rather than retaining everything, and adjust sample rates over time and with traffic volume. Sampled traces, logs, and metrics help optimize resource usage while still supporting pattern discovery.
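One way to make sampling dynamic is to vary the sample rate with traffic volume and event importance. The sketch below, a hypothetical policy rather than any specific tool's algorithm, keeps every server error, samples high-volume successful traffic at 1 in 10 or 1 in 100, and records the rate on each kept event so that counts can be re-weighted later.

```python
import hashlib


def keep_trace(trace_id, rate):
    """Deterministic 1-in-`rate` decision keyed on the trace ID, so every service
    that sees the same trace makes the same choice and kept traces stay complete."""
    bucket = int(hashlib.sha256(trace_id.encode()).hexdigest()[:8], 16)
    return bucket % rate == 0


def sample_rate(status_code, requests_per_min):
    """Hypothetical policy: keep all server errors; sample busy, healthy traffic harder."""
    if status_code >= 500:
        return 1
    if requests_per_min > 10_000:
        return 100
    if requests_per_min > 1_000:
        return 10
    return 1


def decide(trace_id, status_code, requests_per_min):
    rate = sample_rate(status_code, requests_per_min)
    if not keep_trace(trace_id, rate):
        return None
    # Store the rate with the kept event so totals can be re-weighted at query time.
    return {"trace_id": trace_id, "status": status_code, "sample_rate": rate}


decisions = (decide(f"trace-{i}", 200, 50_000) for i in range(10_000))
kept = [d for d in decisions if d is not None]
estimated_total = sum(d["sample_rate"] for d in kept)
print(len(kept), estimated_total)  # roughly 100 kept, estimating roughly 10,000 requests
```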

Set Up Alerts for Critical Events

To avoid alert overload and ensure that site reliability engineers respond only to meaningful alerts, calibrate alert thresholds so that they accurately flag significant changes in system state. A severity-based alerting mechanism distinguishes critical errors from warnings based on conditional factors, allowing developers and security professionals to prioritize remediation.
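The sketch below shows one simple severity-based scheme: each rule pairs a severity with a threshold and the number of consecutive evaluations for which the condition must hold, similar in spirit to the `for` clause in Prometheus alerting rules. The thresholds and error-rate series are hypothetical.

```python
# Hypothetical rules, ordered from most to least severe:
# (severity, error-rate threshold, consecutive evaluations required)
RULES = [
    ("critical", 0.05, 3),
    ("warning", 0.01, 5),
]


def evaluate(error_rates):
    """Return the most severe level whose threshold was exceeded in each of the
    most recent `required` evaluations, or None if no rule fires."""
    for severity, threshold, required in RULES:
        recent = error_rates[-required:]
        if len(recent) == required and all(rate > threshold for rate in recent):
            return severity
    return None


print(evaluate([0.002, 0.003, 0.02, 0.08, 0.09, 0.07]))  # critical
print(evaluate([0.02, 0.02, 0.03, 0.02, 0.015]))         # warning
print(evaluate([0.001, 0.2, 0.001]))                     # None (a brief blip does not fire)
```

Requiring a condition to persist trades a little detection latency for far fewer alerts on momentary blips.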

Integrate Alerts and Metrics With Automated Incident Response Platforms

Integrating alerts with automated incident response systems is a key DevOps practice that can help improve the efficiency of your organization’s response to critical incidents. By automating the process of routing and responding to alerts, you can help ensure that critical incidents are handled promptly and efficiently while keeping all stakeholders updated.

Automated incident response systems like Squadcast can help streamline your organization’s overall response to critical incidents. The platform adds context to alerts and metrics through payload analysis and event tagging. This approach helps eliminate alert overload, expedites remediation, and shortens mean time to repair (MTTR) by leveraging executable runbooks. Squadcast also helps track all configured SLOs to identify service-level breaches and capture post-mortem notes, helping avoid recurring problems in the future.
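Tracking an SLO usually comes down to error-budget arithmetic. For example, a 99.9% availability target over 30 days (43,200 minutes) allows about 43.2 minutes of unavailability. The sketch below computes the budget, what remains, and how much has been consumed; the target, window, and downtime figures are hypothetical, and this is generic arithmetic, not Squadcast's implementation.

```python
def error_budget(slo_target, window_days, bad_minutes):
    """Error-budget arithmetic for a time-based availability SLO.

    Returns (budget_minutes, remaining_minutes, fraction_consumed); a negative
    remaining value means the SLO has been breached for this window.
    """
    window_minutes = window_days * 24 * 60
    budget = (1 - slo_target) * window_minutes
    return budget, budget - bad_minutes, bad_minutes / budget


budget, remaining, consumed = error_budget(slo_target=0.999, window_days=30, bad_minutes=30)
print(f"budget={budget:.1f} min, remaining={remaining:.1f} min, consumed={consumed:.0%}")
# budget=43.2 min, remaining=13.2 min, consumed=69%
```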

Closing Thoughts

Observability enhances continuous monitoring by enriching performance data, visualizing it, and providing actionable insights to help solve reliability issues. Beyond monitoring application health, o11y mechanisms measure how users interact with the software and track changes and their impact on the system. These insights help developers fine-tune the software for usability and availability, and they support security efforts.

Observing modern applications requires a novel approach that relies on deeper analysis and on aggregating a constant stream of data from distributed services. While it is crucial to inspect the key indicators, it is equally important to adopt the proper practices and efficient tools that support o11y, so that teams can collectively identify what is happening within a system, including its state, behavior, and interactions with other components.