IT Reliability Versus Availability: Examples and Tutorial

Introduction

Reliability is the likelihood of a system or application service failing, whereas availability describes the percentage of time a system or application is expected to operate normally. Reliability is typically expressed by a metric known as mean time between failures (MTBF), measured in minutes, hours, or days. In contrast, availability is represented by a percentage such as 99.9%.

| Concept | Definition | Metric | Example |
|---|---|---|---|
| Reliability | The likelihood of a service failing | MTBF | 20 days, 10 hours, and 12 minutes |
| Availability | The percentage of time a service is operational | Percentage | 99.90% |

Measuring availability is a common and sufficient way to describe the state of a simple website. However, more complex applications have nuances beyond a state of up or down, so other measurements, such as latency and error rate, must augment availability to define their service level indicators (SLIs).

This complexity arises because application services contain multiple features that rely on independent systems accessed from various computing platforms in disparate regions. In this context, service level objectives (SLOs) become a proxy for an application’s utility to its end users, and they are defined in terms of SLIs.

| Example of Service | Typical Measurements of Service Quality |
|---|---|
| Static websites | Availability and reliability |
| Software as a service (SaaS) | SLIs including availability and reliability, but also latency and error rate |

This article explains the difference between reliability and availability, along with the service level agreement (SLA) and SLO nuances involved in calculating them in different scenarios. It also explains why application services require SLIs to augment the basic measurements of availability and reliability.

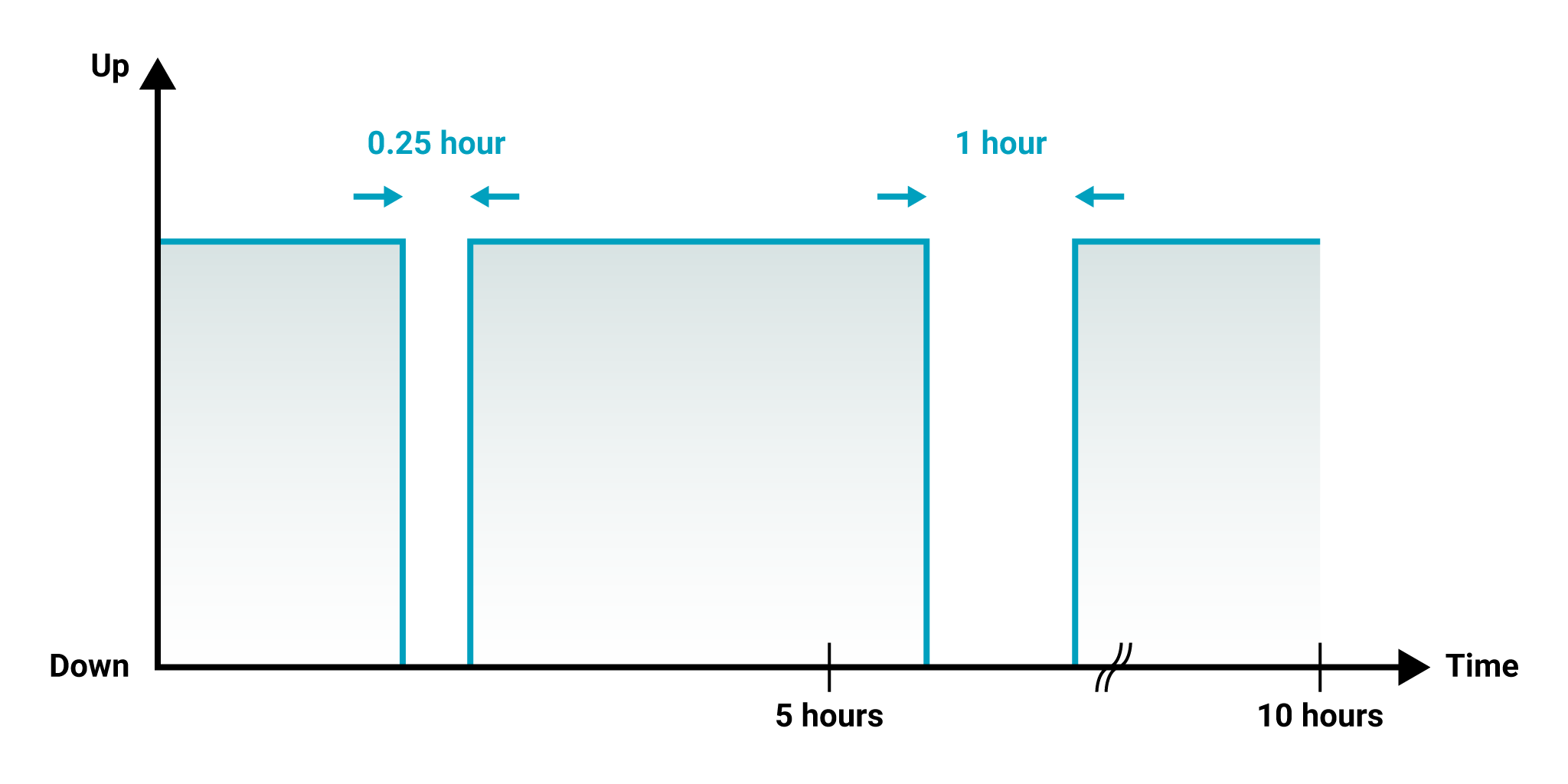

A Simple Example to Distinguish Reliability Versus Availability

Consider a static webpage that has only two possible states: at any given time, it is either fully available or fully unavailable. Now let’s assume that during an observation window of 10 hours, the service sustains two periods of downtime. The first outage takes 15 minutes to remedy, while the second lasts one hour, for a total of 1.25 hours of downtime and 8.75 hours of uptime.

Availability is the uptime divided by the total time, which includes both downtime and uptime. In our example, the calculation works out as follows:

Availability = Uptime / (Downtime + Uptime)

Availability = 8.75 / (1.25 + 8.75)

Availability = 87.5%
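The same calculation can be sketched in a few lines of Python. The function name `availability` and the representation of outages as a list of durations in hours are illustrative choices, and the sketch assumes the outages do not overlap and all fall inside the observation window.

```python
def availability(window_hours: float, outage_hours: list[float]) -> float:
    """Fraction of the observation window during which the service was up.

    Assumes outages do not overlap and all fall inside the window.
    """
    downtime = sum(outage_hours)
    uptime = window_hours - downtime
    return uptime / (uptime + downtime)


# 10-hour window with a 15-minute outage and a 1-hour outage
result = availability(10, [0.25, 1.0])
print(f"{result:.1%}")  # prints 87.5%
```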

Even though reliability is the probability of an application failure, it’s more common in the site reliability engineering (SRE) community to speak in terms of the time that separates outages. For example, if an application has had approximately one service interruption every month over the past few years, then the probability of one occurring next month is high. However, instead of expressing reliability as a probability value, we would say that the mean time between failures is about 30 days.
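One common way to connect the probability view with the MTBF view is to assume a constant failure rate. Under that assumption the time between failures is exponentially distributed, and an MTBF translates into a probability of at least one failure over any horizon. The constant-rate model and the function name below are assumptions made for illustration; real services rarely fail at a perfectly steady rate.

```python
import math


def failure_probability(mtbf_days: float, horizon_days: float) -> float:
    """Probability of at least one failure within horizon_days.

    Assumes a constant failure rate (exponential model), so failures
    arrive as a Poisson process with rate 1 / MTBF.
    """
    return 1 - math.exp(-horizon_days / mtbf_days)


# An MTBF of about 30 days implies roughly a 63% chance of a failure next month
print(round(failure_probability(30, 30), 3))  # prints 0.632
```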

The formula for calculating the MTBF is shown below, followed by a calculation using the data from our earlier example:

Mean Time Between Failures = Uptime / Number of incidents

MTBF = 8.75 / 2 = 4.375 hours or 4 hours and 22.5 minutes
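A minimal Python sketch of the MTBF formula follows, including the conversion from fractional hours into hours and minutes used above. Both helper names are hypothetical.

```python
def mtbf_hours(uptime_hours: float, incidents: int) -> float:
    """Mean time between failures: total uptime divided by the number of incidents."""
    if incidents == 0:
        raise ValueError("MTBF is undefined when no incidents occurred")
    return uptime_hours / incidents


def as_hours_and_minutes(hours: float) -> str:
    """Render fractional hours as 'H hours and M minutes'."""
    whole_hours = int(hours)
    minutes = (hours - whole_hours) * 60
    return f"{whole_hours} hours and {minutes:g} minutes"


value = mtbf_hours(8.75, 2)
print(value, "->", as_hours_and_minutes(value))  # prints 4.375 -> 4 hours and 22.5 minutes
```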

Another metric that goes hand in hand with MTBF is mean time to repair (MTTR), the average time it takes to restore service after an outage. MTBF primarily depends on the application architecture and the reliability of the sub-services supporting the application. By contrast, MTTR depends on the tools, processes, and skills the SRE team uses to fix a problem in real time. The formula is presented below, followed by a calculation using the numbers from our example:

Mean Time To Repair = Downtime / Number of incidents

MTTR = 1.25 / 2 = 0.625 hours or 37.5 minutes
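The MTTR calculation can be sketched the same way. The snippet also checks a relationship that holds with the definitions used here: because MTBF and MTTR divide uptime and downtime by the same number of incidents, MTBF / (MTBF + MTTR) equals the 87.5% availability calculated for this example. The function name is hypothetical.

```python
def mttr_hours(downtime_hours: float, incidents: int) -> float:
    """Mean time to repair: total downtime divided by the number of incidents."""
    return downtime_hours / incidents


mtbf = 8.75 / 2              # 4.375 hours, from the MTBF example
mttr = mttr_hours(1.25, 2)   # 0.625 hours
print(mttr * 60)             # prints 37.5 (minutes)

# Both metrics divide by the same incident count, so availability can
# also be written as MTBF / (MTBF + MTTR).
print(mtbf / (mtbf + mttr))  # prints 0.875
```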

The Impact of MTTR and MTBF on Availability

Even though reliability and availability metrics are interlinked, the availability calculation is influenced only by the collective duration of incidents rather than the number of failures.

For example, 10 incidents lasting one minute each would have far less impact on the availability calculation than one outage lasting two hours.

Using the same 10-hour observation window from our earlier example, the availability in the case of 10 outages lasting one minute each would be 98.33%.

Total downtime = 10 × 1 minute = 10 minutes = 10 / 60 hours ≈ 0.167 hours

Availability = (10 - 0.167) / 10 hours = 0.9833 or 98.33%

The availability in the case of one incident lasting two hours would be 80%.

Availability = (10 - 2) / 10 hours = 0.8 or 80%
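Computing both metrics for the two scenarios shows how they pull in opposite directions. The `summarize` helper below is hypothetical, takes outage durations in minutes, and assumes the outages do not overlap within the 10-hour window.

```python
def summarize(window_hours: float, outage_minutes: list[float]) -> tuple[float, float]:
    """Return (availability, MTBF in hours) for non-overlapping outages.

    Assumes at least one outage, since MTBF is undefined otherwise.
    """
    downtime = sum(outage_minutes) / 60
    uptime = window_hours - downtime
    return uptime / window_hours, uptime / len(outage_minutes)


many_short = summarize(10, [1] * 10)  # ten 1-minute outages
one_long = summarize(10, [120])       # one 2-hour outage

for label, (avail, mtbf) in [("10 x 1 min", many_short), ("1 x 2 h", one_long)]:
    print(f"{label}: availability {avail:.2%}, MTBF {mtbf:.2f} h")
```

The first scenario yields about 98.33% availability but an MTBF of under one hour (about 59 minutes), while the second yields only 80% availability with an MTBF of 8 hours.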

When it comes to adhering to signed SLAs based on availability metrics, recovering from outages quickly is even more critical than avoiding them.

Availability and reliability affect a product’s user experience in different ways. In this example, both scenarios lead to a bad user experience even though the availability in one case is greater than 98%.

A More Complex Example of Service Quality

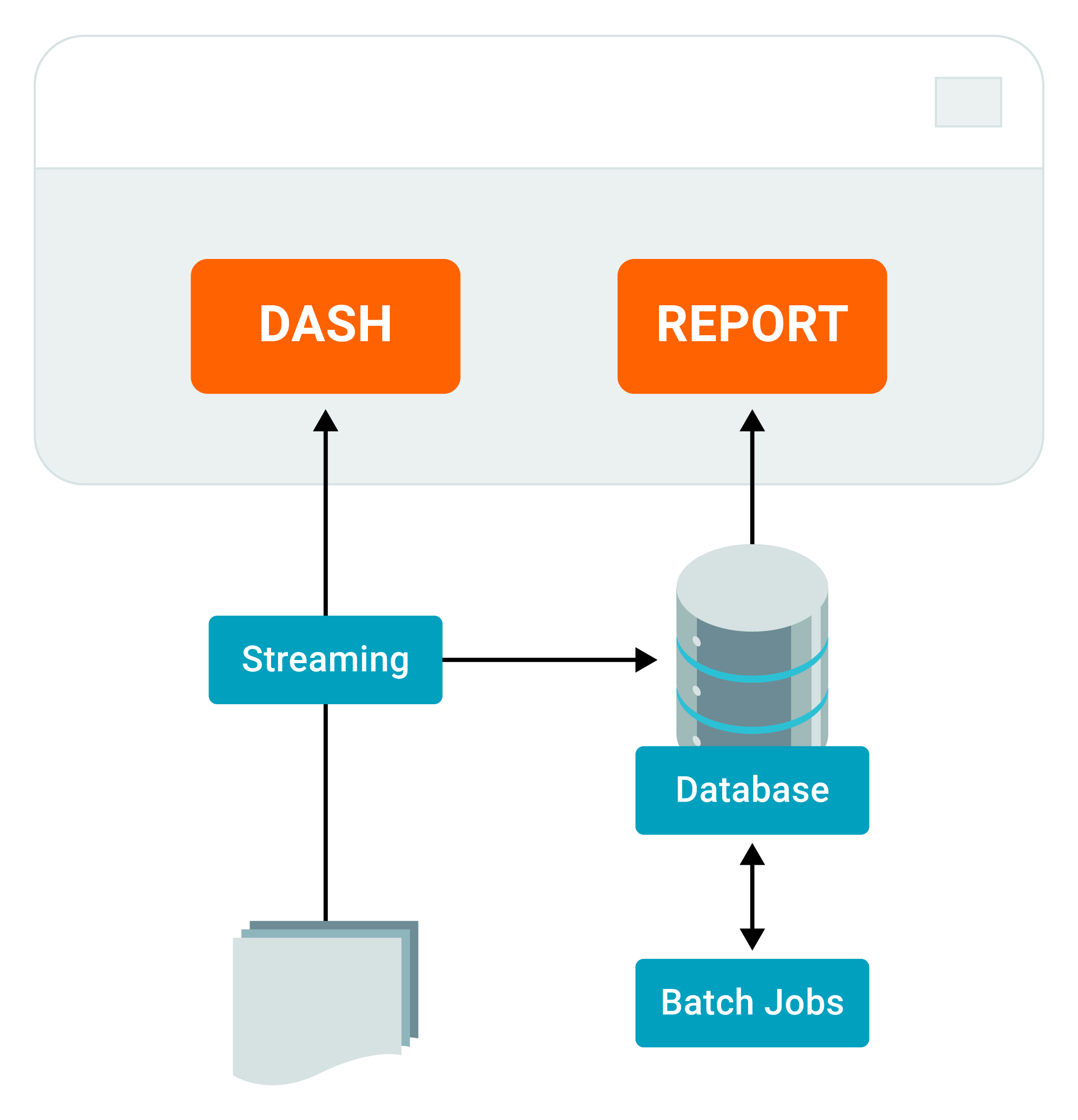

In practice, a single application often has multiple features supported by different sub-services using independent technology stacks. A simple example illustrates this concept. Suppose an application has two user interface components: one displays a real-time dashboard, while the other shows a historical report.

In our example, the real-time dashboard displays data collected from third-party systems and processed by streaming analytics in real time. Meanwhile, the reporting functionality relies on batch processing jobs executed against a relational database. Even though the two components appear in the same user interface, they have different backend dependencies.

In this scenario, if the database suffers an outage, the real-time dashboard will still function, leaving the application partially unavailable. However, the application as a whole, including both the real-time dashboard and historical reporting, won’t be considered available unless both components are up and running.

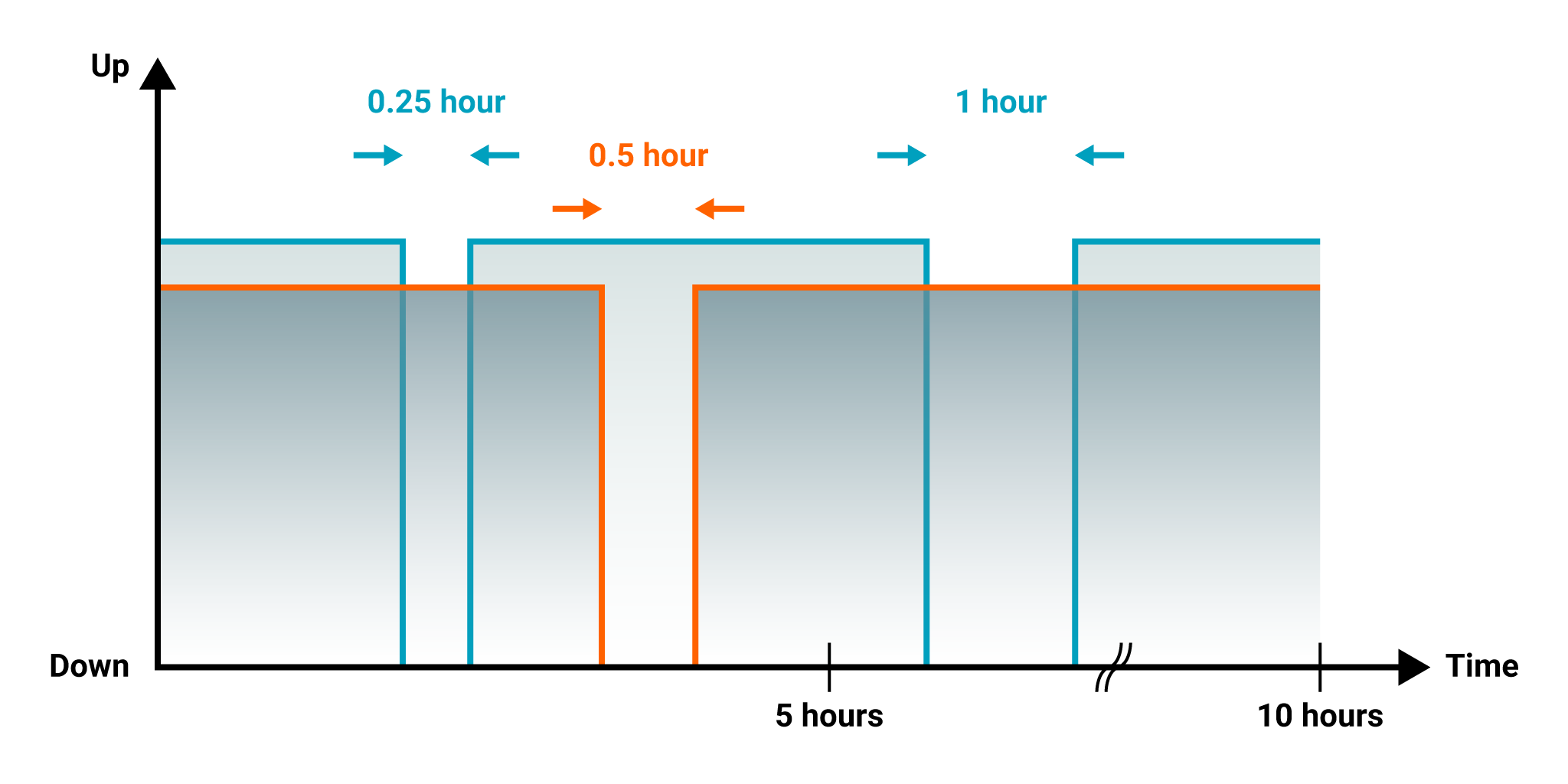

Suppose the real-time dashboard has two outages (shown in red), while the reporting functionality has one outage (shown in blue).

The total downtime for the combined application, made up of the real-time dashboard and the historical reporting components, would be 1.75 hours. The new calculation for the application’s availability would be as follows:

Availability = Uptime / (Downtime + Uptime)

Availability = (10 - 1.75) / (1.75 + 8.25)

Availability = 82.5%

The math for the MTBF must consider all three outages; the new calculation is as follows:

Mean Time Between Failures = Uptime / Number of incidents

MTBF = 8.25 / 3 = 2.75 hours or 2 hours and 45 minutes.
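To derive the combined figures from each component's outages, one approach is to merge the outage intervals first: the application counts as down whenever any component is down, so overlapping outages should not be double-counted. The timestamps below are hypothetical, chosen only so the totals match the example (which states just the 1.75-hour total), and treating each merged interval as one incident is a design choice of this sketch.

```python
def merge_intervals(intervals: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Merge overlapping (start, end) outage intervals, in hours from window start."""
    merged: list[tuple[float, float]] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous outage: extend it instead of counting a new one
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


# Hypothetical outage timestamps (hours into the 10-hour window)
dashboard = [(1.0, 1.25), (5.0, 6.0)]  # two outages, 1.25 h in total
reporting = [(7.0, 7.5)]               # one outage, 0.5 h

window = 10.0
app_outages = merge_intervals(dashboard + reporting)
downtime = sum(end - start for start, end in app_outages)
uptime = window - downtime
print(f"availability {uptime / window:.1%}")         # prints availability 82.5%
print(f"MTBF {uptime / len(app_outages):.2f} hours")  # prints MTBF 2.75 hours
```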

A practical option for handling scenarios where one application has independent areas of functionality would be to measure and report each component’s availability separately.
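Reporting each component separately can be sketched as a per-component loop. The split of the example's downtime between the two components is hypothetical, and so is the `component_report` helper; MTBF is reported as `None` for a component with no outages, where it is undefined.

```python
from typing import Optional


def component_report(
    window_hours: float, outages_by_component: dict[str, list[float]]
) -> dict[str, tuple[float, Optional[float]]]:
    """Return (availability, MTBF in hours) for each component.

    Assumes each component's outages do not overlap with one another.
    """
    report = {}
    for name, outages in outages_by_component.items():
        uptime = window_hours - sum(outages)
        mtbf = uptime / len(outages) if outages else None
        report[name] = (uptime / window_hours, mtbf)
    return report


# Hypothetical outage durations (hours) per component
report = component_report(
    10.0,
    {"real-time dashboard": [0.25, 1.0], "historical reporting": [0.5]},
)
for name, (avail, mtbf) in report.items():
    print(f"{name}: availability {avail:.1%}, MTBF {mtbf} h")
```

Under these assumed durations, the dashboard comes out at 87.5% availability and the reporting component at 95%, which is more informative than the single 82.5% figure for the combined application.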

Measuring More Than Service Availability and Reliability

Problems in underlying systems don’t always result in complete application downtime; instead, they may cause performance degradation experienced only by users in certain regions or on certain end-user platforms. The following table summarizes factors that further complicate relying on availability and reliability metrics to define the quality of an application service.

| Type of Service Quality Issue | Example |
|---|---|
| Region | Many applications use content delivery networks (CDNs), which may suffer outages in a particular geographic region. |
| Platform | A bug may affect users of the Chrome browser but not users of the Firefox browser. |
| Performance | A database indexing problem may render an application too slow to use while it remains technically available. |
| Intermittence | Certain problems may affect only a tiny percentage of transactions, making them difficult to troubleshoot. |

The service quality problems described in the table above lead end users and service providers to interpret the terms ‘failed’ and ‘available’ differently.

From a service provider's perspective, the service may be flawless except for a subset of users in a small region or using a less common browser. Should the service be considered available or unavailable? The answer depends on which group of users you ask.

The more complex an application, the more difficult it is to interpret the meanings of the terms availability and reliability.

Service Level Indicators and Objectives

The SRE community’s answer to the complexity of interpreting ‘failed’ and ‘available’ is to use SLIs. One application service can have multiple SLIs defining an SLO.

For example, a service provider may set an objective to deliver an application programming interface (API) service to its advanced users with a response time of 200 milliseconds or less for up to 100 requests per second, 99.9% of the time, with an error rate of less than 0.5%.

| Service Level Indicator | Target |
|---|---|
| Availability | 99.90% |
| Latency | 200 milliseconds |
| Request rate | 100 requests per second |
| Error rate | 0.50% |
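Checking a reporting period against these targets can be sketched as a set of independent comparisons, one per SLI. The class and field names are hypothetical, the measurements are made up, and the request-rate limit is left out of the check; one reading of the example is that it bounds the load under which the other targets apply.

```python
from dataclasses import dataclass


@dataclass
class SloTargets:
    min_availability: float = 0.999  # 99.9% of the time
    max_latency_ms: float = 200.0    # response time target
    max_error_rate: float = 0.005    # errors must stay below 0.5%


@dataclass
class Measurements:
    availability: float
    latency_ms: float  # e.g. latency at a percentile agreed with customers
    error_rate: float


def slo_violations(m: Measurements, t: SloTargets) -> list[str]:
    """Return the names of the SLIs that miss their targets."""
    violations = []
    if m.availability < t.min_availability:
        violations.append("availability")
    if m.latency_ms > t.max_latency_ms:
        violations.append("latency")
    if m.error_rate >= t.max_error_rate:
        violations.append("error rate")
    return violations


# Hypothetical measurements for one reporting period
print(slo_violations(Measurements(0.9995, 240.0, 0.002), SloTargets()))
# prints ['latency']
```

In this made-up period the service is "available" by the 99.9% target, yet it still breaches its SLO because responses are too slow.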

These additional measurements help service providers set specific expectations for service quality and move beyond the oversimplified notions of availability and reliability. SLOs have increased in popularity because SaaS vendors depend on a web of third-party APIs and because end-user expectations have risen.

A Nuanced Approach to Measuring Service Quality With SLOs

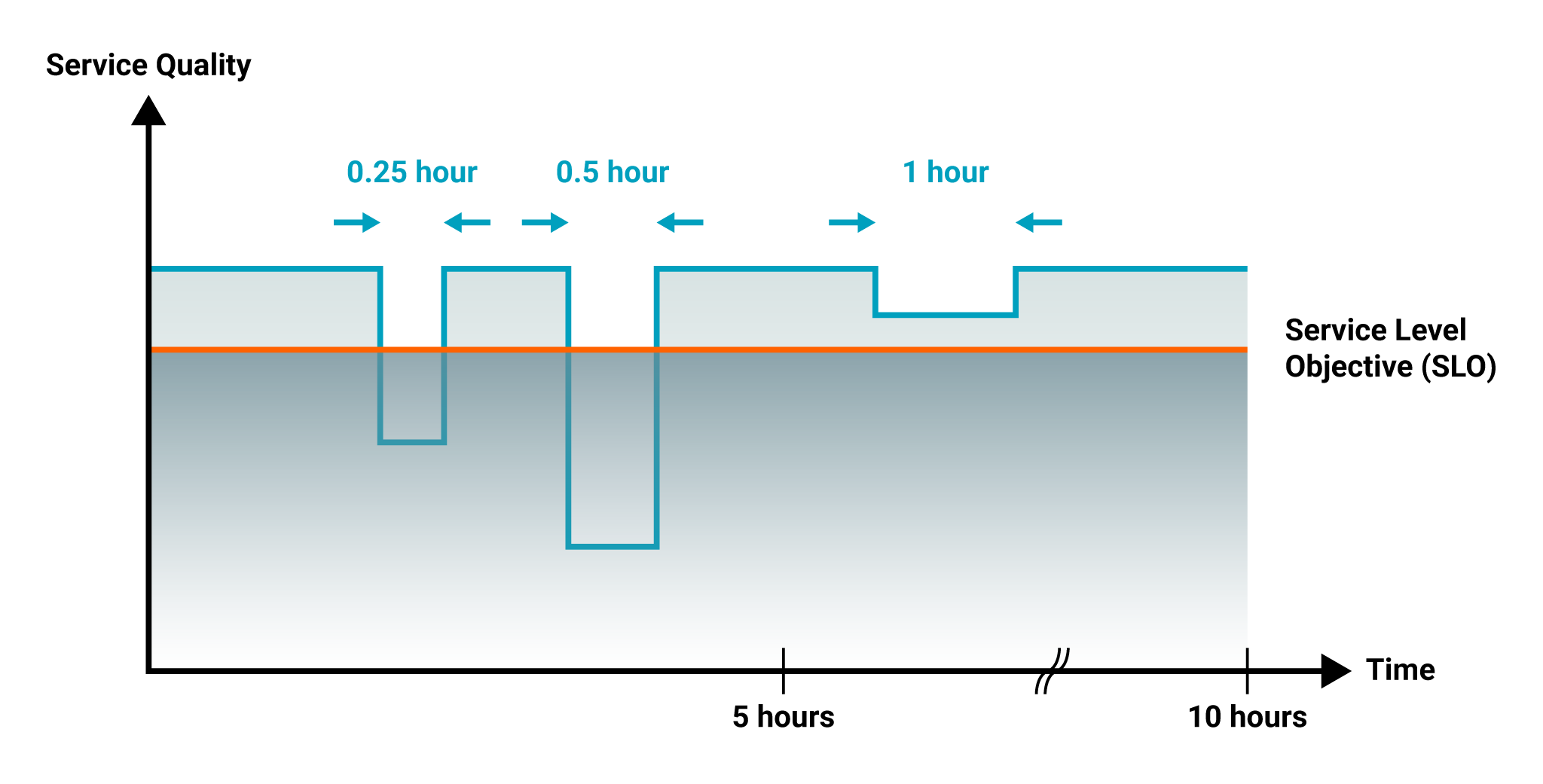

Let’s revisit our original example by superimposing all the complexities covered in our article. We started with a simple example of up and down to explain availability and reliability. We then added multiple application functionalities and degraded service quality concepts.

Now, imagine an application sustains two incidents involving degraded performance or a partial outage, each violating the SLOs covering the application’s overall latency, error rate, or availability. In our example, a third incident occurs later, degrading performance but not to the point of violating any of our preset SLO targets. The diagram illustrates all these incidents.

In this case, the two service degradations breached the target SLOs, while the third, longer incident did not constitute a violation.

In this updated example, we won’t calculate availability and reliability as we did in the previous examples. Instead, we will calculate the percentage of time the service operated without violating any of the SLOs. The two SLO-breaching incidents total 0.75 hours.

Percentage of time with no SLO breach = (10 - 0.75) / 10

Percentage of time with no SLO breach = 92.5%
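The calculation above only counts time spent in incidents that breached an SLO. A minimal sketch follows; the `Incident` type is hypothetical, and the individual durations (0.25 and 0.5 hours for the breaching incidents, 1.5 hours for the longer non-breaching one) are assumed values chosen to match the 0.75-hour total.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    duration_hours: float
    breached_slo: bool  # did this incident violate any SLO target?


def slo_compliance(window_hours: float, incidents: list[Incident]) -> float:
    """Fraction of the window with no SLO breach (assumes non-overlapping incidents)."""
    breach_time = sum(i.duration_hours for i in incidents if i.breached_slo)
    return (window_hours - breach_time) / window_hours


# Hypothetical durations: two breaching incidents totalling 0.75 h and a
# longer degradation that stayed within the SLO targets
incidents = [Incident(0.25, True), Incident(0.5, True), Incident(1.5, False)]
print(f"{slo_compliance(10, incidents):.1%}")  # prints 92.5%
```

The non-breaching incident is deliberately ignored: it degraded the service, but not enough to count against the objectives.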

Conclusion

The SRE community defines availability as the percentage of time an application or an infrastructure service is up and running. Reliability, on the other hand, is the likelihood of a service failure, typically expressed as an MTBF measured in days, hours, and minutes.

Service providers and customers may differ in their interpretations of what constitutes a failed or unavailable service. Users experiencing a painfully slow service will be upset even if the problem is confined to a small region or a subset of the application’s features.

This complexity has led SREs to rely on SLOs based on multiple SLIs, which give service providers and customers multidimensional criteria for agreeing on what constitutes an application’s utility to its users.