What Is Distributed Tracing?

Distributed tracing is vital for managing the performance of applications that use microservices and containerization.

Distributed Tracing Definition

Distributed tracing is a method to help engineering teams with application monitoring, especially applications architected using microservices. It helps pinpoint issues and identify root causes to address failures and suboptimal performance.

In an application consisting of several microservices, a request may require invoking multiple services. Accordingly, failure in one service may trigger failures in others. To clearly understand how each service performs in handling a request, distributed tracing tracks the request end-to-end and assigns a unique Trace ID to identify the request and its associated trace data. In general, this is achieved by adding instrumentation to the application code or by deploying auto-instrumentation in the application environment.

What Is a Trace?

A trace represents how a request spans various services within an application. A request can result from an end user's action or from an internal trigger, such as a scheduled job.

A trace is a collection of one or more spans. A span represents a single unit of work, such as a call from one service to another, and includes request and response data, timing information, and metadata such as logs and tags. Spans within a trace also have parent-child relationships representing how various services contributed to processing a request.

Distributed tracing also helps identify common paths in serving a request and the services most critical to the business. Using this information, an organization can deploy additional resources to isolate essential services from scenarios that can result in disruption.

Core Concepts of Distributed Tracing and Terminology

Monitoring and understanding the performance and behavior of complex, distributed systems with distributed tracing relies on a small set of concepts: spans, transactions, and trace context.

Spans

A span is a fundamental unit of work in a trace. It represents a single operation or a segment of the request's journey. Each span has a name, start time, duration, and metadata (such as tags and logs) that provide context about the operation. Spans can be nested within each other to show the hierarchical relationship between operations. For example, a span for a database query might be nested within a span for an API call.

Transactions

A transaction is a higher-level concept that represents a complete business operation or user action. It can consist of multiple traces and spans. For instance, a transaction might be a user placing an order, which involves multiple API calls, database queries, and other operations. Transactions help in understanding the end-to-end performance and behavior of a specific user action.

Trace Context

A trace context is a set of data propagated through the system to maintain the continuity of a trace. It includes identifiers such as Trace ID, Span ID, and Parent Span ID. These identifiers are passed along with the request as it moves from one service to another, allowing the tracing system to correlate and reconstruct the entire trace. Trace context is crucial for ensuring all spans within a trace are linked correctly.

This is how they work together:

- Traces provide a comprehensive view of a request's journey.

- Spans break down the trace into individual operations, each with its own start and end time.

- Transactions group related traces and spans to represent a complete user action.

- Trace context ensures all spans are correctly linked and can be reconstructed into a coherent trace.

Understanding these concepts is essential for effectively using distributed tracing to diagnose and optimize the performance of modern distributed applications.

OpenTelemetry

OpenTelemetry (OTel) is a vendor-neutral, open-source standard for instrumenting, generating, collecting, and exporting telemetry data (traces, metrics, and logs).

It unifies how applications are instrumented, allowing developers to write tracing code once using the OTel software development kits and APIs. This instrumentation can then be used to send data to any compatible back end (e.g., Jaeger, Zipkin, or commercial tools such as SolarWinds), preventing vendor lock-in and ensuring consistency across polyglot (multi-language) microservice environments.

How Does Distributed Tracing Work?

Distributed tracing works by tracking a single user request as it travels through every service and component in a distributed application, collecting detailed timing and metadata at each step. This allows you to reconstruct the request's entire journey, providing a holistic view of the system's performance.

This process relies on three fundamental concepts (traces, spans, and context propagation) and unfolds in four stages.

1. Instrumentation and Request Initiation

The first step in distributed tracing is to instrument your application code. This involves adding libraries or code that record data, either automatically or manually, whenever an operation begins and ends.

When a user request (such as clicking a button or loading a page) first enters the system, the tracing tool assigns it a globally unique identifier, called the Trace ID.

It also creates the initial unit of work, known as the root span.
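As a rough illustration of this step, the sketch below creates a root span for an incoming request. The `Span` class and the `start_trace` helper are hypothetical names used only for this example, not part of any tracing library. The ID sizes (16 random bytes for the Trace ID, 8 for the Span ID) are an assumption chosen to match the W3C Trace Context format.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    trace_id: str                   # shared by every span in the trace
    span_id: str                    # unique to this operation
    parent_span_id: Optional[str]   # None marks the root span
    name: str
    start_time: float = field(default_factory=time.time)


def start_trace(name: str) -> Span:
    """Create the root span for a request entering the system (hypothetical helper)."""
    return Span(
        trace_id=secrets.token_hex(16),  # 16 random bytes, 32 hex characters
        span_id=secrets.token_hex(8),    # 8 random bytes, 16 hex characters
        parent_span_id=None,
        name=name,
    )


root = start_trace("GET /checkout")
print(root.trace_id, root.span_id, root.parent_span_id)
```

In practice an instrumentation library performs this step for you; the point here is only that the Trace ID is generated once, at the edge of the system, and reused by every later span.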

2. The Anatomy of a Trace

The entire journey of a request is referred to as a trace. It is a tree structure composed of one or more spans.

Span: A span is the fundamental unit of work, representing a single operation within a service (e.g., an HTTP GET request, a database query, or a specific function call).

Each span records crucial information:

- Start and End Timestamps: Used to calculate the duration of the operation.

- Span ID: A unique ID for that specific operation.

- Parent Span ID: The ID of the span that called it, which is essential for building the parent-child relationship tree.

- Attributes (Tags): Key-value pairs with contextual data, such as the service name, endpoint URL, HTTP status code, or customer ID.
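The fields listed above can be sketched as a small recorder. This is a minimal, single-threaded illustration: the `span` context manager, the `finished_spans` list (a stand-in for an exporter), and the attribute names are all assumptions made for the example rather than the API of a real tracing library.

```python
import secrets
import time
from contextlib import contextmanager

finished_spans = []  # stand-in for an exporter; completed spans land here
_active = []         # stack of open spans (assumes a single thread)


@contextmanager
def span(name, **attributes):
    """Record one operation, nesting it under whichever span is currently open."""
    parent = _active[-1] if _active else None
    record = {
        "trace_id": parent["trace_id"] if parent else secrets.token_hex(16),
        "span_id": secrets.token_hex(8),
        "parent_span_id": parent["span_id"] if parent else None,
        "name": name,
        "attributes": dict(attributes),
        "start": time.perf_counter(),
    }
    _active.append(record)
    try:
        yield record
    finally:
        # Timestamps are captured even if the wrapped operation raises.
        record["end"] = time.perf_counter()
        record["duration"] = record["end"] - record["start"]
        _active.pop()
        finished_spans.append(record)


# A database query nested inside an API call.
with span("GET /orders", endpoint="/orders") as api:
    with span("SELECT orders", system="db") as query:
        time.sleep(0.01)  # simulated work
    api["attributes"]["http_status_code"] = 200

for s in finished_spans:
    print(s["name"], s["parent_span_id"], round(s["duration"], 4))
```

The inner span finishes first, so it appears first in `finished_spans`; the parent link, not the arrival order, is what later rebuilds the tree.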

3. Context Propagation

The critical mechanism that links all individual spans, even across service boundaries, is context propagation.

When a service that is handling a request (represented by a parent span) needs to call another service (whose work becomes a child span), it must pass the trace context along with the network request.

The trace context is typically injected into the request's HTTP headers (often using a standard such as W3C Trace Context). This context includes the Trace ID and the Span ID of the calling span, which becomes the Parent Span ID of the span created in the downstream service.

When the next service receives the request, its instrumentation library extracts this context from the headers. It then uses the received Trace ID to ensure the new span belongs to the same trace and the Parent Span ID to link the new span correctly to the trace tree.
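The inject and extract steps can be sketched with the W3C Trace Context `traceparent` header, whose value has the form `version-traceid-parentid-flags`. The function names `inject`, `extract`, and `start_child_span` are hypothetical, and the sketch assumes headers are held in a plain dict with lowercase keys; real HTTP header names are case-insensitive, and instrumentation libraries handle that detail.

```python
import re
import secrets

# version (2 hex) - trace-id (32 hex) - parent-id (16 hex) - flags (2 hex)
TRACEPARENT = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def inject(headers, trace_id, span_id, sampled=True):
    """Caller side: write the current trace context into outgoing headers."""
    flags = "01" if sampled else "00"
    headers["traceparent"] = f"00-{trace_id}-{span_id}-{flags}"


def extract(headers):
    """Callee side: read the trace context, or return None if absent or invalid."""
    match = TRACEPARENT.match(headers.get("traceparent", ""))
    if not match:
        return None
    version, trace_id, parent_span_id, flags = match.groups()
    # All-zero IDs and version "ff" are invalid under the W3C format.
    if version == "ff" or set(trace_id) == {"0"} or set(parent_span_id) == {"0"}:
        return None
    return {
        "trace_id": trace_id,
        "parent_span_id": parent_span_id,
        "sampled": int(flags, 16) & 1 == 1,
    }


def start_child_span(headers, name):
    """Create a span that joins the caller's trace, or starts a new one."""
    ctx = extract(headers)
    return {
        "trace_id": ctx["trace_id"] if ctx else secrets.token_hex(16),
        "span_id": secrets.token_hex(8),
        "parent_span_id": ctx["parent_span_id"] if ctx else None,
        "name": name,
    }


# Service A calls service B.
outgoing = {}
inject(outgoing, "4bf92f3577b34da6a3ce929d0e0e4736", "00f067aa0ba902b7")
child = start_child_span(outgoing, "charge card")
print(outgoing["traceparent"])
print(child["trace_id"], child["parent_span_id"])
```

Note that the caller sends its own Span ID; the receiving service records that value as the Parent Span ID of the span it creates.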

4. Data Collection, Storage, and Analysis

As the request completes its journey across various microservices and processes, the following operations occur:

Collection: Each service sends its completed span data to a central tracing back end, often by way of a Collector or Agent.

Storage and Reconstruction: The back end aggregates raw spans using the shared Trace ID and the parent-child relationships defined by the Span IDs and Parent Span IDs to reconstruct the end-to-end trace.

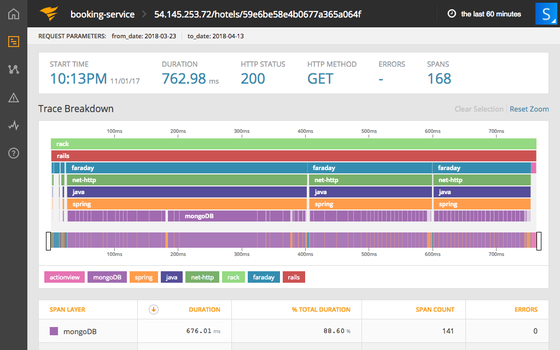

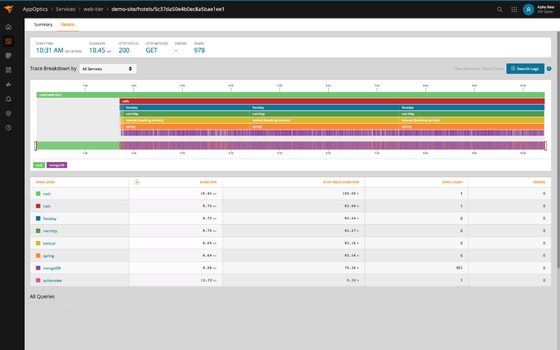

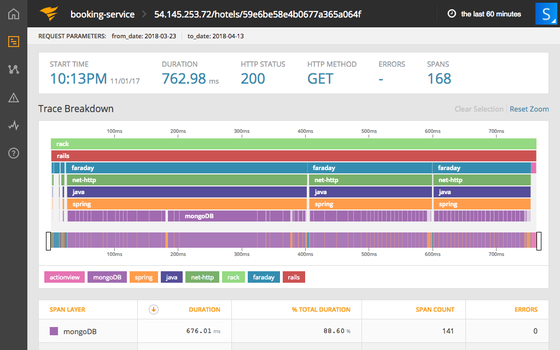

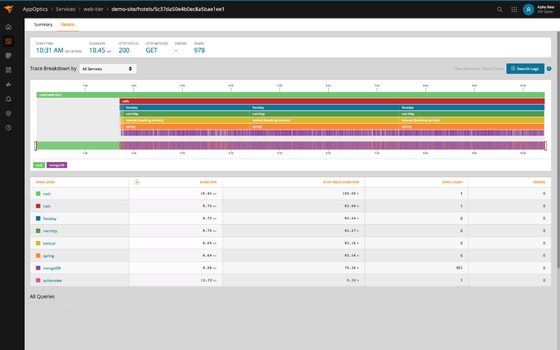

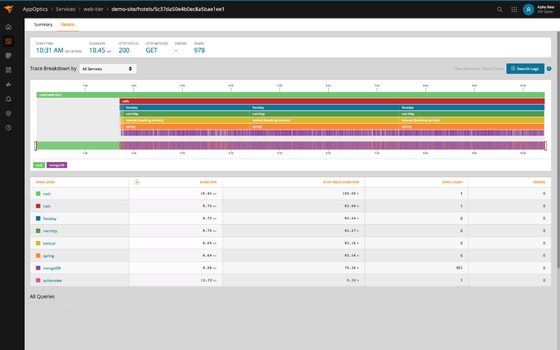

Visualization: The tracing tool then visualizes the trace, usually in a waterfall or flame graph format. This visualization shows the request path, highlighting the time spent in each service and operation.

By visualizing the data, engineers can quickly identify which service or operation is causing high latency (a bottleneck) or where an error is originating, significantly reducing the time needed for troubleshooting and debugging.
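Reconstruction and visualization can be sketched in a few lines. The span records below are invented sample data, and `build_tree` and `waterfall` are hypothetical helpers; a real back end would also have to cope with late, missing, or orphaned spans, which this sketch ignores.

```python
from collections import defaultdict

# Invented sample data: spans as they might arrive from four services.
spans = [
    {"trace_id": "t1", "span_id": "c", "parent_span_id": "a",
     "service": "payments", "name": "charge card", "start_ms": 45, "end_ms": 115},
    {"trace_id": "t1", "span_id": "a", "parent_span_id": None,
     "service": "gateway", "name": "GET /checkout", "start_ms": 0, "end_ms": 120},
    {"trace_id": "t1", "span_id": "d", "parent_span_id": "c",
     "service": "payments-db", "name": "INSERT payment", "start_ms": 60, "end_ms": 100},
    {"trace_id": "t1", "span_id": "b", "parent_span_id": "a",
     "service": "inventory", "name": "reserve stock", "start_ms": 10, "end_ms": 40},
]


def build_tree(spans, trace_id):
    """Group spans by Trace ID and index them by Parent Span ID."""
    children = defaultdict(list)
    root = None
    for s in spans:
        if s["trace_id"] != trace_id:
            continue
        if s["parent_span_id"] is None:
            root = s
        else:
            children[s["parent_span_id"]].append(s)
    for kids in children.values():
        kids.sort(key=lambda s: s["start_ms"])
    return root, children


def waterfall(root, children, scale=10):
    """Render the trace as text, one row per span, indented by depth."""
    lines = []

    def visit(s, depth):
        duration = s["end_ms"] - s["start_ms"]
        label = "  " * depth + s["service"] + ": " + s["name"]
        offset = " " * (s["start_ms"] // scale)
        bar = "#" * max(1, duration // scale)
        lines.append(f"{label:<34}|{offset}{bar} {duration} ms")
        for child in children[s["span_id"]]:
            visit(child, depth + 1)

    visit(root, 0)
    return lines


root, children = build_tree(spans, "t1")
lines = waterfall(root, children)
print("\n".join(lines))
```

Each row's bar starts at the span's offset from the beginning of the trace, so the longest bars and the gaps between them point to where time is being spent.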

Distributed Tracing versus Logging

Distributed tracing tracks each request and its interaction with services and components in the application environment, whereas logging continually captures the state of a single service, component, or host machine. Because logging is specific to each service or host, it can generate substantial amounts of data; log management tools typically gather logs from various sources and rely on structured logging to make that data easier to sift through. Distributed tracing, by contrast, identifies where an issue is but might not provide enough insight to understand it in depth. In such cases, log data can help you dig deeper, as it is more granular. This is also why some application performance monitoring (APM) tools attach relevant log data to traces.

Benefits of Distributed Tracing

Distributed tracing is a common feature among some of the best APM tools. These tools use distributed tracing to deliver the following benefits:

- Visibility: Distributed tracing provides end-to-end visibility into the application environment. Some APM tools leverage distributed tracing to visually represent service dependencies and the overall application environment. This is especially beneficial if an application comprises hundreds of microservices running across multiple data centers and availability regions. As the number of services and infrastructure components increases, it becomes challenging to manage, maintain, and track their contribution to the application environment. Visualizing application environments brings clarity to the chaos and helps quickly identify the services responsible for problems.

- Performance: As the request and response times of each service are tracked, it becomes easier to understand performance and then scale or troubleshoot only the individual services required to improve overall performance and system health. APM tools also provide in-depth visualization of performance metrics to help identify performance variance and response times across different conditions and determine baseline performance. For example, when a change is applied to the application environment, its performance impact can be measured against that baseline to assess its effect on overall performance.

- Root Cause Analysis: A distributed application can serve a very large number of requests; for example, consider a distributed e-commerce application serving millions of customers a day. This produces vast amounts of trace data, and unless the traces are correlated, distributed tracing provides little value. Because an error or issue in one service or component may trigger subsequent failures in other services, analyzing and correlating traces is critical for identifying root causes and fixing problems early. Some APM tools continuously correlate traces and related events to proactively report performance issues and bottlenecks.
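To make the root cause point concrete, here is one simple heuristic, offered as an assumption rather than a description of how any APM tool works: when an error cascades upward, the likeliest origin is an errored span none of whose children errored. The span records and the `root_cause_candidates` helper are hypothetical.

```python
def root_cause_candidates(spans):
    """Return errored spans that have no errored children (the deepest failures)."""
    parents_of_errors = {s["parent_span_id"] for s in spans if s["error"]}
    return [
        s for s in spans
        if s["error"] and s["span_id"] not in parents_of_errors
    ]


# Invented sample trace: a database failure surfaces as errors in two callers.
trace = [
    {"span_id": "a", "parent_span_id": None, "service": "gateway", "error": True},
    {"span_id": "b", "parent_span_id": "a", "service": "inventory", "error": False},
    {"span_id": "c", "parent_span_id": "a", "service": "payments", "error": True},
    {"span_id": "d", "parent_span_id": "c", "service": "payments-db", "error": True},
]

for s in root_cause_candidates(trace):
    print(s["service"])
```

Here the gateway and payments spans both report errors, but only because the database span beneath them failed, so the heuristic singles out `payments-db`.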

Distributed Tracing Tools and Standards

Distributed tracing is a critical tool for monitoring and optimizing the performance of modern distributed systems, particularly those built with microservices. It provides end-to-end visibility, maps request paths across microservices, identifies latency bottlenecks, and isolates performance issues down to the specific service.

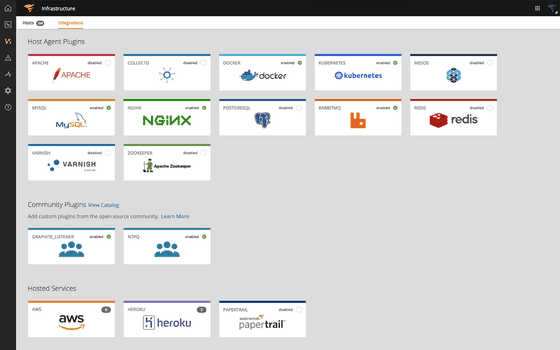

SolarWinds® Observability is a comprehensive solution that unifies metrics, logs, and traces. By integrating these critical data types, teams can gain a complete understanding of their applications' health and performance across hybrid and cloud-native environments.

Key Distributed Tracing Capabilities

- End-to-End Visibility: The platform's core tracing feature automatically ties together the path of an entire request into an interactive trace waterfall view. This visualization is essential for seeing how much time each service spends processing a request, allowing developers and operations teams to quickly identify where latency is introduced.

- Code-Level Diagnostics: SolarWinds goes beyond high-level request tracking by offering deep, code-level insights. Features such as Live Code Profiling enable users to pinpoint performance issues to the exact line of code, while Exception Tracking provides a summary of errors and exceptions in the context of service execution.

- Service Dependency Mapping: SolarWinds solutions automatically visualize the dependencies and relationships between services in a system. This helps teams understand the complex architecture and the impact of changes.

- Contextual and Unified Data Correlation: A key strength of the SolarWinds approach is its ability to accelerate root cause analysis. The system links trace data with application performance metrics and logs. This correlation allows users to jump directly from a slow trace or an error to the relevant logs and metrics from the same time period, reducing mean time to resolution.

- Flexible Deployment: SolarWinds Observability offers deployment flexibility. It is available as a software-as-a-service (SaaS) solution for simplified cloud-native management and as a self-hosted option to meet the requirements of on-premises and hybrid IT environments.

Complementary Roles in Observability

- Distributed Tracing and Metrics: While metrics provide a high-level overview of system performance, distributed tracing can drill down into specific requests to understand why certain metrics behave the way they do. For example, if you notice a spike in request latency, distributed tracing can help you identify which service or component is causing the delay.

- Distributed Tracing and Logging: Logs provide detailed, low-level information about what is happening in the system, while distributed traces provide a high-level, end-to-end view. By correlating Trace IDs with log entries, you can get a more complete picture of a request's lifecycle and the specific events that occurred.

- Metrics and Logging: Metrics can help you identify when something is wrong, and logs can help you understand why. For example, if a metric shows an increase in error rates, you can use logs to find the specific error messages and stack traces that are causing the issue.
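Correlating Trace IDs with log entries, as described above, usually means stamping every log line with the current trace context. The sketch below does this with Python's standard `logging` module; the `TraceContextFilter` class and the `current` dict (a stand-in for whatever holds the active span) are assumptions made for this example.

```python
import io
import logging


class TraceContextFilter(logging.Filter):
    """Attach the active Trace ID and Span ID to every log record."""

    def __init__(self, get_context):
        super().__init__()
        self._get_context = get_context

    def filter(self, record):
        ctx = self._get_context()
        record.trace_id = ctx.get("trace_id", "-")
        record.span_id = ctx.get("span_id", "-")
        return True  # never drop the record, only enrich it


# Stand-in for the active span's context (hypothetical values).
current = {"trace_id": "4bf92f3577b34da6a3ce929d0e0e4736", "span_id": "00f067aa0ba902b7"}

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter(
    "%(levelname)s trace_id=%(trace_id)s span_id=%(span_id)s %(message)s"
))

logger = logging.getLogger("payments")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)
logger.addFilter(TraceContextFilter(lambda: current))

logger.error("card declined")
print(stream.getvalue(), end="")
```

With the Trace ID present in each line, a log search for that ID returns every event recorded while the request was being processed, across all services that log it.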

What Is Distributed Tracing?

Distributed Tracing Definition

Distributed tracing is a method to help engineering teams with application monitoring, especially applications architected using microservices. It helps pinpoint issues and identify root causes to address failures and suboptimal performance.

In an application consisting of several microservices, a request may require invoking multiple services. Accordingly, failure in one service may trigger failures in others. To clearly understand how each service performs in handling a request, distributed tracing tracks the request end-to-end and assigns a unique Trace ID to identify the request and its associated trace data. In general, this is achieved by adding instrumentation to the application code or by deploying auto-instrumentation in the application environment.

What Is a Trace?

A trace represents how a request spans various services within an application. A request can result from an end user's action or due to an internal trigger, such as a scheduled job.

A trace is a collection of one or more spans. A span represents a unit of work done between two services and includes request and response data, timespan, and metadata such as logs and tags. Spans within a trace also have parent-child relationships representing how various services contributed to processing a request.

Distributed tracing also helps identify common paths in serving a request and the services most critical to the business. Using this information, an organization can deploy additional resources to isolate essential services from scenarios that can result in disruption.

Core Concepts of Distributed Tracing and Terminology

Distributed tracing is a method for monitoring and understanding the performance and behavior of complex, distributed systems, especially those built using microservices.

Spans

A span is a fundamental unit of work in a trace. It represents a single operation or a segment of the request's journey. Each span has a name, start time, duration, and metadata (such as tags and logs) that provide context about the operation. Spans can be nested within each other to show the hierarchical relationship between operations. For example, a span for a database query might be nested within a span for an API call.

Transactions

A transaction is a higher-level concept that represents a complete business operation or user action. It can consist of multiple traces and spans. For instance, a transaction might be a user placing an order, which involves multiple API calls, database queries, and other operations. Transactions help in understanding the end-to-end performance and behavior of a specific user action.

Trace Context

A trace context is a set of data propagated through the system to maintain the continuity of a trace. It includes identifiers such as Trace ID, Span ID, and Parent Span ID. These identifiers are passed along with the request as it moves from one service to another, allowing the tracing system to correlate and reconstruct the entire trace. Trace context is crucial for ensuring all spans within a trace are linked correctly.

This is how they work together:

- Traces provide a comprehensive view of a request's journey.

- Spans break down the trace into individual operations, each with its own start and end time.

- Transactions group related traces and spans to represent a complete user action.

- Trace context ensures all spans are correctly linked and can be reconstructed into a coherent trace.

Understanding these concepts is essential for effectively using distributed tracing to diagnose and optimize the performance of modern distributed applications.

OpenTelemetry

OpenTelemetry (OTel) is a vendor-neutral, open-source standard for instrumenting, generating, collecting, and exporting telemetry data (traces, metrics, and logs).

It unifies how applications are instrumented, allowing developers to write tracing code once using the OTel software development kits and APIs. This instrumentation can then be used to send data to any compatible back end (e.g., Jaeger, Zipkin, or commercial tools such as SolarWinds), preventing vendor lock-in and ensuring consistency across polyglot (multi-language) microservice environments.

How Does Distributed Tracing Work?

Distributed tracing works by tracking a single user request as it travels through every service and component in a distributed application, collecting detailed timing and metadata at each step. This allows you to reconstruct the request's entire journey, providing a holistic view of the system's performance.

This process relies on three fundamental concepts: traces, spans, and context propagation.

1. Instrumentation and Request Initiation

The first step in distributed tracing is to instrument your application code. This involves adding libraries or code that automatically or manually records data whenever an operation begins and ends.

When a user request (such as clicking a button or loading a page) first enters the system, the tracing tool assigns it a globally unique identifier, called the Trace ID.

It also creates the initial unit of work, known as the root span.

2. The Anatomy of a Trace

The entire journey of a request is referred to as a trace. It is a tree structure composed of multiple spans.

Span: A span is the fundamental unit of work, representing a single operation within a service (e.g., an HTTP GET request, a database query, or a specific function call).

Each span records crucial information:

- Start and End Timestamps: They are used to calculate the duration of the operation.

- Span ID: It is a unique ID for that specific operation.

- Parent Span ID: It is the ID of the span that called it, which is essential for building the parent-child relationship tree.

- Attributes (Tags): These are key-value pairs with contextual data, such as the service name, endpoint URL, HTTP status code, or customer ID.

3. Context Propagation

The critical mechanism that links all individual spans, even across service boundaries, is context propagation.

When a service receives a request (a parent span) and needs to call another service (to create a child span), it must pass the trace context along with the network request.

The trace context is typically injected into the request's HTTP headers (often using a standard such as W3C Trace Context). This context includes the unique Trace ID and the Parent Span ID of the calling service.

When the next service receives the request, its instrumentation library extracts this context from the headers. It then uses the received Trace ID to ensure the new span belongs to the same trace and the Parent Span ID to link the new span correctly to the trace tree.

4. Data Collection, Storage, and Analysis

As the request completes its journey across various microservices and processes, the following operations occur:

Collection: Each service sends its completed span data to a central tracing back end (often called a Collector or Agent).

Storage and Reconstruction: The back end aggregates raw spans using the shared Trace ID and the parent-child relationships defined by the Span IDs to reconstruct the end-to-end trace.

Visualization: The tracing tool then visualizes the trace, usually in a waterfall or flame graph format. This visualization shows the request path, highlighting the time spent in each service and operation.

By visualizing the data, engineers can quickly identify which service or operation is causing high latency (a bottleneck) or where an error is originating, significantly reducing the time needed for troubleshooting and debugging.

Distributed Tracing versus Logging

While distributed tracing tracks each request and its interaction with services and components in the application environment, logging continually captures the state of a service, component, or host machine. However, logging is specific to each service or host machine and can generate substantial amounts of data. Generally, log management tools gather logs from various sources and use structured logging to make it easier to sift through the data. On the other hand, distributed tracing identifies where the issue is, but it might not necessarily provide enough insight to understand the problem in depth. In such cases, log data can help you dig deeper into the problem as it provides more granular data. This is also why some application performance monitoring (APM) tools attach relevant log data to traces.

Benefits of Distributed Tracing

Distributed tracing is a common feature among some of the best APM tools. APM tools drive the following benefits using distributed tracing:

- Visibility: Distributed tracing provides end-to-end visibility into the application environment. Some APM tools leverage distributed tracing to visually represent service dependencies and the overall application environment. This is especially beneficial if an application comprises hundreds of microservices running across multiple data centers and availability regions. As the number of services and infrastructure components increases, it becomes challenging to manage, maintain, and track their contribution to the application environment. Visualizing application environments brings clarity to the chaos and helps quickly identify the services responsible for problems.

- Performance: As the request and response times of each service are tracked, it becomes easier to understand performance and then scale or troubleshoot only the individual services required to improve overall performance and system health. APM tools also provide in-depth visualization of performance metrics to help identify performance variance and response times across different conditions and determine baseline performance. For example, when a change is applied to the application environment, its performance impact can be benchmarked to analyze systemic effects on overall performance in the future.

- Root Cause Analysis: A distributed application could be serving hundreds of thousands of requests per day; for example, consider a distributed e-commerce application serving millions of customers a day. This produces vast amounts of trace data, and unless the traces are correlated, distributed tracing provides little value. Because an error or issue in one service or component may trigger subsequent failures in other services, analyzing and correlating traces is critical for identifying root causes and fixing problems early. Some APM tools continuously correlate traces and related events to proactively report performance issues and bottlenecks.

Distributed Tracing Tools and Standards

Distributed tracing is a critical tool for monitoring and optimizing the performance of modern distributed systems, particularly those built with microservices. It provides end-to-end visibility, maps request paths across microservices, identifies latency bottlenecks, and isolates performance issues down to the specific service.

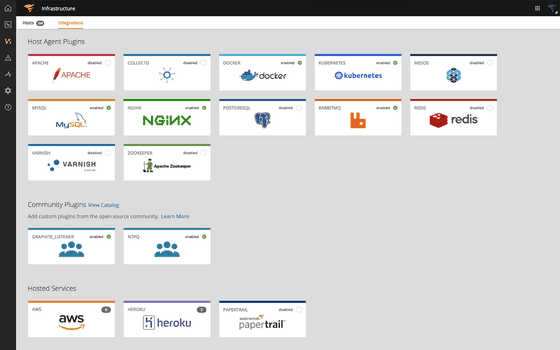

SolarWinds® Observability is a comprehensive solution that unifies metrics, logs, and traces. By integrating these critical data types, teams can gain a complete understanding of their applications' health and performance across hybrid and cloud-native environments.

Key Distributed Tracing Capabilities

- End-to-End Visibility: The platform's core tracing feature automatically ties together the path of an entire request into an interactive trace waterfall view. This visualization is essential for seeing how much time each service spends processing a request, allowing developers and operations teams to quickly identify where latency is introduced.

- Code-Level Diagnostics: SolarWinds goes beyond high-level request tracking by offering deep, code-level insights. Features such as Live Code Profiling enable users to pinpoint performance issues to the exact line of code, while Exception Tracking provides a summary of errors and exceptions in the context of service execution.

- Service Dependency Mapping: SolarWinds solutions automatically visualize the dependencies and relationships between services in a system. This helps teams understand the complex architecture and the impact of changes.

- Contextual and Unified Data Correlation: A key strength of the SolarWinds approach is its ability to accelerate root cause analysis. The system links trace data with application performance metrics and logs. This correlation allows users to jump directly from a slow trace or an error to the relevant logs and metrics from the same time period, reducing mean time to resolution.

- Flexible Deployment: SolarWinds Observability offers deployment flexibility, available as a software as a service solution for simplified cloud-native management and as a self-hosted option to meet the requirements of on-premises and hybrid IT environments.

Complementary Roles in Observability

- Distributed Tracing and Metrics: While metrics provide a high-level overview of system performance, distributed tracing can drill down into specific requests to understand why certain metrics behave the way they do. For example, if you notice a spike in request latency, distributed tracing can help you identify which service or component is causing the delay.

- Distributed Tracing and Logging: Logs provide detailed, low-level information about what is happening in the system, while distributed traces provide a high-level, end-to-end view. By correlating Trace IDs with log entries, you can get a more complete picture of a request's lifecycle and the specific events that occurred.

- Metrics and Logging: Metrics can help you identify when something is wrong, and logs can help you understand why. For example, if a metric shows an increase in error rates, you can use logs to find the specific error messages and stack traces that are causing the issue.

Unify and extend visibility across the entire SaaS technology stack supporting your modern and custom web applications.